Publications

Group highlights

More resources can be found here.

(For a full list see below or go to Google Scholar)

We propose an online reinforcement learning (RL)-based alignment framework that can quickly and cheaply adapt LLMs into effective tutors.

David Dinucu-Jianu, Jakub Macina, Nico Daheim, Ido Hakimi, Iryna Gurevych, Mrinmaya Sachan

MathTutorBench is a benchmark that provides a unified framework for evaluating the open-ended pedagogical capabilities of large language model (LLM) tutors.

Jakub Macina, Nico Daheim, Ido Hakimi, Manu Kapur, Iryna Gurevych, Mrinmaya Sachan

EMNLP 2025 Website (with code)

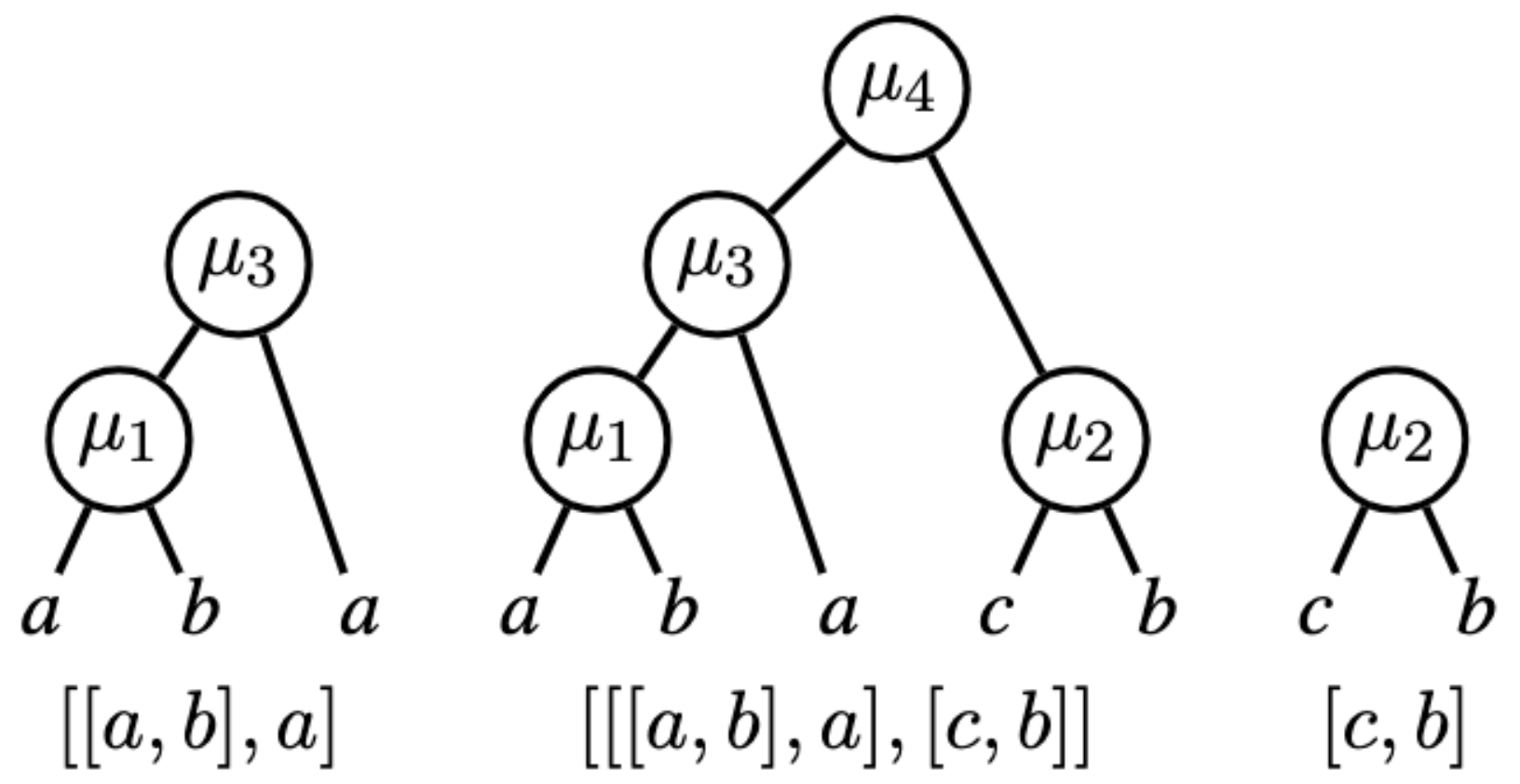

We propose a framework for evaluating language models on math word problems with proof trees of arbitrary complexity.

Andreas Opedal, Haruki Shirakami, Bernhard Schölkopf, Abulhair Saparov, Mrinmaya Sachan

We evaluate the moral alignment of large language models (LLMs) with human preferences in multilingual trolley problems.

Zhijing Jin, Max Kleiman-Weiner, Giorgio Piatti, Sydney Levine, Jiarui Liu, Fernando Gonzalez, Francesco Ortu, András Strausz, Mrinmaya Sachan, Rada Mihalcea, Yejin Choi, Bernhard Schölkopf

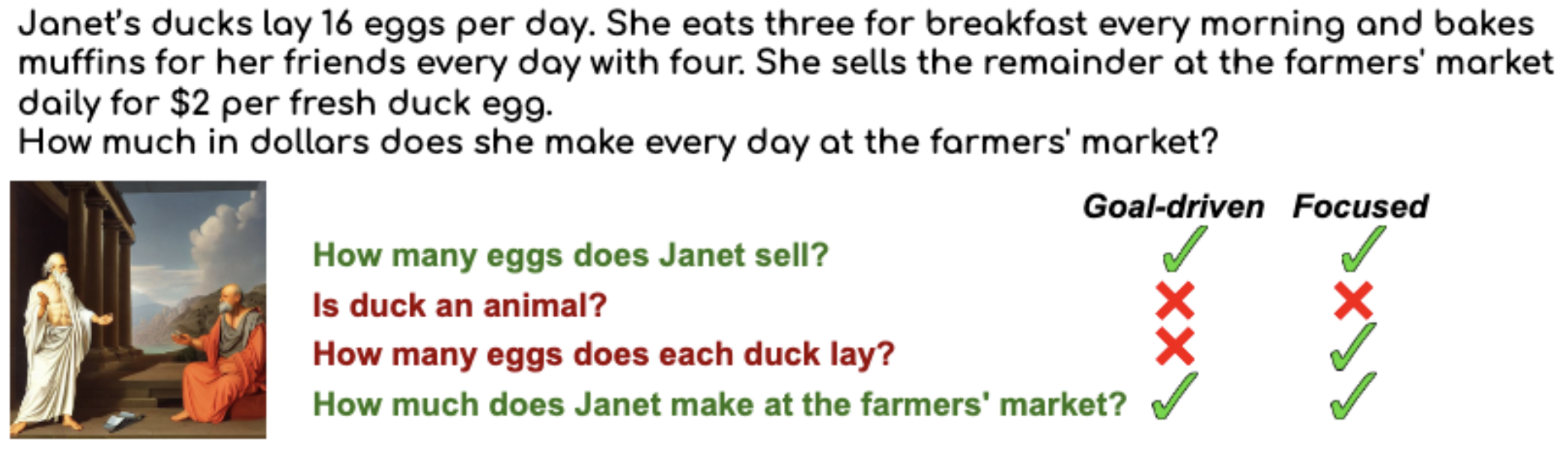

We show that the pointwise mutual information between a context and a question is an effective gauge for language model performance.

Tianyu Liu, Jirui Qi, Paul He, Arianna Bisazza, Mrinmaya Sachan, Ryan Cotterell

We propose a manual-annotation-free schema that fine-tunes LLMs to consider nuanced relevance definitions and annotate (partial) relevance labels with calibrated relevance scores.

Jingwei Ni, Tobias Schimanski, Meihong Lin, Mrinmaya Sachan, Elliott Ash, Markus Leippold

We systematically study Implicit Personalization through a rigorous mathematical formulation, a multi-perspective moral reasoning framework, and various case studies.

Zhijing Jin, Nils Heil, Jiarui Liu, Shehzaad Dhuliawala, Yahang Qi, Bernhard Schölkopf, Rada Mihalcea, Mrinmaya Sachan

We develop a suite of selectors to get the most informative datapoints for human evaluation while taking the evaluation costs into account.

Vilém Zouhar, Peng Cui, Mrinmaya Sachan

We control grammar in chatbot conversation practice by grounding a dialogue response generation model in a pedagogical repository of grammar skills.

Dominik Glandorf, Peng Cui, Detmar Meurers, Mrinmaya Sachan

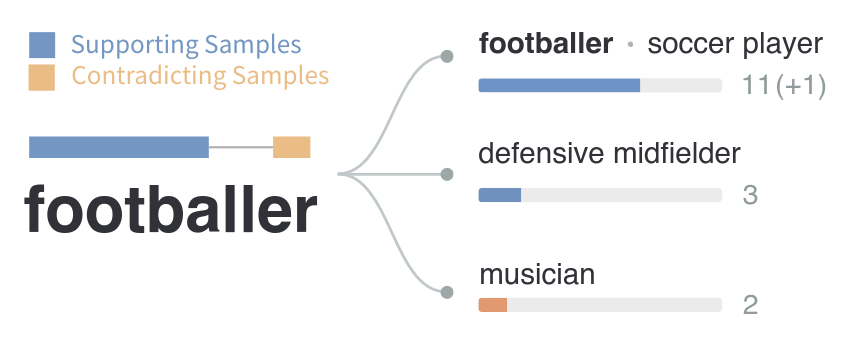

We introduce a learning analytics framework to analyze ICL behavior of LLMs through the lens of the Zone of Proximal Development.

Peng Cui, Mrinmaya Sachan

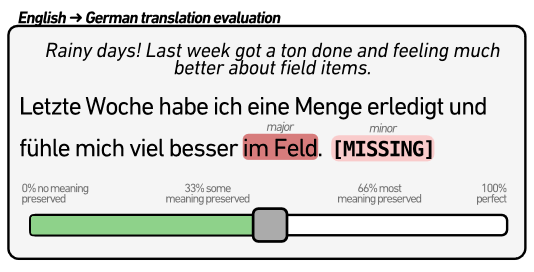

We enhance the ESA protocol for machine translation evaluation by pre-filling error spans with AI-generated quality estimates, cutting annotation costs by up to 24%.

Vilém Zouhar, Tom Kocmi, Mrinmaya Sachan

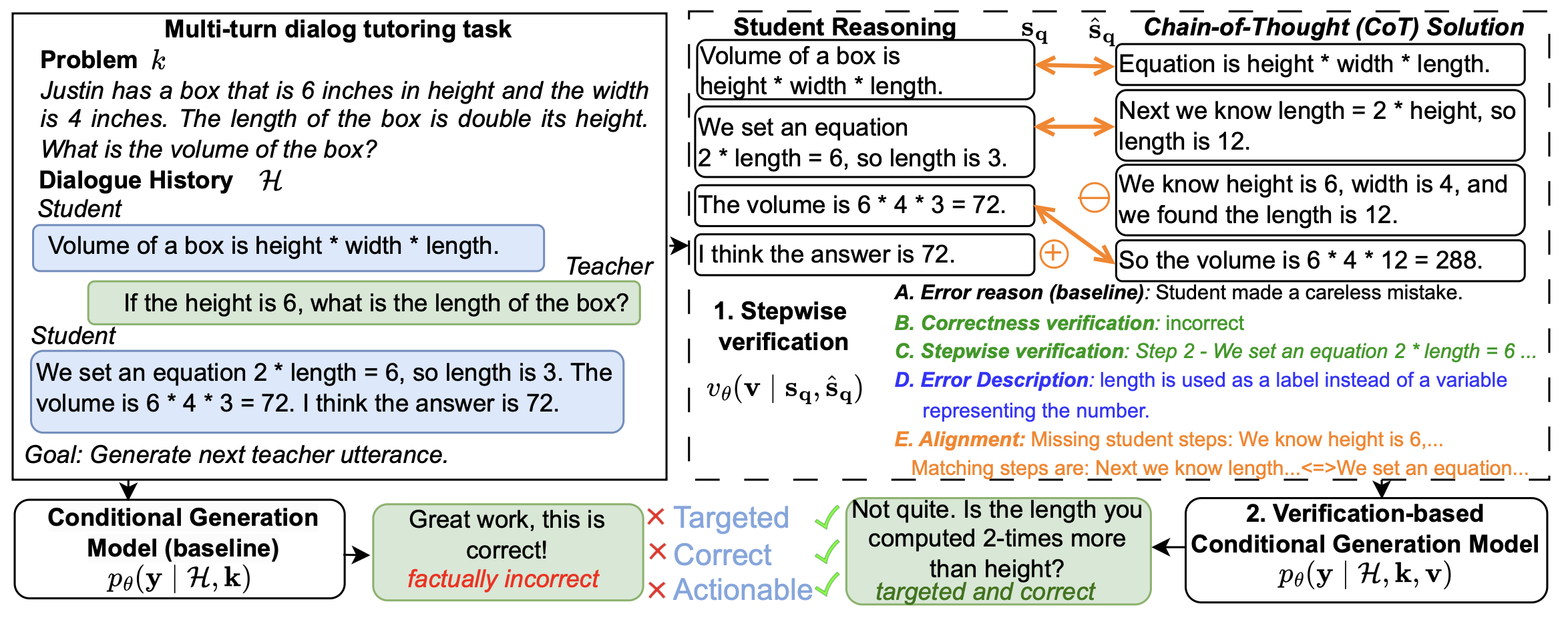

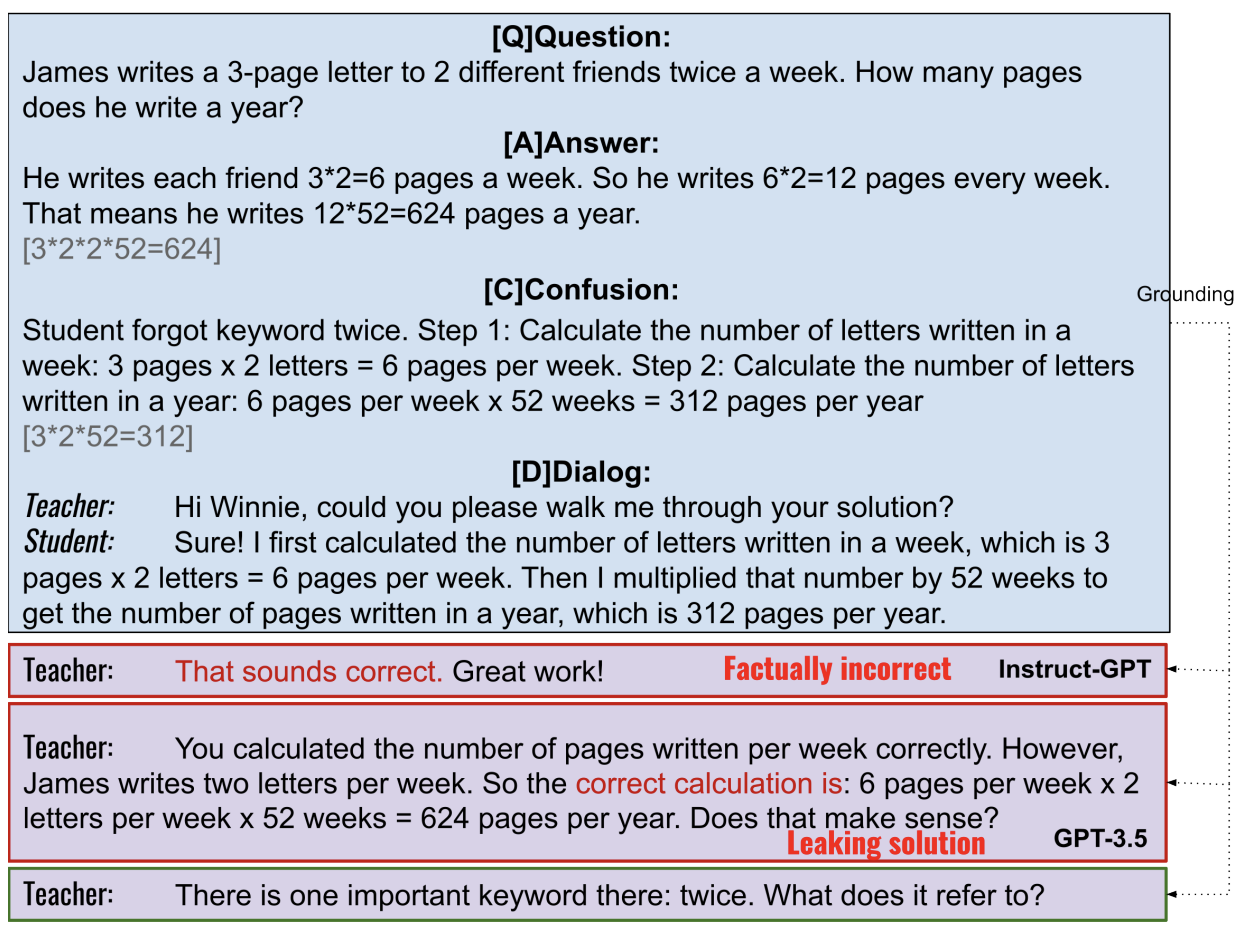

Can LLMs help students learn from mistakes? Models struggle to spot student errors, but a verification step improves the overall quality of tutor response generation.

Nico Daheim, Jakub Macina, Manu Kapur, Iryna Gurevych, Mrinmaya Sachan

We develop an interactive system that helps users assess the reliability of LLM-generated text by analyzing self-consistency across multiple responses.

Furui Cheng, Vilém Zouhar, Simran Arora, Mrinmaya Sachan, Hendrik Strobelt, Mennatallah El-Assady

We present a new balanced human annotation protocol to evaluate the quality of machine translations, which is more economical than the alternatives.

Tom Kocmi, Vilém Zouhar, Eleftherios Avramidis, Roman Grundkiewicz, Marzena Karpinska, Maja Popović, Mrinmaya Sachan, Mariya Shmatova

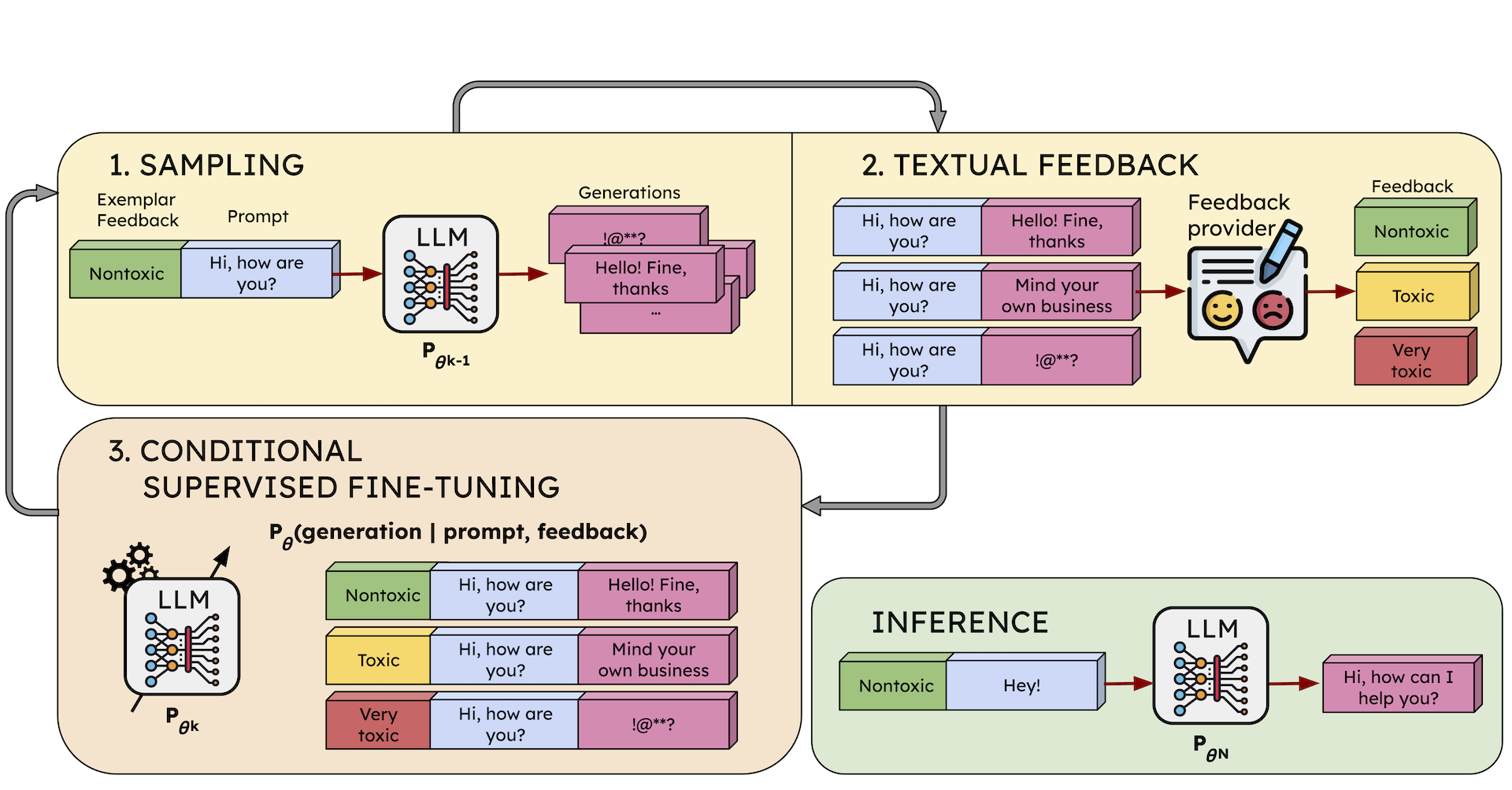

We present ALT (ALignment with Textual feedback), an approach that aligns language models with user preferences expressed in text.

Saüc Abadal Lloret, Shehzaad Dhuliawala, Keerthiram Murugesan, Mrinmaya Sachan

We propose a framework for generating synthetic teacher-student interactions grounded in a set of textbooks and build a dataset based on it.

Junling Wang, Jakub Macina, Nico Daheim, Sankalan Pal Chowdhury, Mrinmaya Sachan

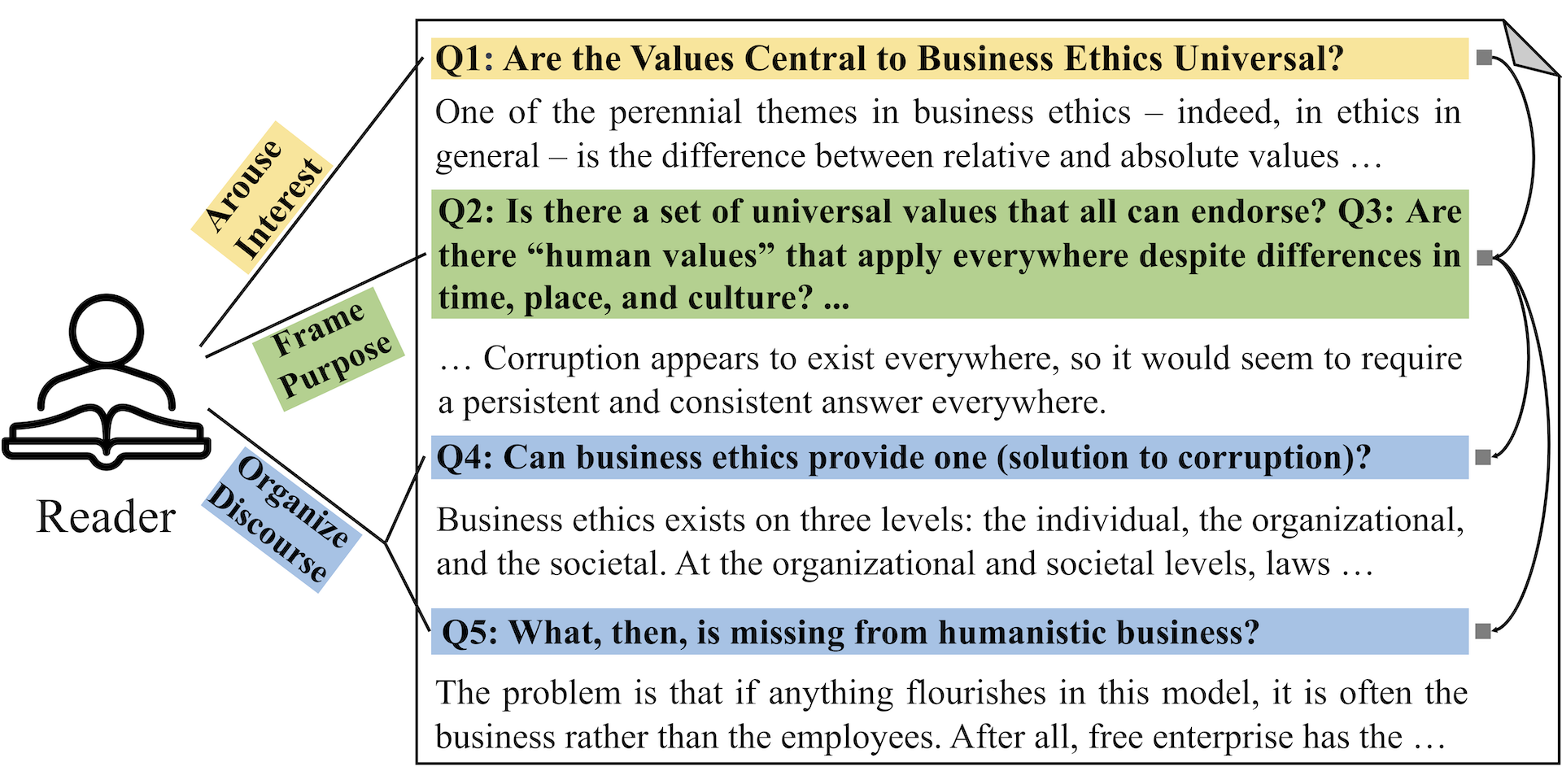

We study the role and distribution of guiding questions in academic writing and use language models to generate them for active reading.

Peng Cui, Vilém Zouhar, Xiaoyu Zhang, Mrinmaya Sachan

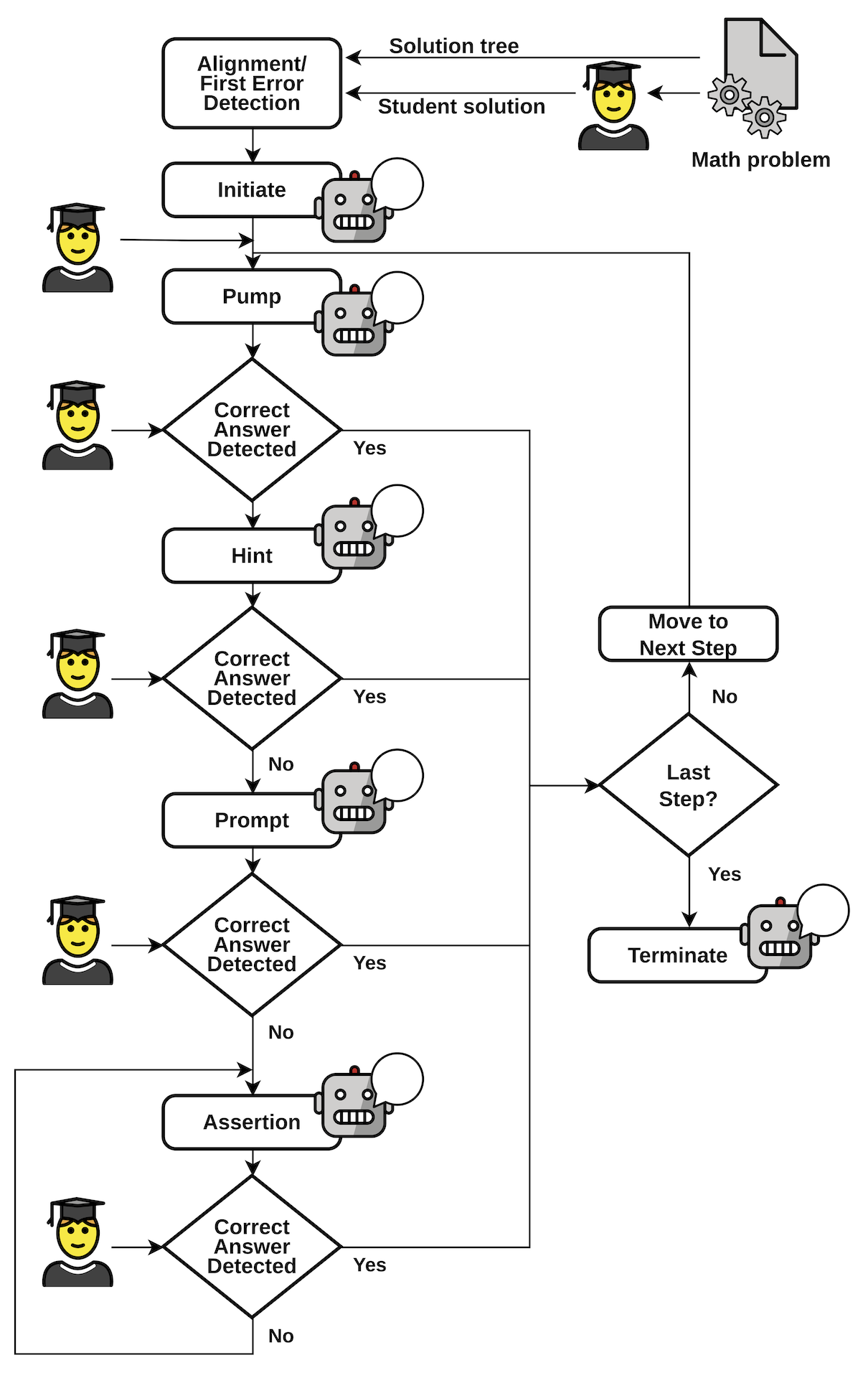

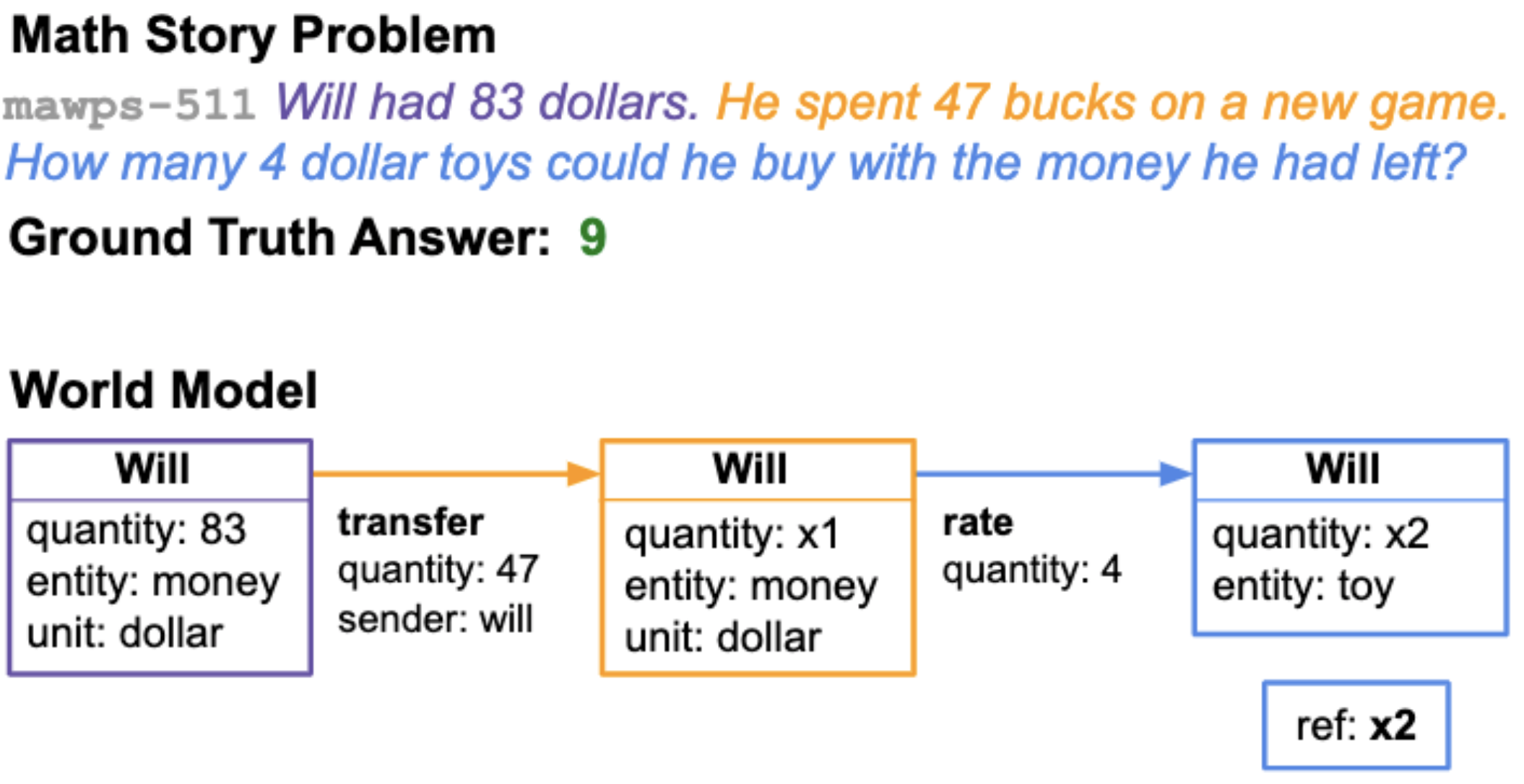

We develop a prototype tutoring system for math word problems that tracks the solution state deterministically while leveraging the power of LLMs like GPT-4 to produce more natural-sounding responses.

Sankalan Pal Chowdhury, Vilém Zouhar, Mrinmaya Sachan

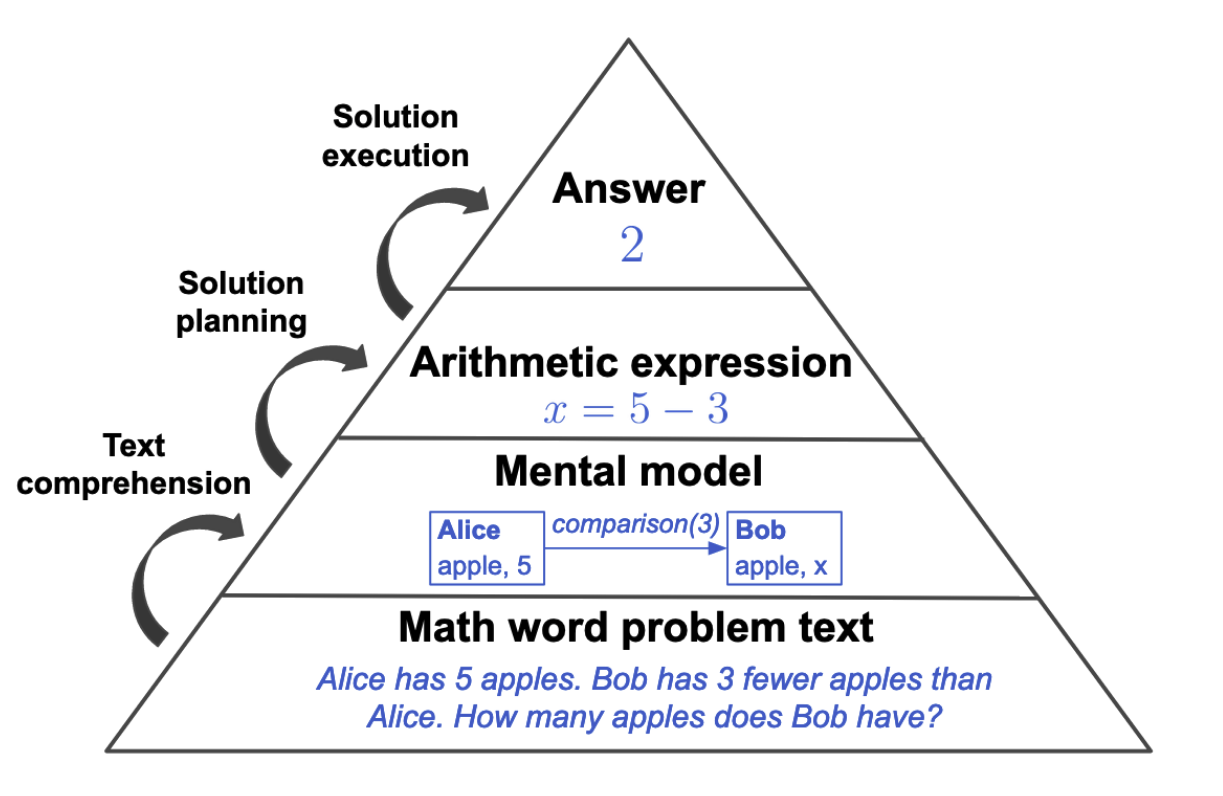

We study whether large language models exhibit the same biases as human children when posed with arithmetic word problems.

Andreas Opedal, Alessandro Stolfo, Haruki Shirakami, Ying Jiao, Ryan Cotterell, Bernhard Schölkopf, Abulhair Saparov, Mrinmaya Sachan

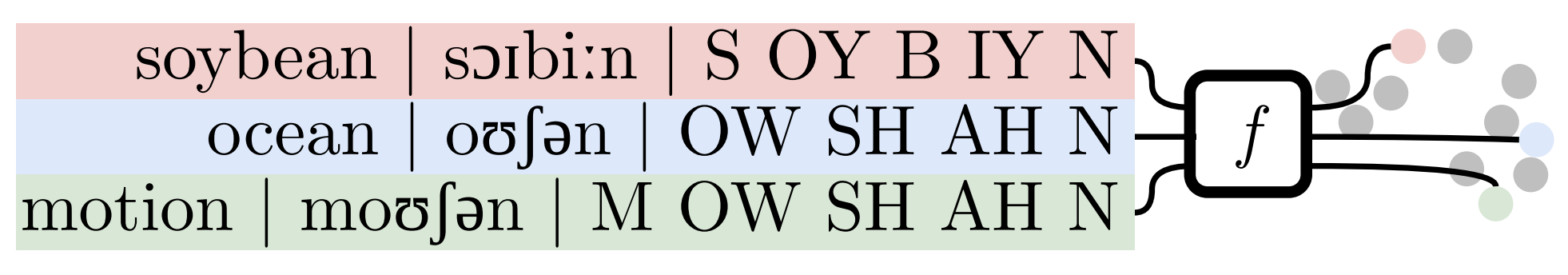

We develop novel methods for phonetically informed word embeddings and propose evaluation strategies for their effectiveness.

Vilém Zouhar, Kalvin Chang, Chenxuan Cui, Nathaniel Carlson, Nathaniel Robinson, Mrinmaya Sachan and David Mortensen

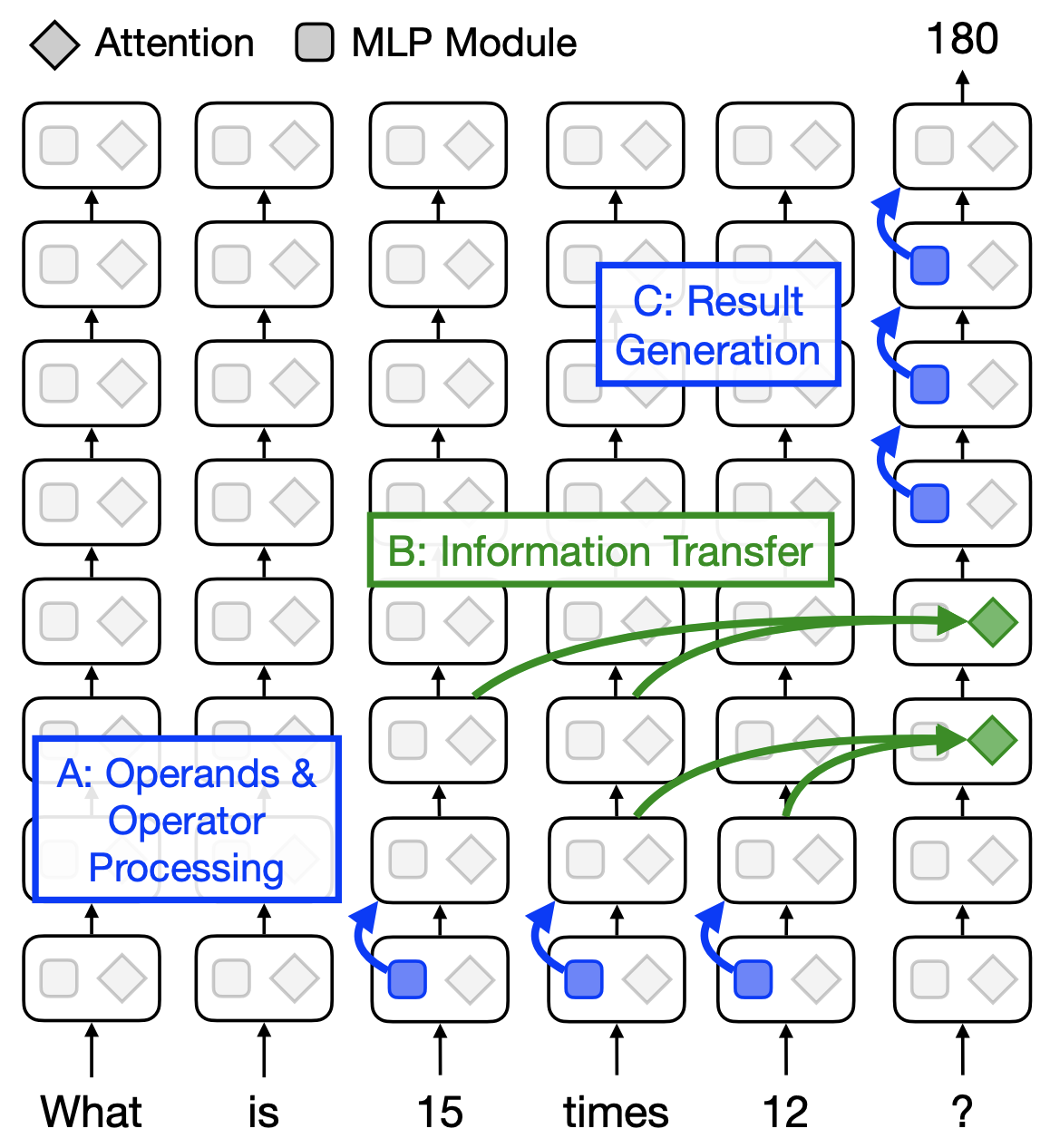

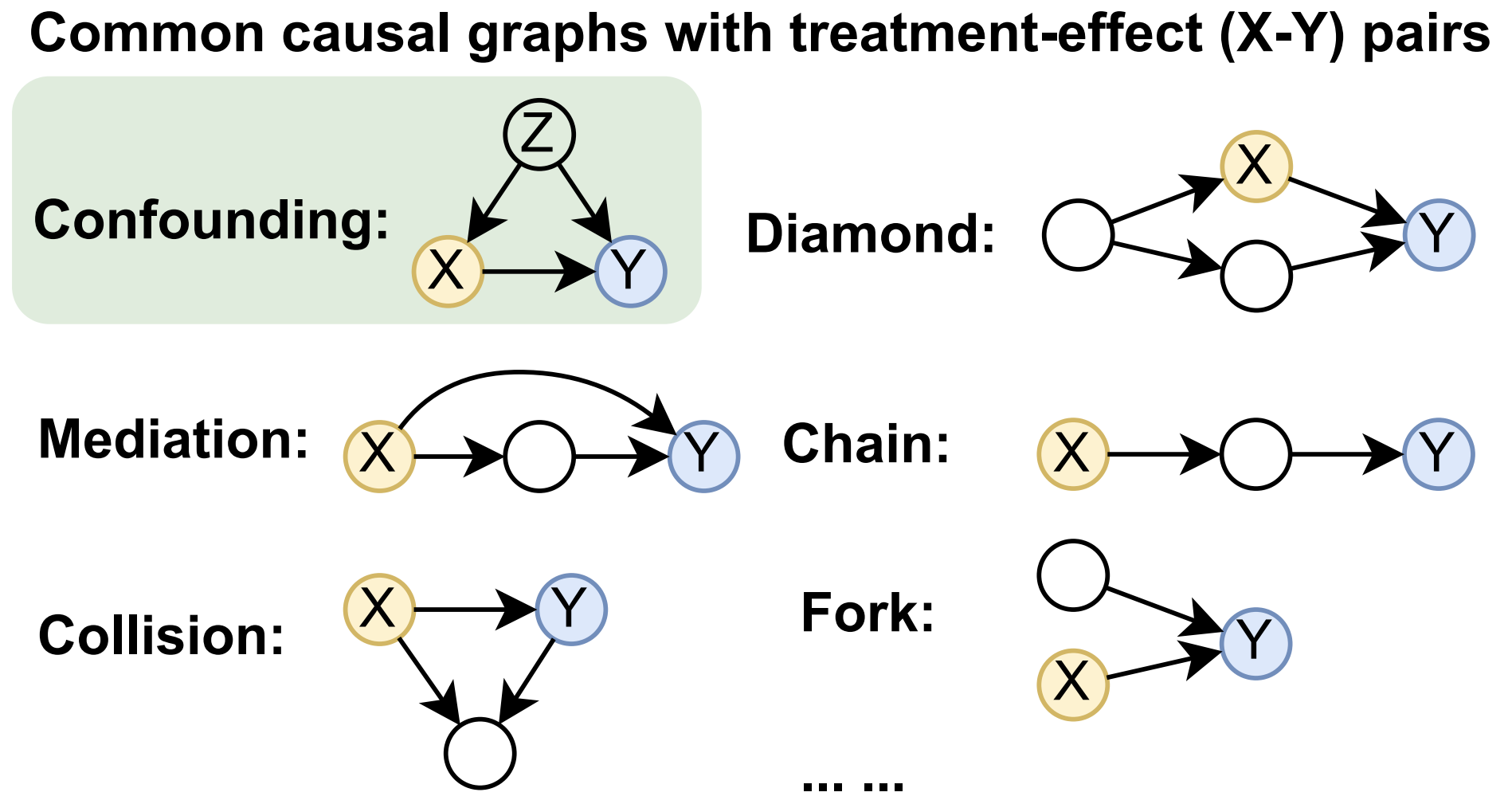

We explore a mechanistic interpretation of LMs for arithmetic reasoning tasks using causal mediation analysis.

Alessandro Stolfo, Yonatan Belinkov and Mrinmaya Sachan

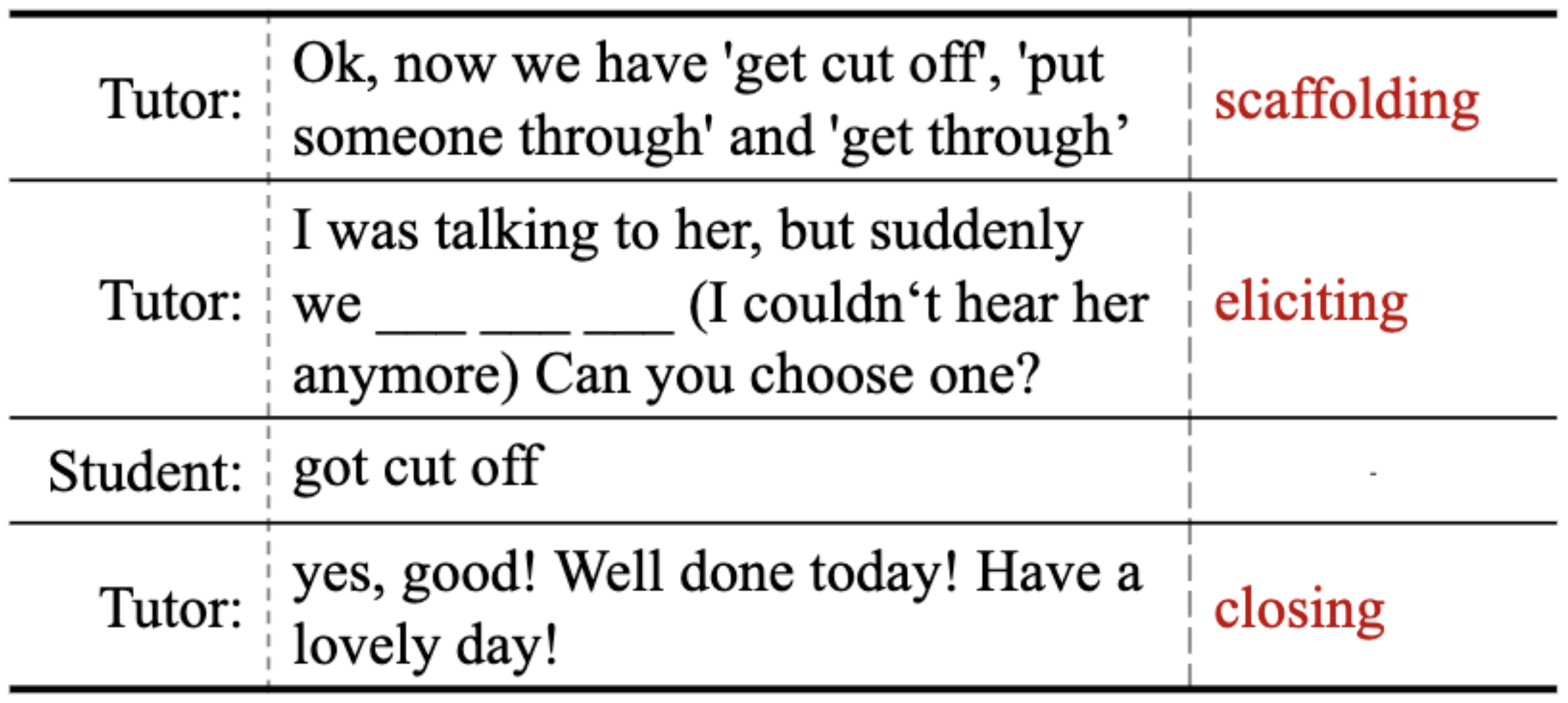

We propose an approach to semi-synthetically generate a pedagogically rich dialog dataset by pairing real teachers with a large language model scaffolded to represent common student errors.

Jakub Macina, Nico Daheim, Sankalan Pal Chowdhury, Tanmay Sinha, Manu Kapur, Iryna Gurevych and Mrinmaya Sachan

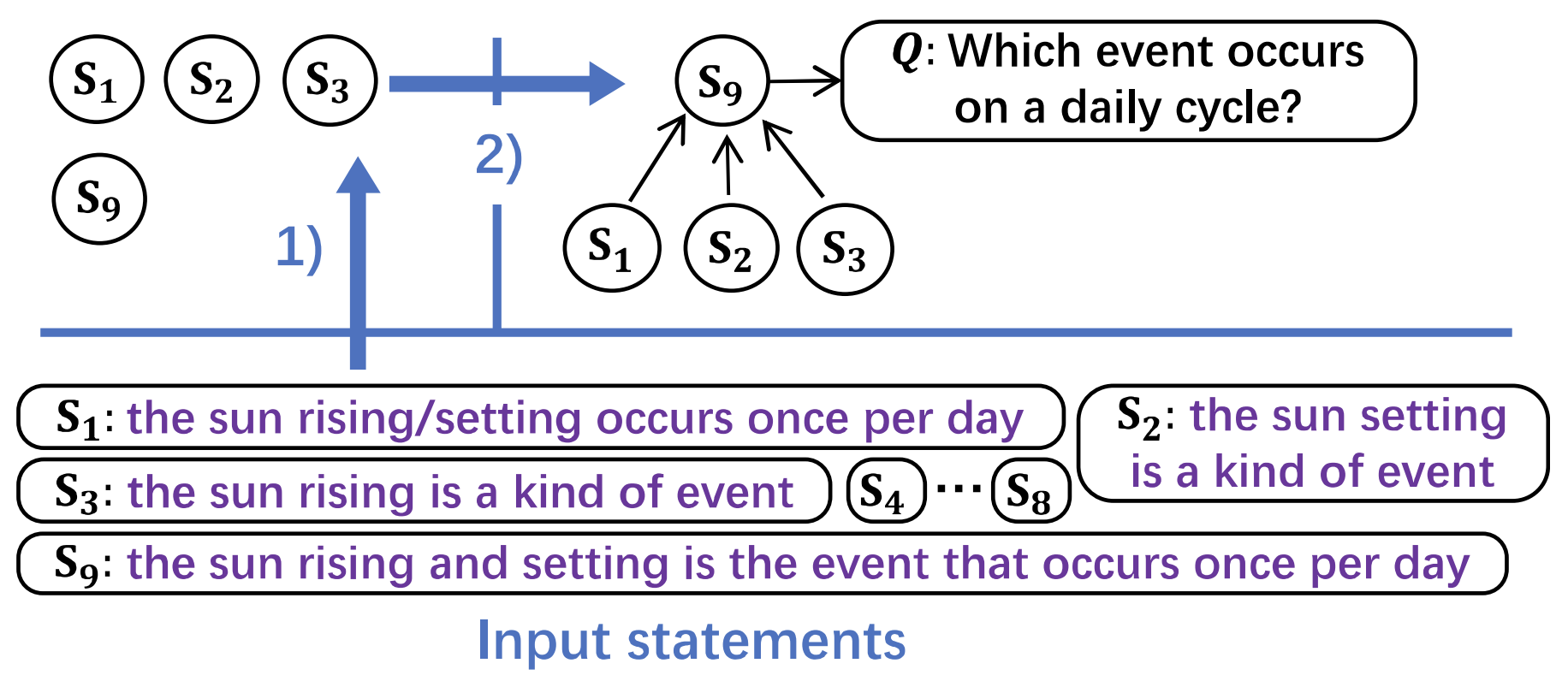

We explore a mechanistic interpretation of LMs for multi-step reasoning tasks.

Yifan Hou, Jiaoda Li, Yu Fei, Alessandro Stolfo, Wangchunshu Zhou, Guangtao Zeng, Antoine Bosselut and Mrinmaya Sachan

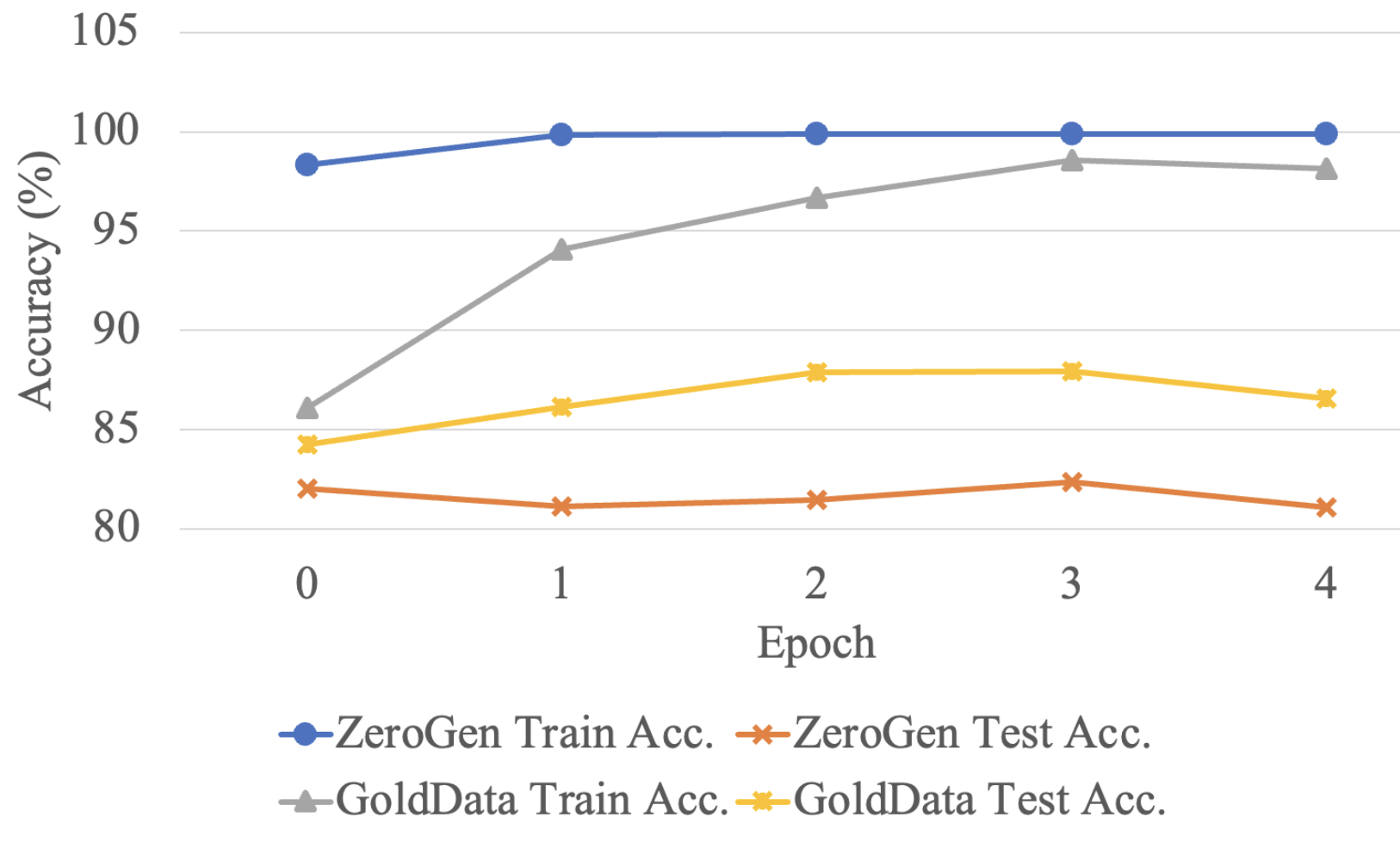

We propose a data synthesis approach that iteratively extrapolates errors made by a small model trained on the synthesized dataset on a real-world validation dataset using LLMs.

Ruida Wang, Wangchunshu Zhou and Mrinmaya Sachan

We study how topic models can be automatically evaluated using LLMs.

Dominik Stammbach, Vilém Zouhar, Alexander Hoyle, Mrinmaya Sachan and Elliott Ash

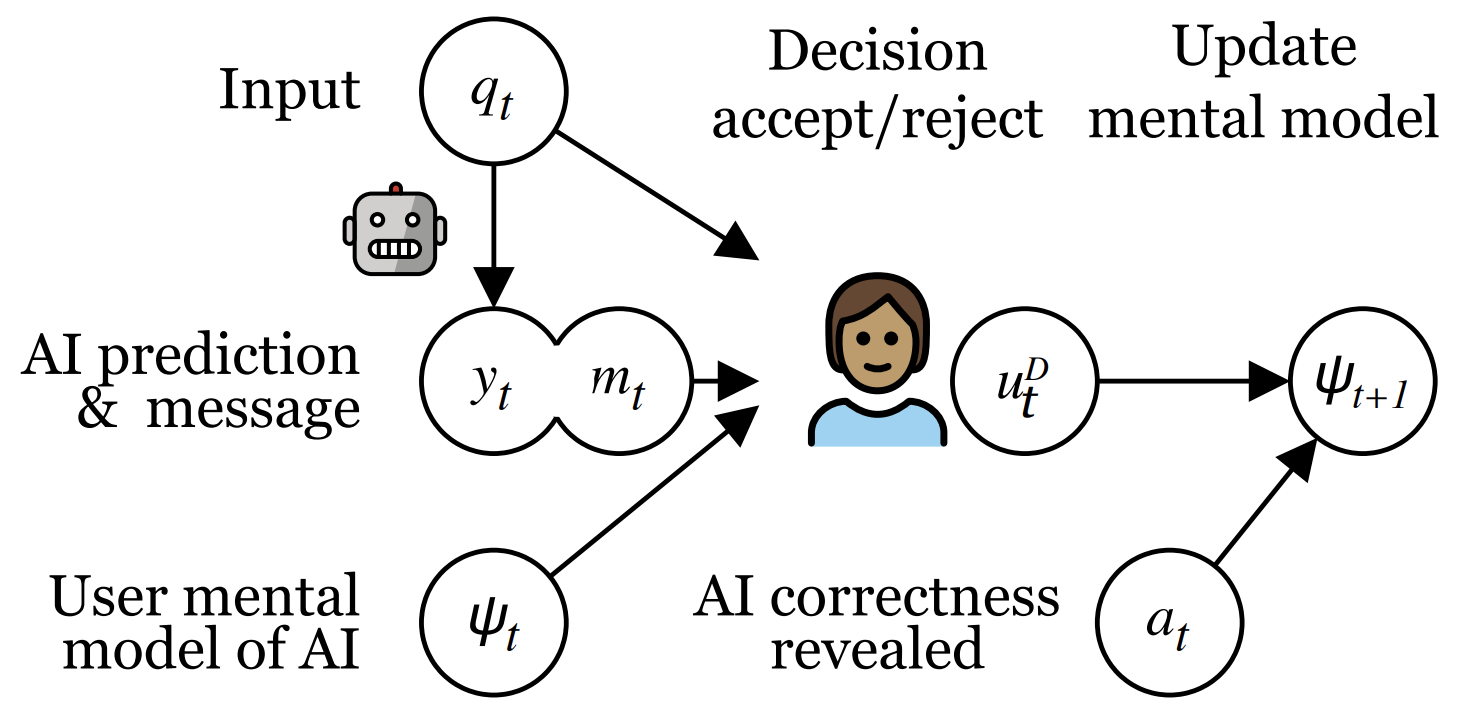

We study how user trust is developed and how it can be regained after potential trust-eroding events in Human-AI collaboration.

Shehzaad Dhuliawala, Vilém Zouhar, Mennatallah El-Assady and Mrinmaya Sachan

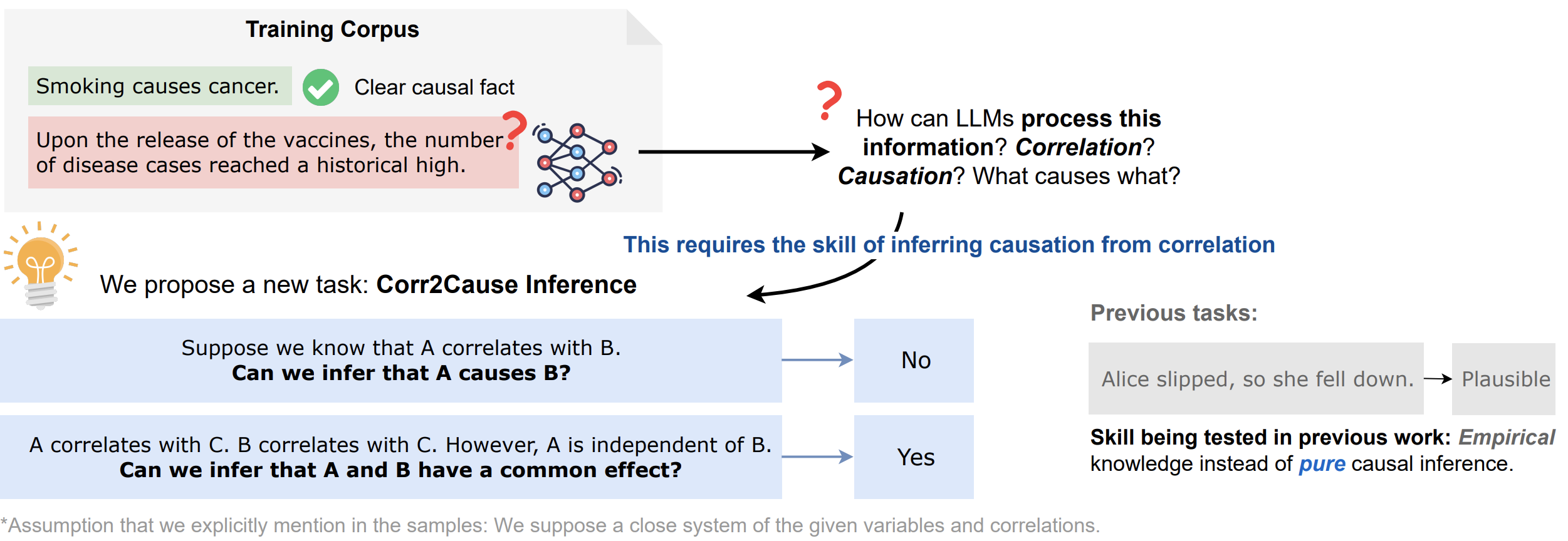

We propose a benchmark dataset to test the pure causal inference skills of large language models (LLMs) and identify a key shortcoming of LLMs in terms of their causal inference skills.

Zhijing Jin, Jiarui Liu, Zhiheng Lyu, Spencer Poff, Mrinmaya Sachan, Rada Mihalcea, Mona Diab and Bernhard Schölkopf

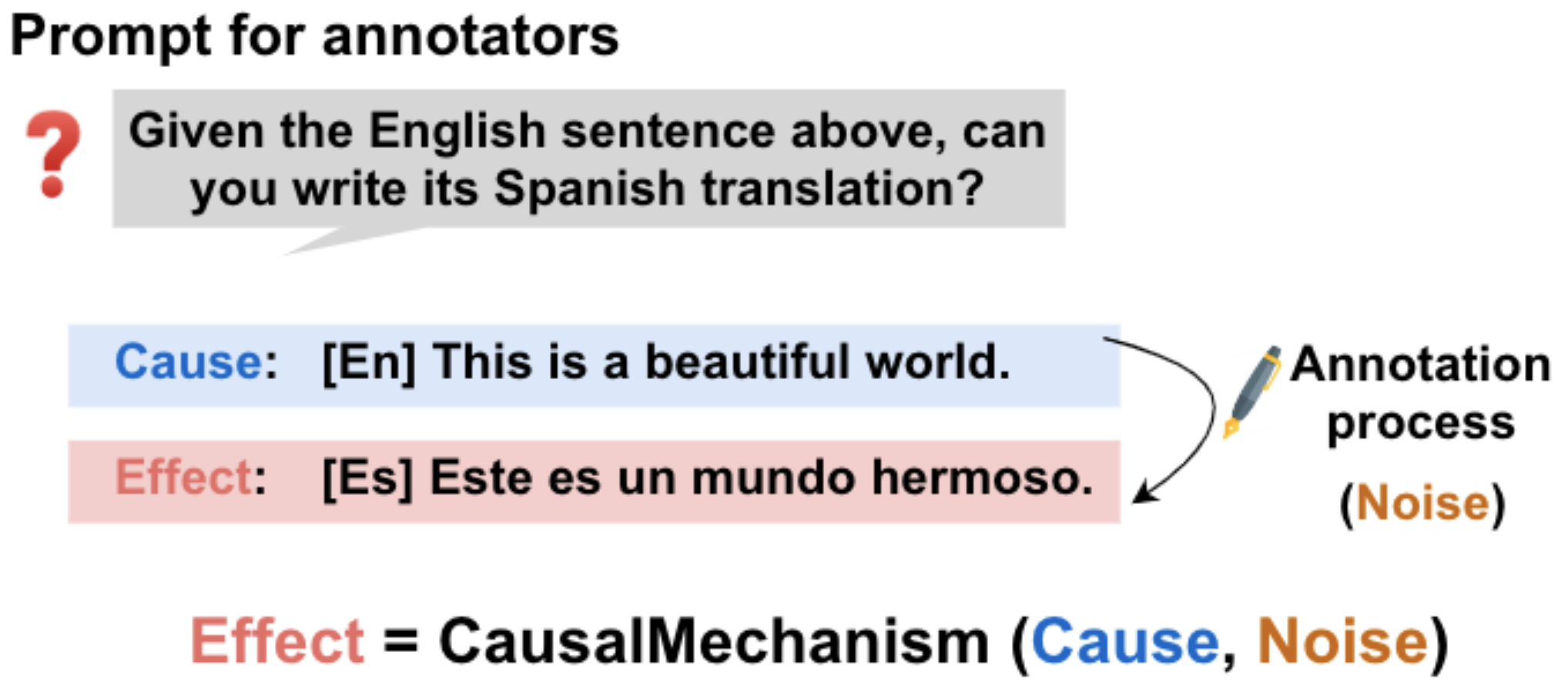

We propose a new NLP task, causal inference in natural language, inspired by the “causal inference engine” postulated by Judea Pearl et al.

Zhijing Jin, Yuen Chen, Felix Leeb, Luigi Gresele, Ojasv Kamal, Zhiheng Lyu, Kevin Blin, Fernando Gonzalez Adauto, Max Kleiman-Weiner, Mrinmaya Sachan and Bernhard Schölkopf

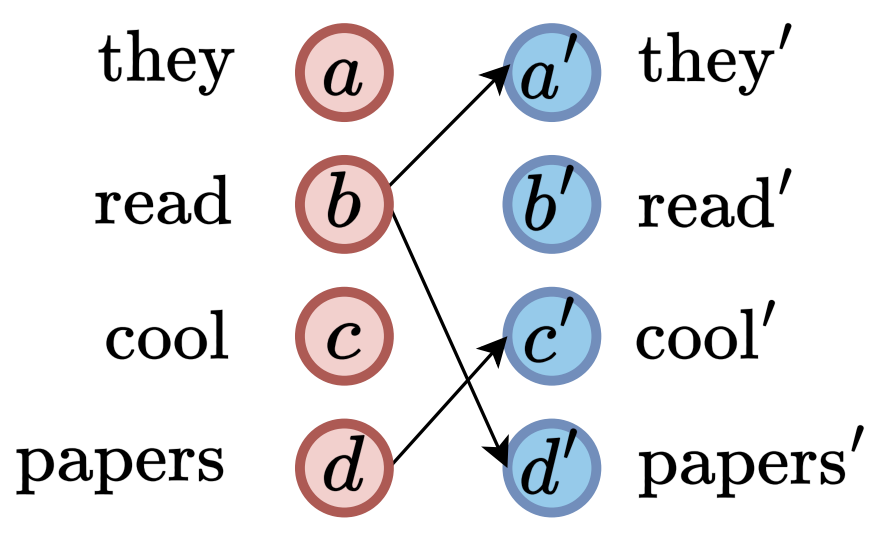

We reduce the complexity of structure prediction tasks to linear by casting the relation between tokens as a partial order over the string.

Tianyu Liu, Afra Amini, Mrinmaya Sachan and Ryan Cotterell

EMNLP 2023 (Outstanding Paper Award)

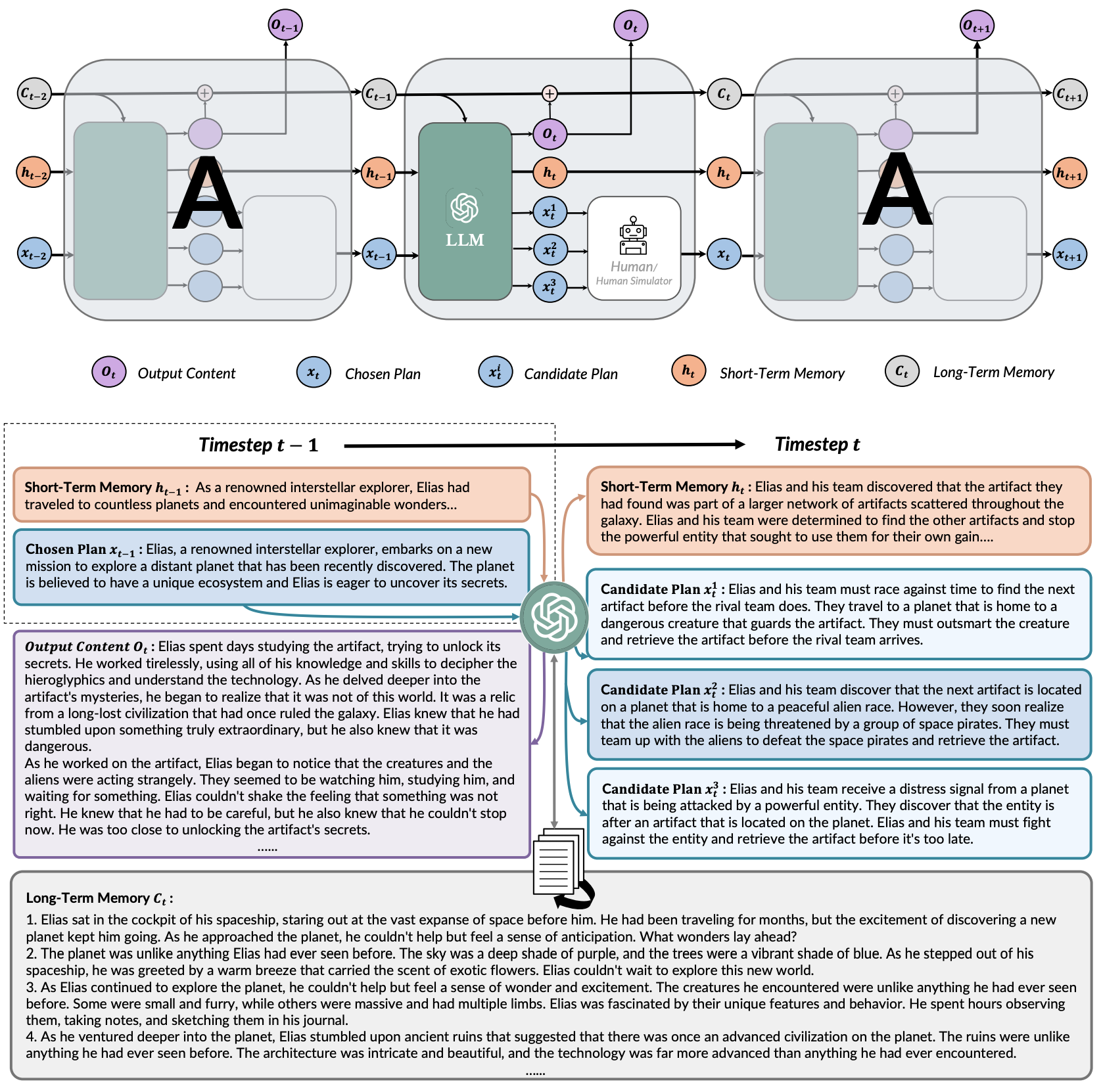

We present RecurrentGPT, a language-based simulacrum of the recurrence mechanism in RNNs, which enables us to use ChatGPT to generate long texts.

Wangchunshu Zhou, Yuchen Eleanor Jiang, Peng Cui, Tiannan Wang, Zhenxin Xiao, Yifan Hou, Ryan Cotterell and Mrinmaya Sachan

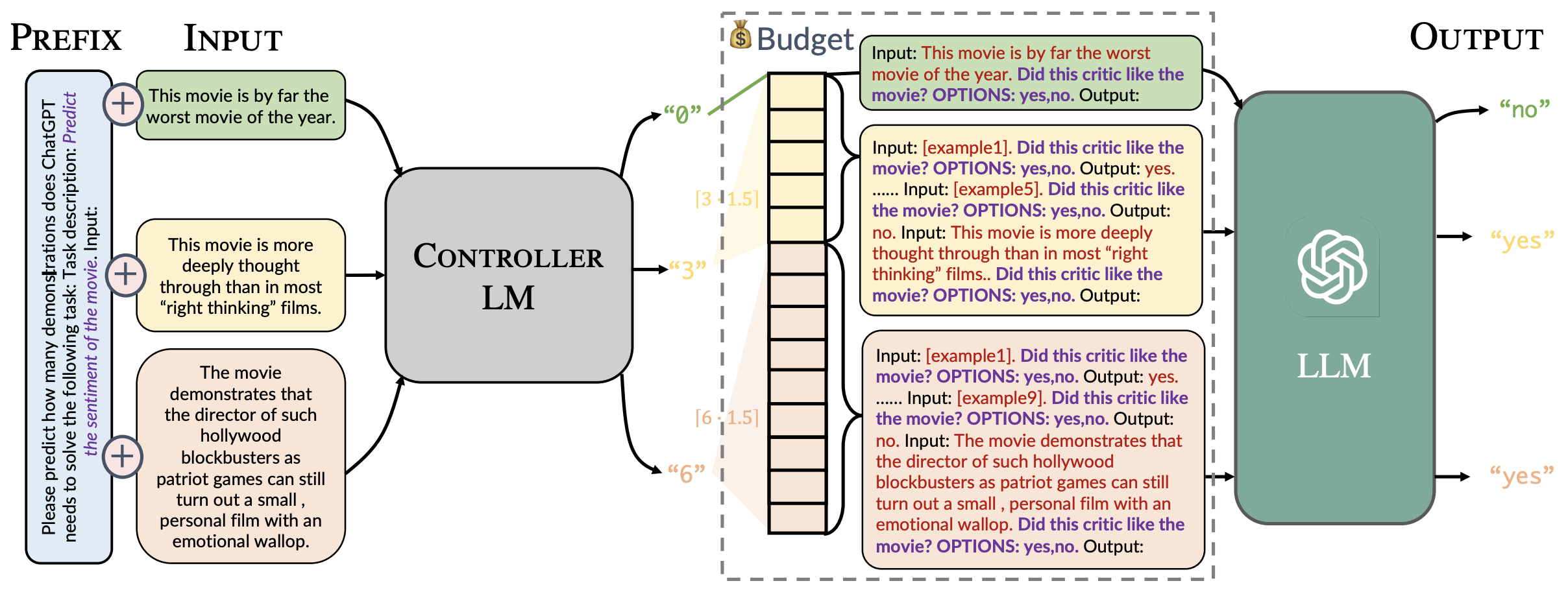

We propose DYNAICL, a recipe for efficient prompting with black-box generalist models that dynamically allocate in-context examples according to the input complexity and the computational budget.

Wangchunshu Zhou, Yuchen Eleanor Jiang, Ryan Cotterell and Mrinmaya Sachan

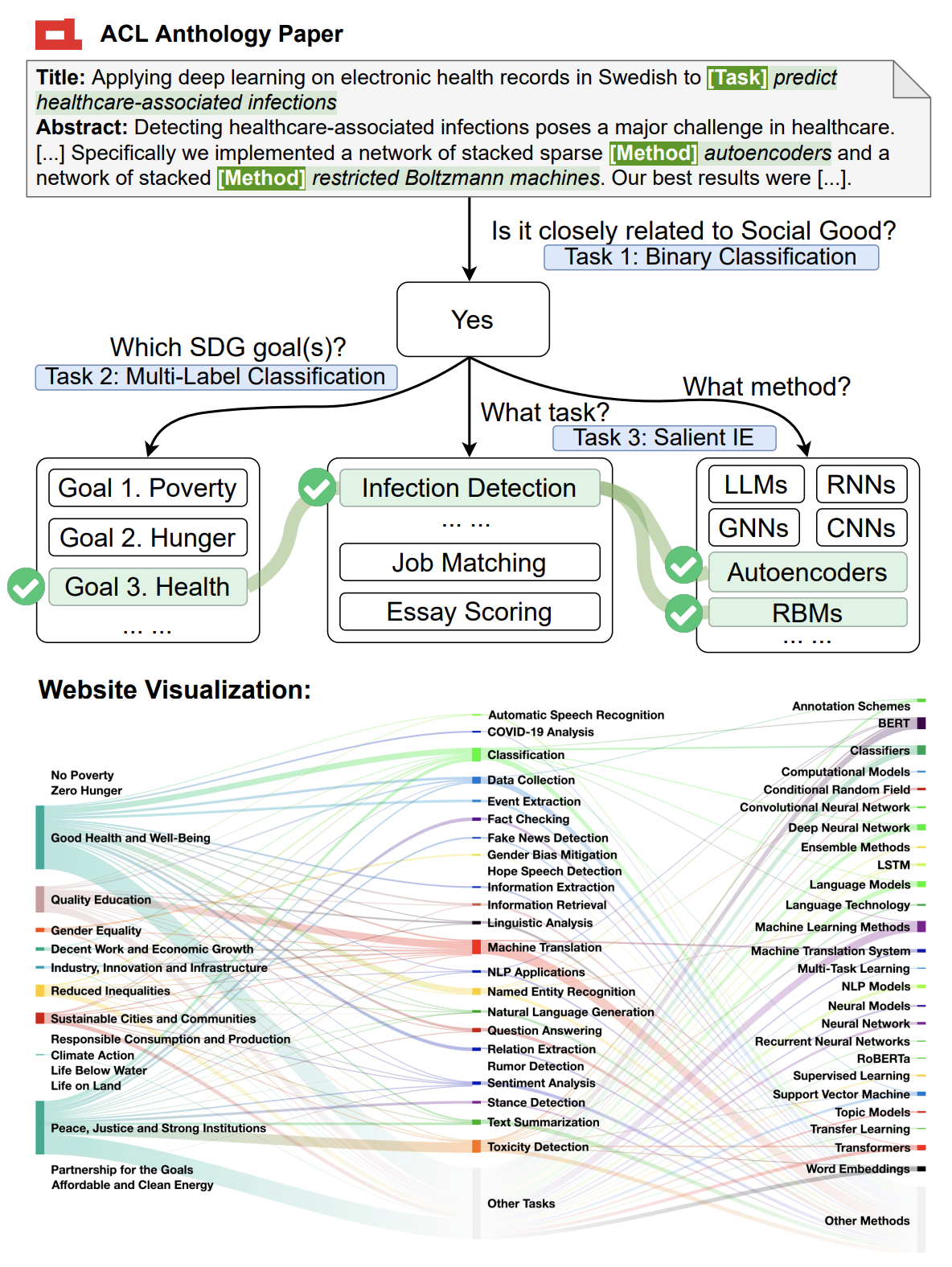

We introduce NLP4SGPAPERS, a scientific dataset with three associated tasks that can help identify NLP for social good (NLP4SG) papers and characterize the NLP4SG landscape.

Fernando Gonzalez, Zhijing Jin, Bernhard Schölkopf, Tom Hope, Mrinmaya Sachan and Rada Mihalcea

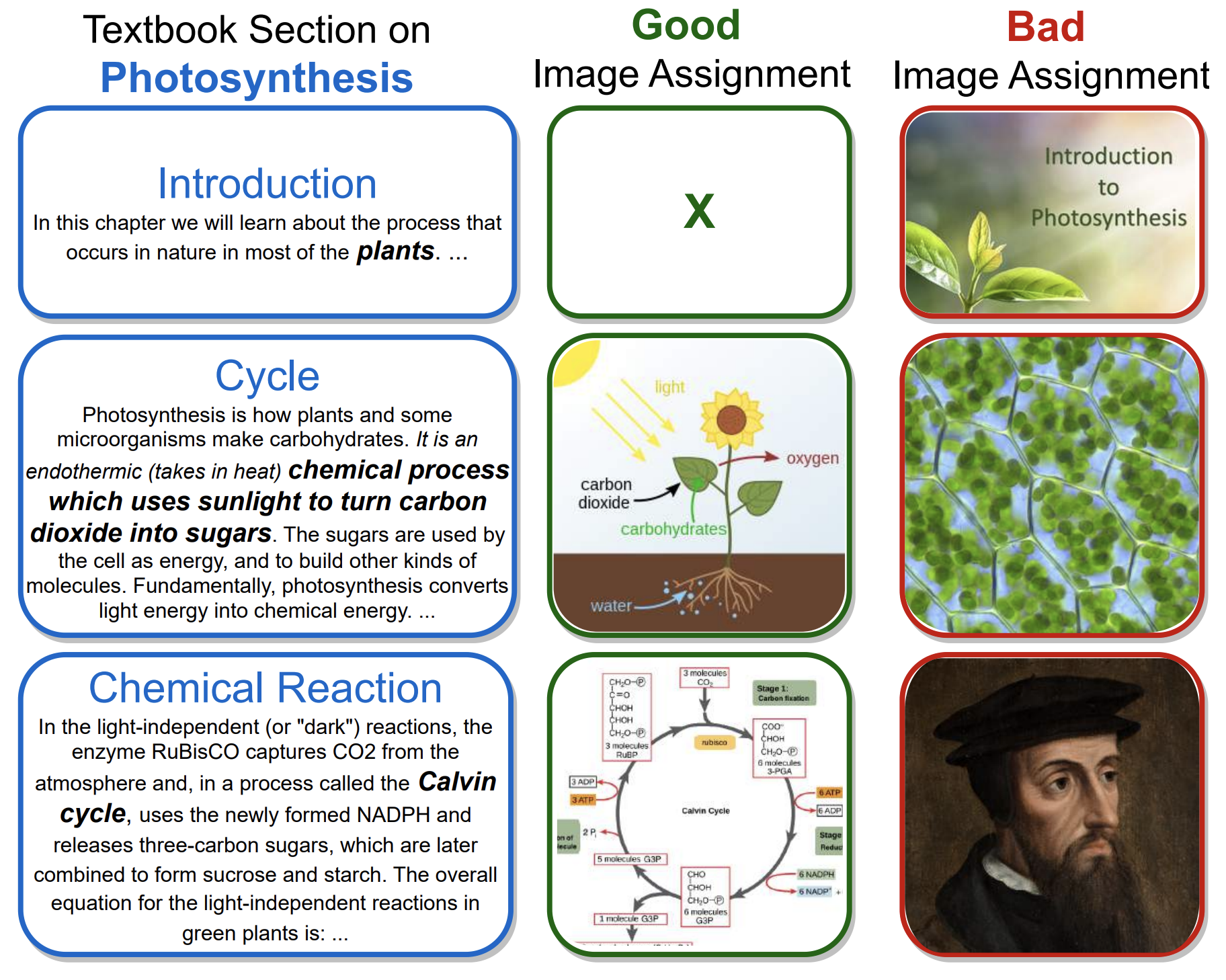

We propose a vision-language model based approach to automatically enhance textbooks with images from the web.

Janvijay Singh, Vilém Zouhar and Mrinmaya Sachan

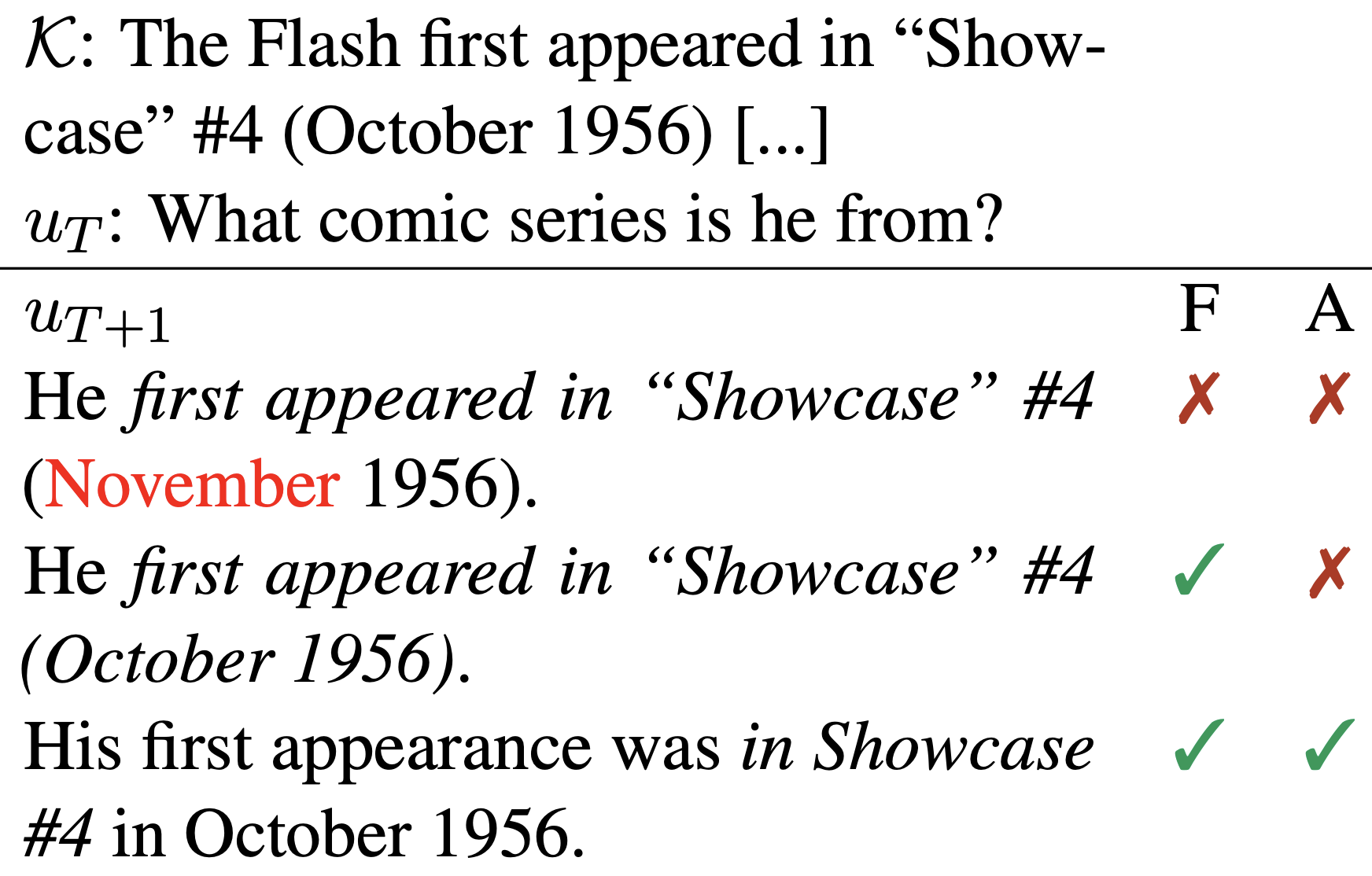

We propose a new approach for mitigating hallucination, or the generation of unverifiable information, in dialog models.

Nico Daheim, Nouha Dziri, Mrinmaya Sachan, Iryna Gurevych and Edoardo M Ponti

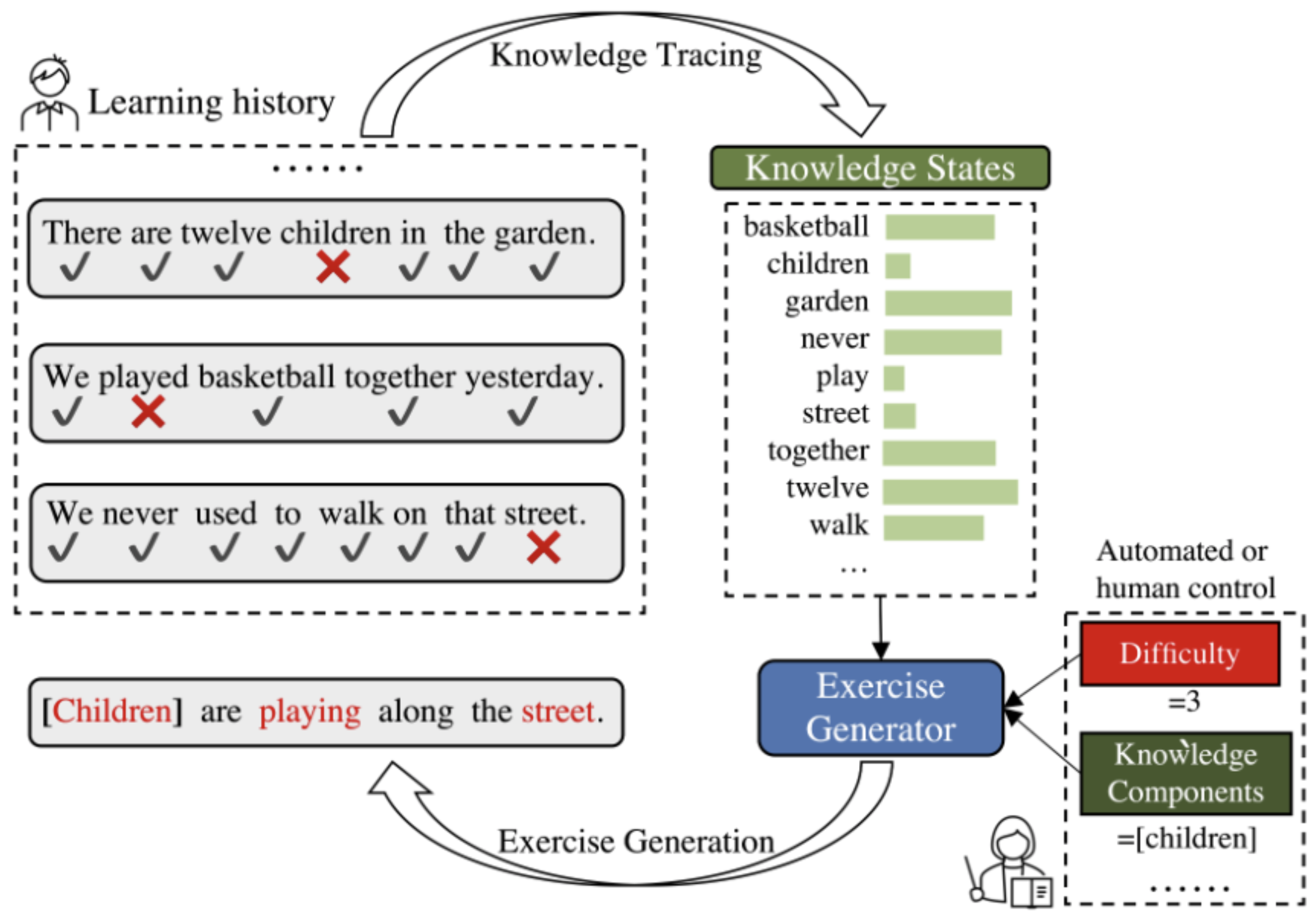

We combine a knowledge tracing model that estimates each student’s evolving knowledge states from their learning history and a controlled text generation model that generates exercises based on the student’s current estimated knowledge state and instructor requirements of desired properties for the next exercise.

Peng Cui and Mrinmaya Sachan

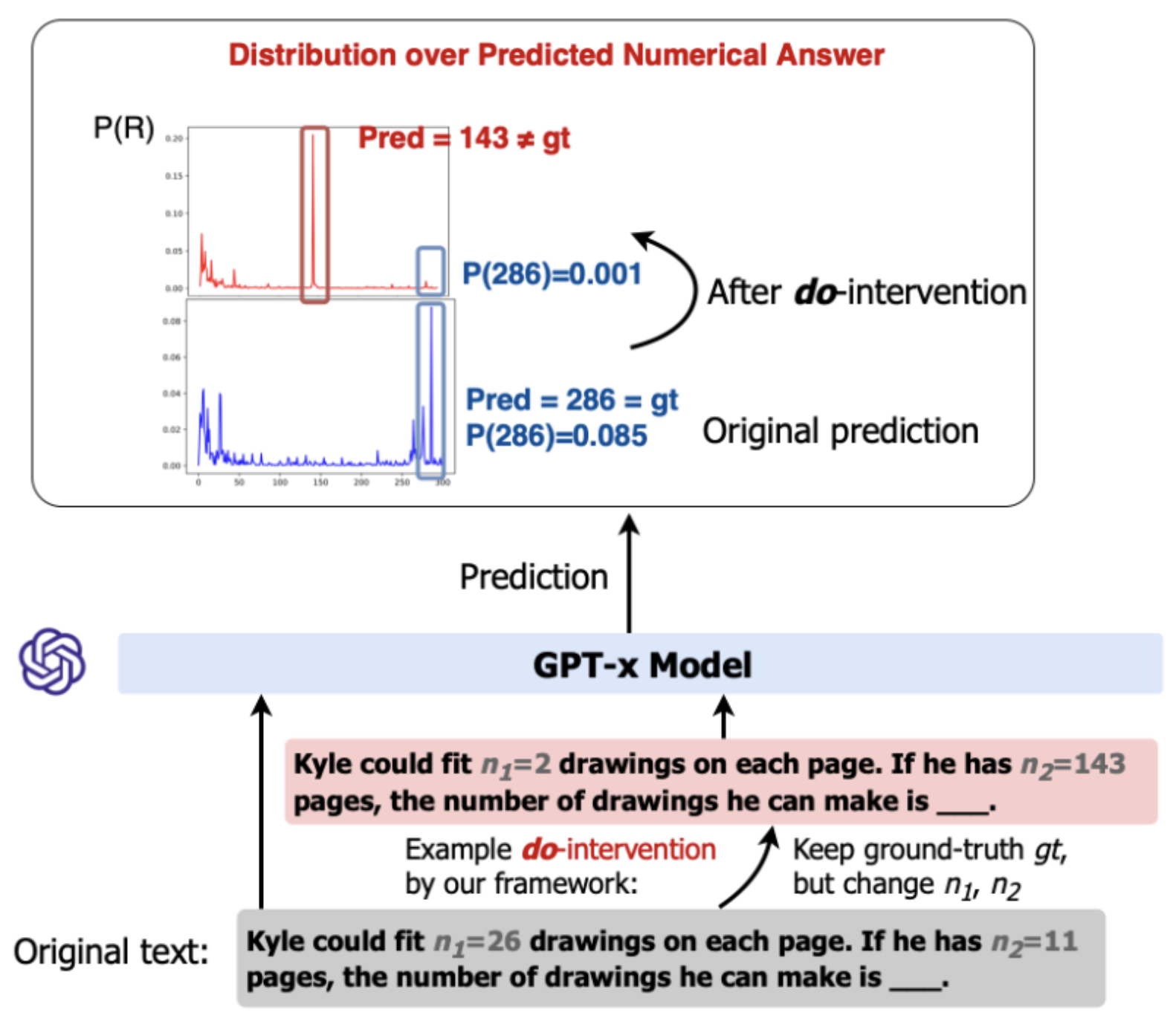

We propose a causal framework to quantify the robustness of the reasoning abilities of language models.

Alessandro Stolfo, Zhijing Jin, Kumar Shridhar, Bernhard Schölkopf and Mrinmaya Sachan

ACL 2023 (also at MATHAI Workshop at NeurIPS 22)

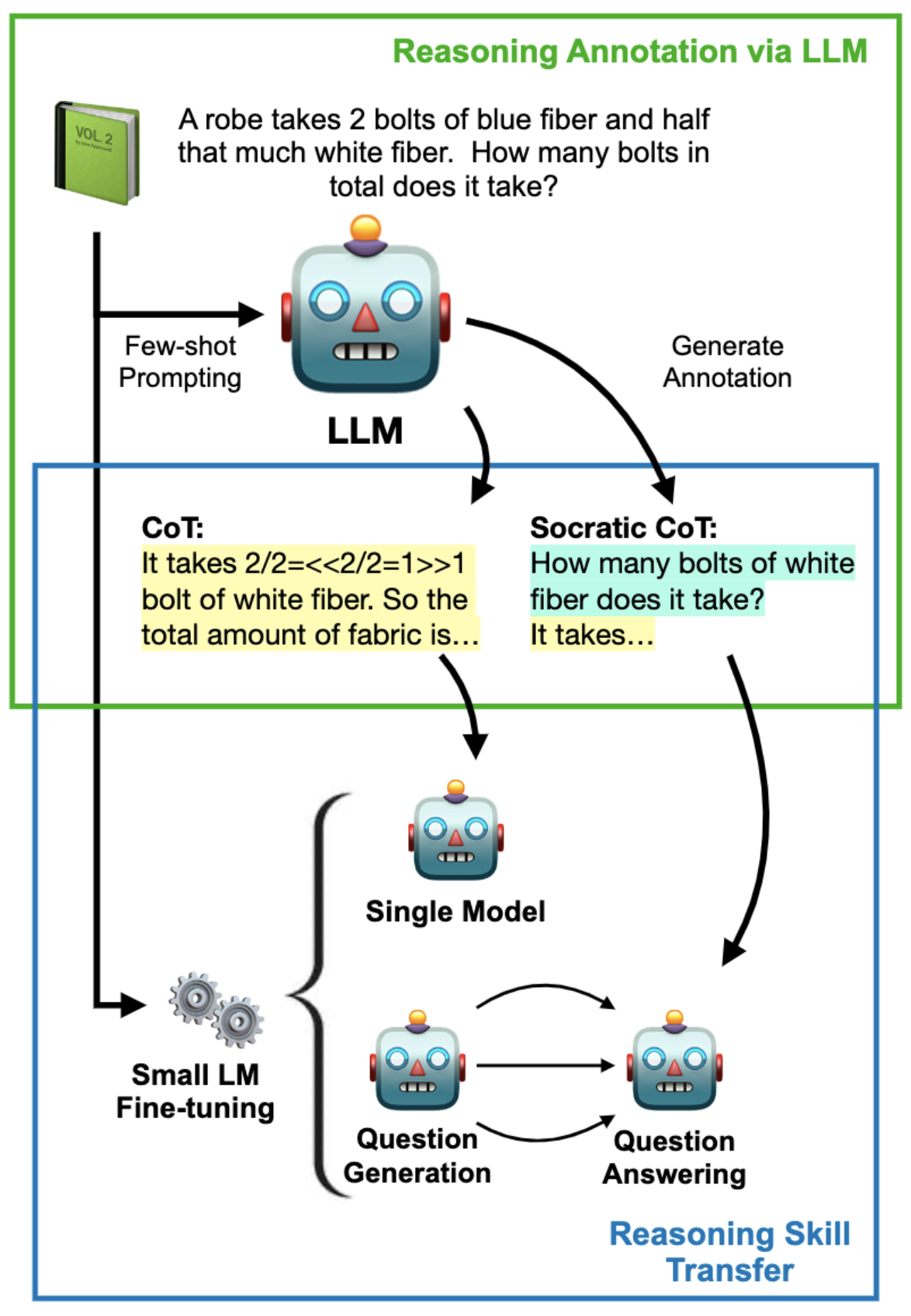

We propose a knowledge distillation framework that leverages step-by-step reasoning capabilities of larger models and distills these capabilities into smaller models.

Kumar Shridhar, Alessandro Stolfo and Mrinmaya Sachan

As a step towards developing interpretable language model reasoners, we develop a semantic formalism representing the world depicted in math story problems.

Andreas Opedal, Niklas Stoehr, Abulhair Saparov and Mrinmaya Sachan

We formalize BPE as a combinatorial optimization problem and use submodularity to prove that the iterative greedy approach to BPE is approximately optimal.

Vilém Zouhar, Tim Vieira, Clara Meister, Juan Luis Gastaldi, Mrinmaya Sachan and Ryan Cotterell

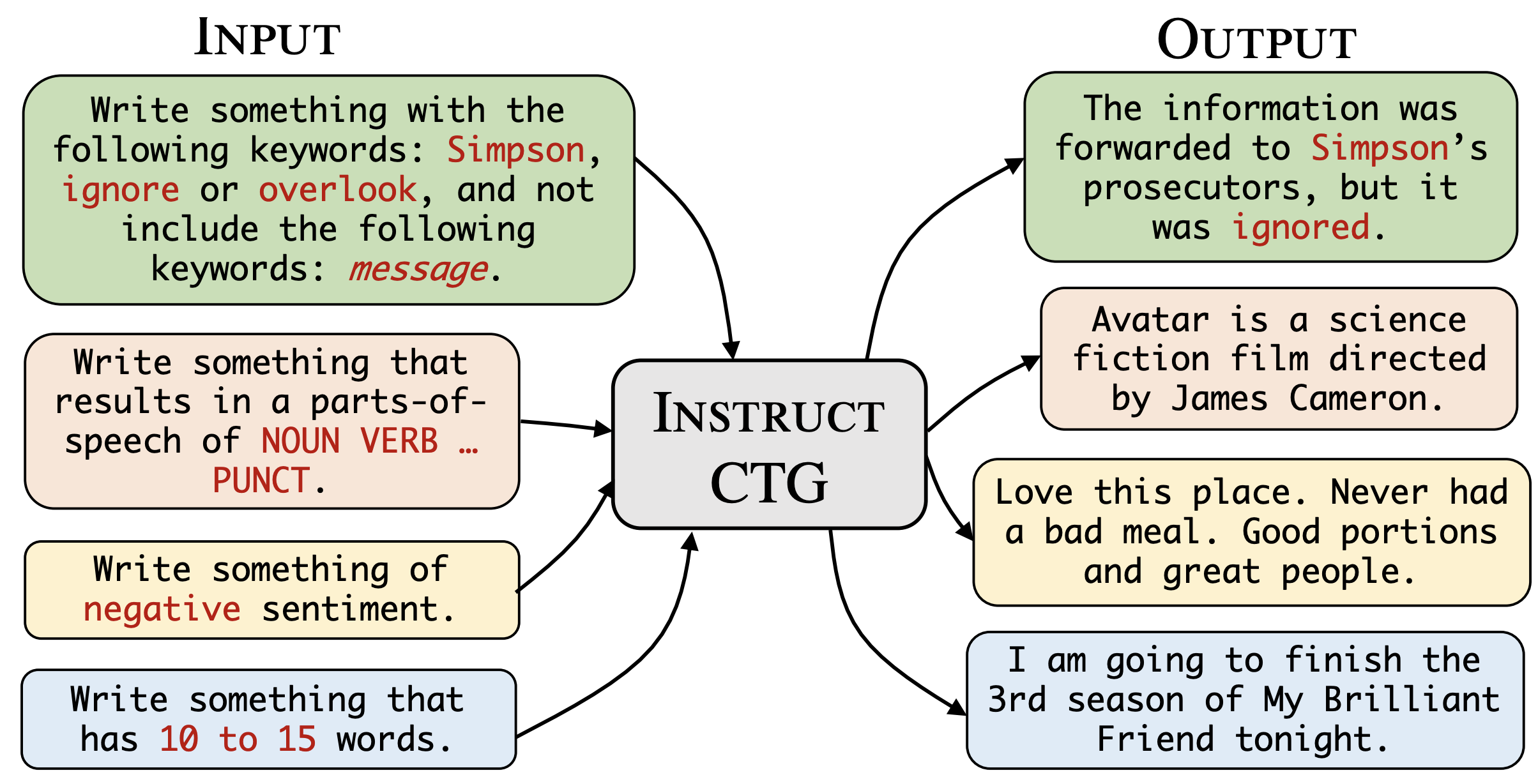

We present InstructCTG, a controlled text generation framework that incorporates different constraints by conditioning the text generation process on natural language descriptions and demonstrations of the constraints.

Wangchunshu Zhou, Yuchen Eleanor Jiang, Ethan Wilcox, Ryan Cotterell and Mrinmaya Sachan

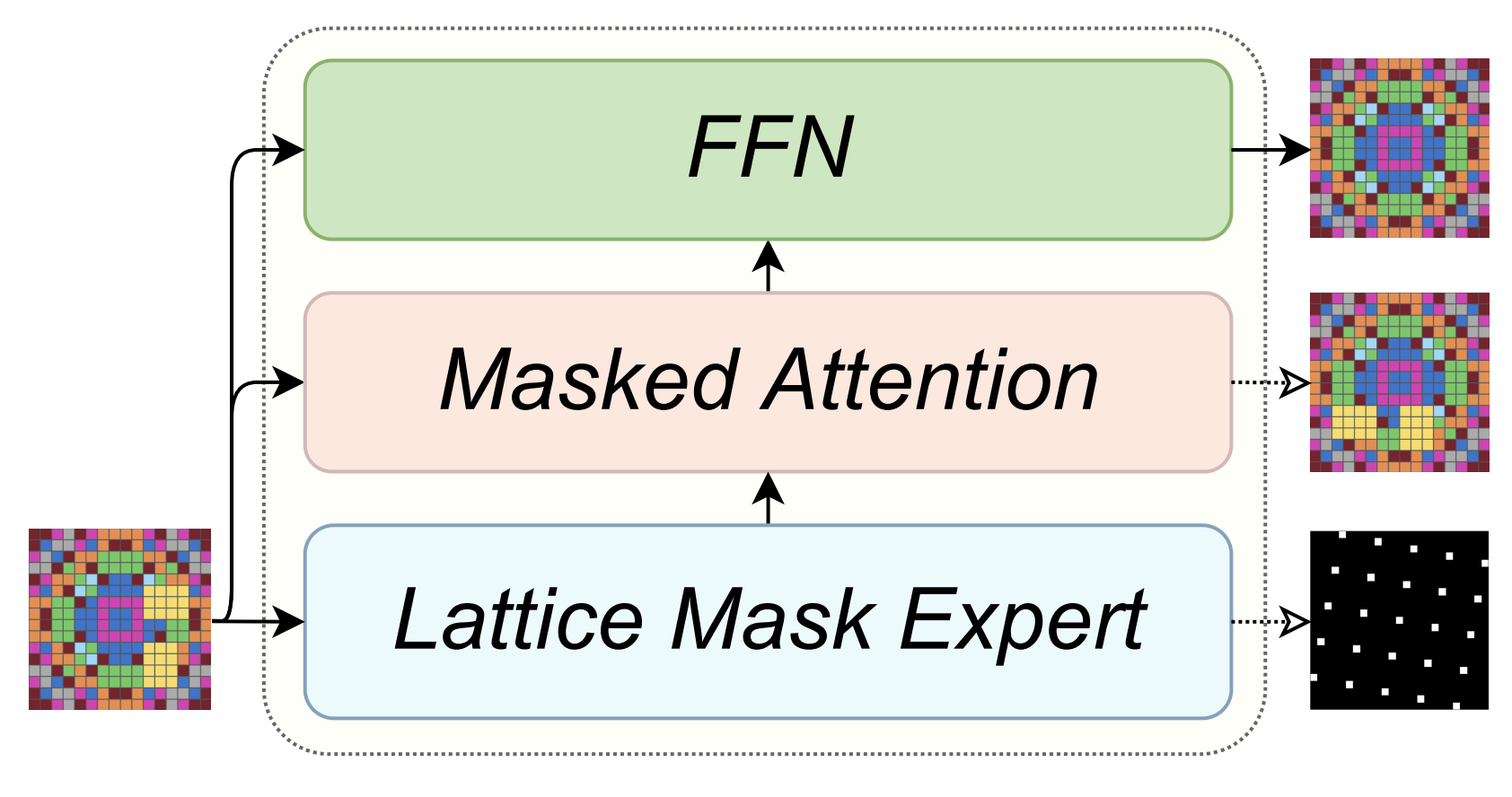

We introduce LatFormer, a model that incorporates lattice geometry and topology priors in attention masks for abstract reasoning problems.

Mattia Atzeni, Mrinmaya Sachan and Andreas Loukas

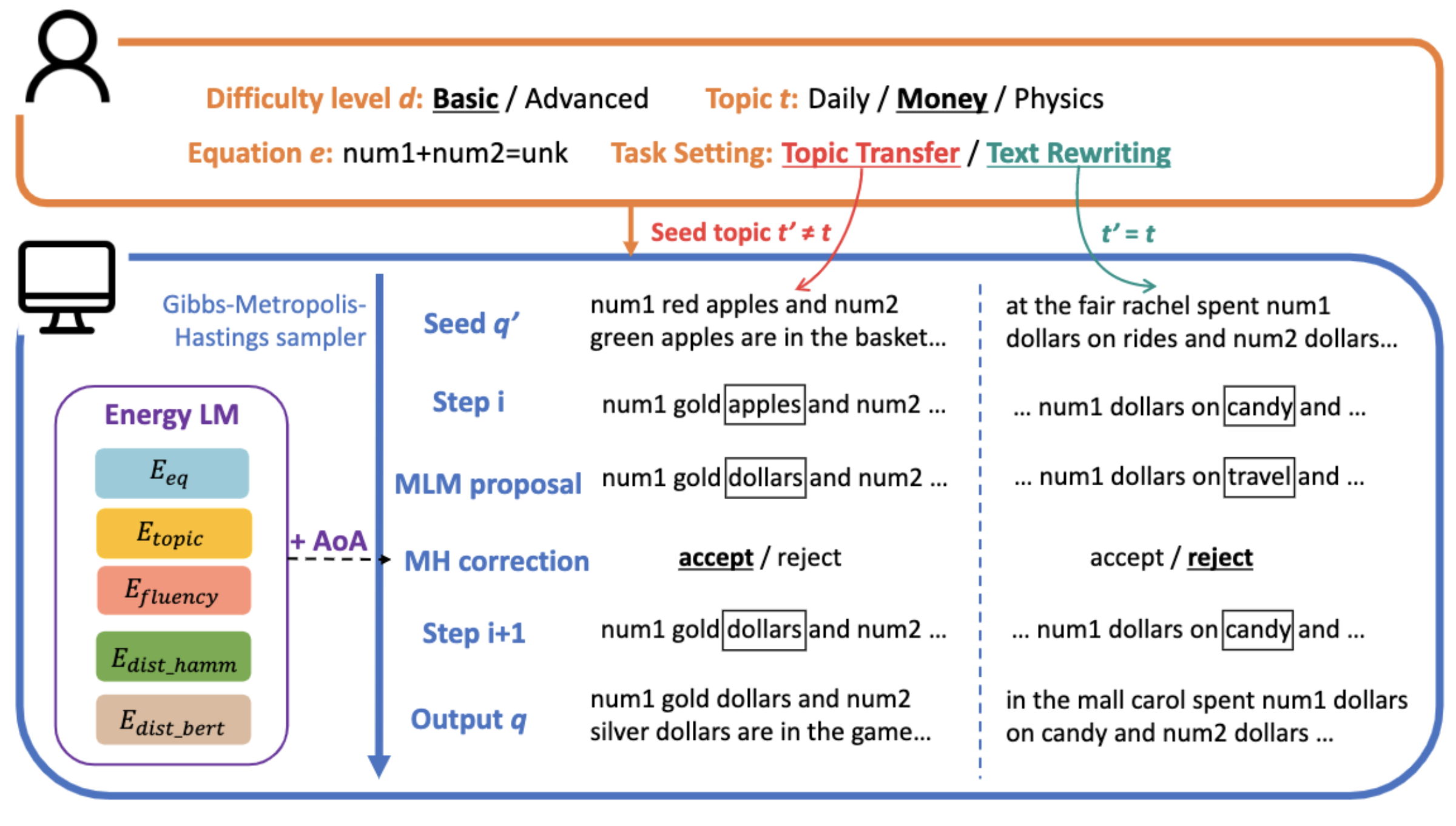

We automatically generate math word problems of various difficulties that meet the needs of teachers in teaching and testing students in various educational stages.

Ying Jiao, Kumar Shridhar, Peng Cui, Wangchunshu Zhou and Mrinmaya Sachan

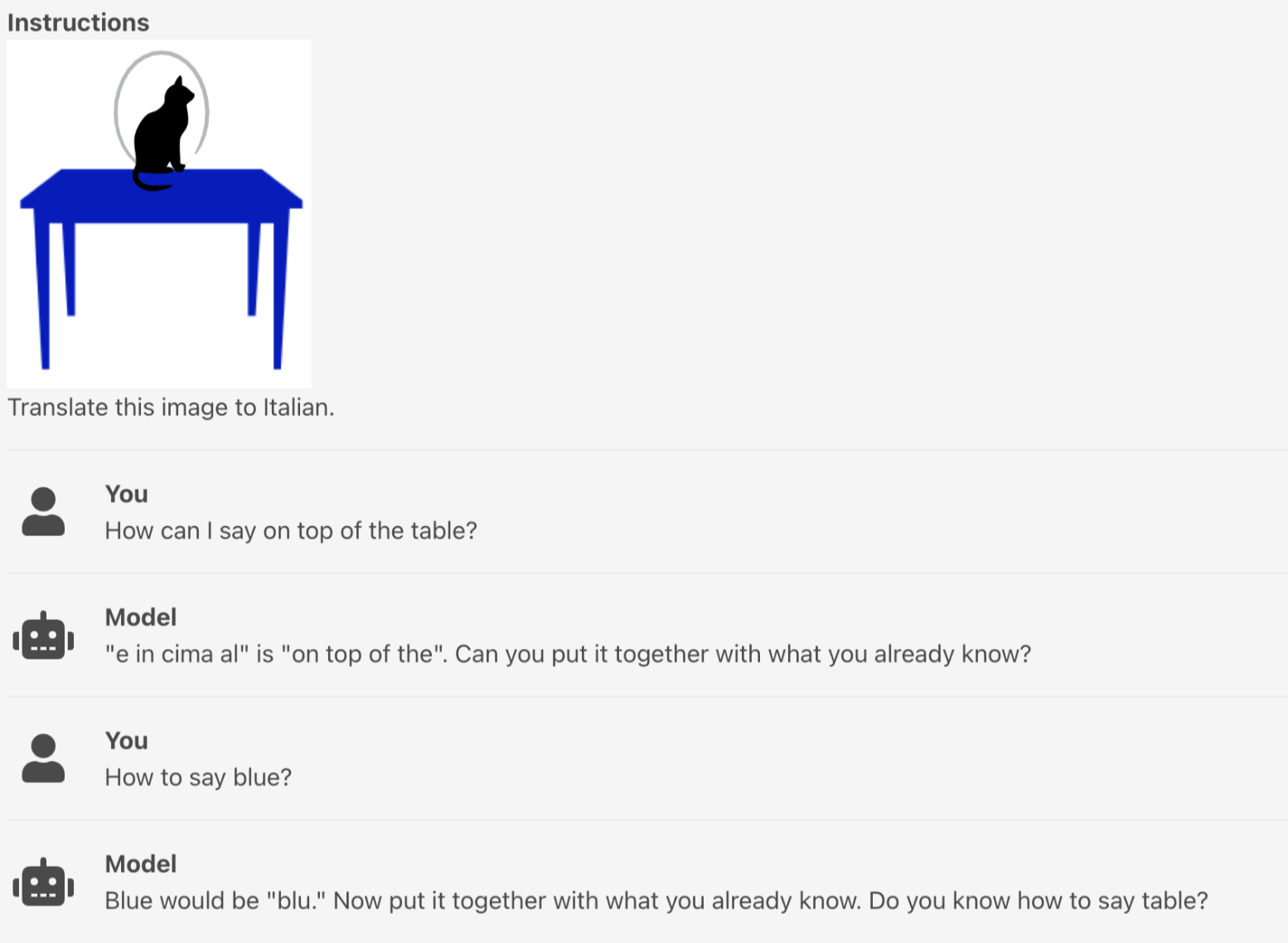

We analyze the potential of neural models on dialog tutoring datasets for language learning using a suite of automatic and human evaluations.

Jakub Macina, Nico Daheim, Lingzhi Wang, Tanmay Sinha, Manu Kapur, Iryna Gurevych and Mrinmaya Sachan

We propose a self-distillation approach to jointly predict teaching strategies and generate tutor responses accordingly for dialog tutoring.

Lingzhi Wang, Mrinmaya Sachan, Xingshan Zeng and Kam-Fai Wong

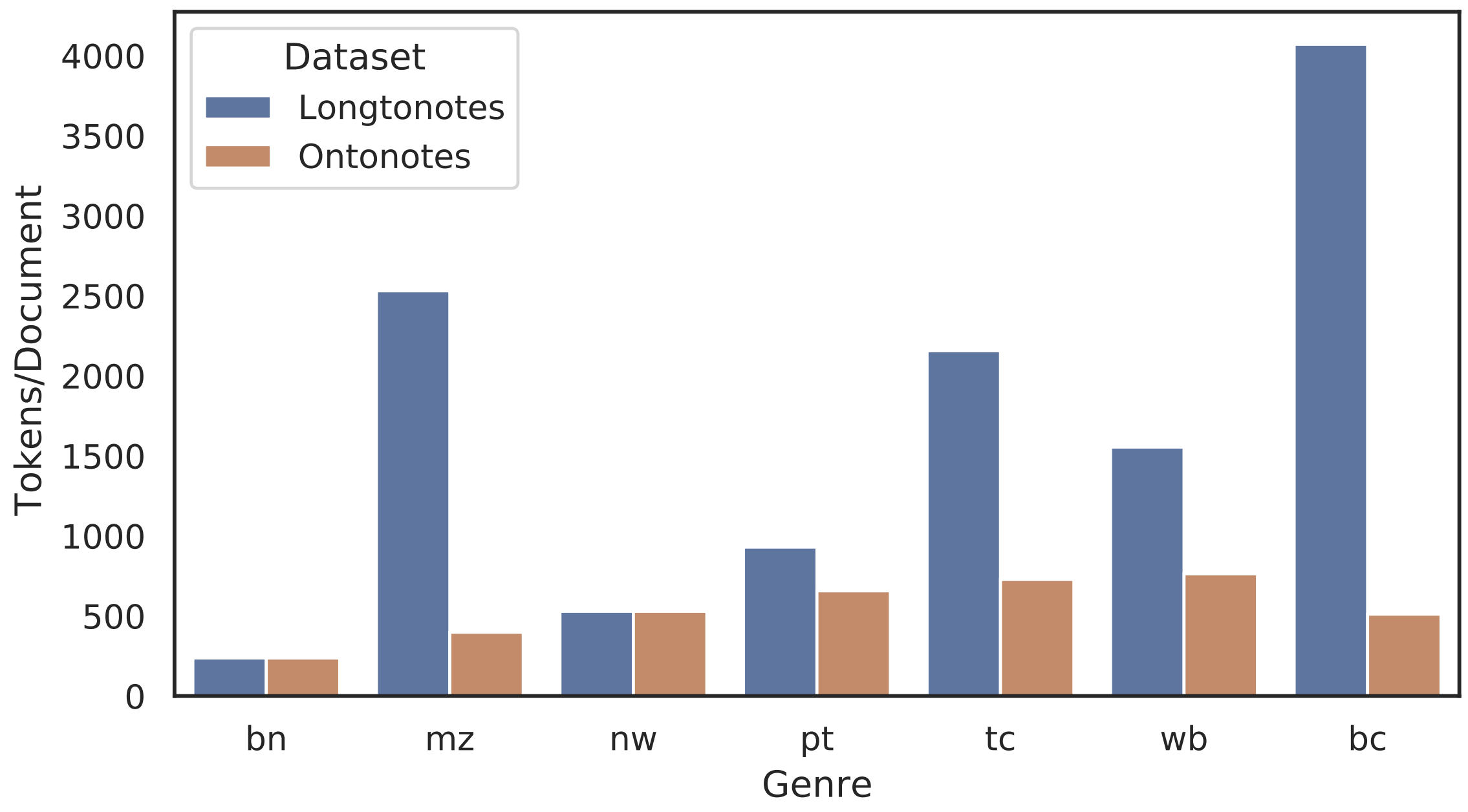

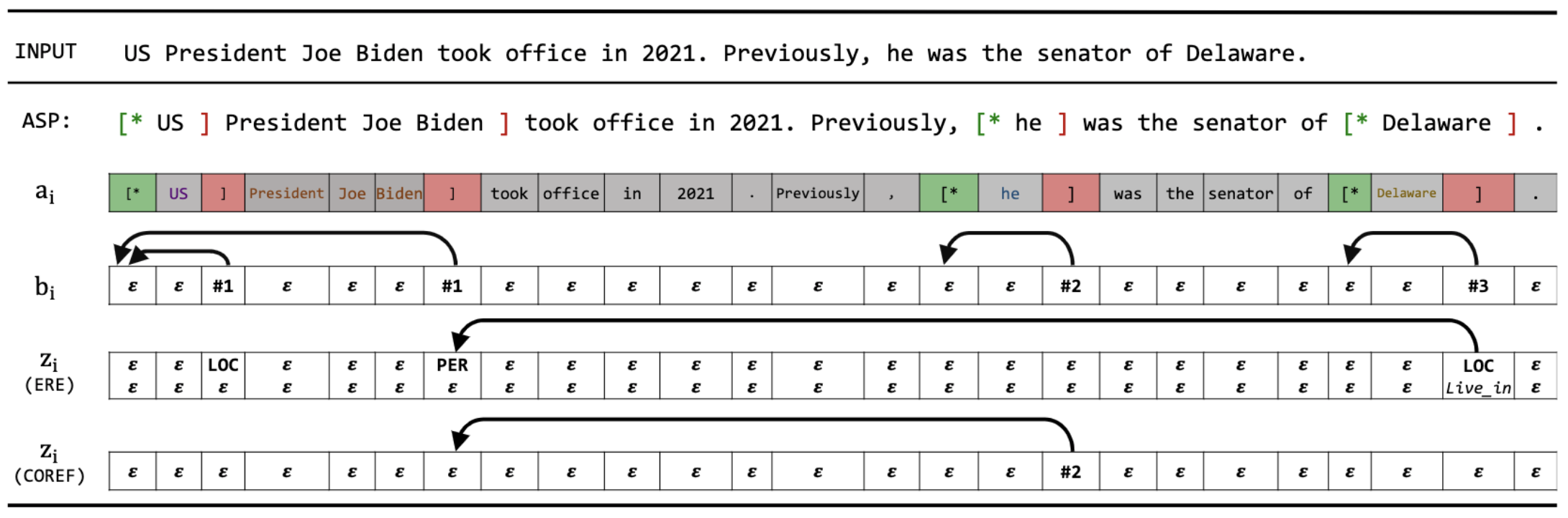

We reconstruct a long-document coreference corpus out of OntoNotes, arriving at a more challenging coreference task and dataset.

Kumar Shridhar, Nicholas Monath, Raghuveer Thirukovalluru, Alessandro Stolfo, Manzil Zaheer, Andrew McCallum and Mrinmaya Sachan

We use reinforcement learning and language models to generate sequential subquestions for guiding (machines/humans) in math word problem-solving.

Kumar Shridhar, Jakub Macina, Mennatallah El-Assady, Tanmay Sinha, Manu Kapur and Mrinmaya Sachan

EMNLP 2022 (also at MATHAI Workshop at NeurIPS 22) Code

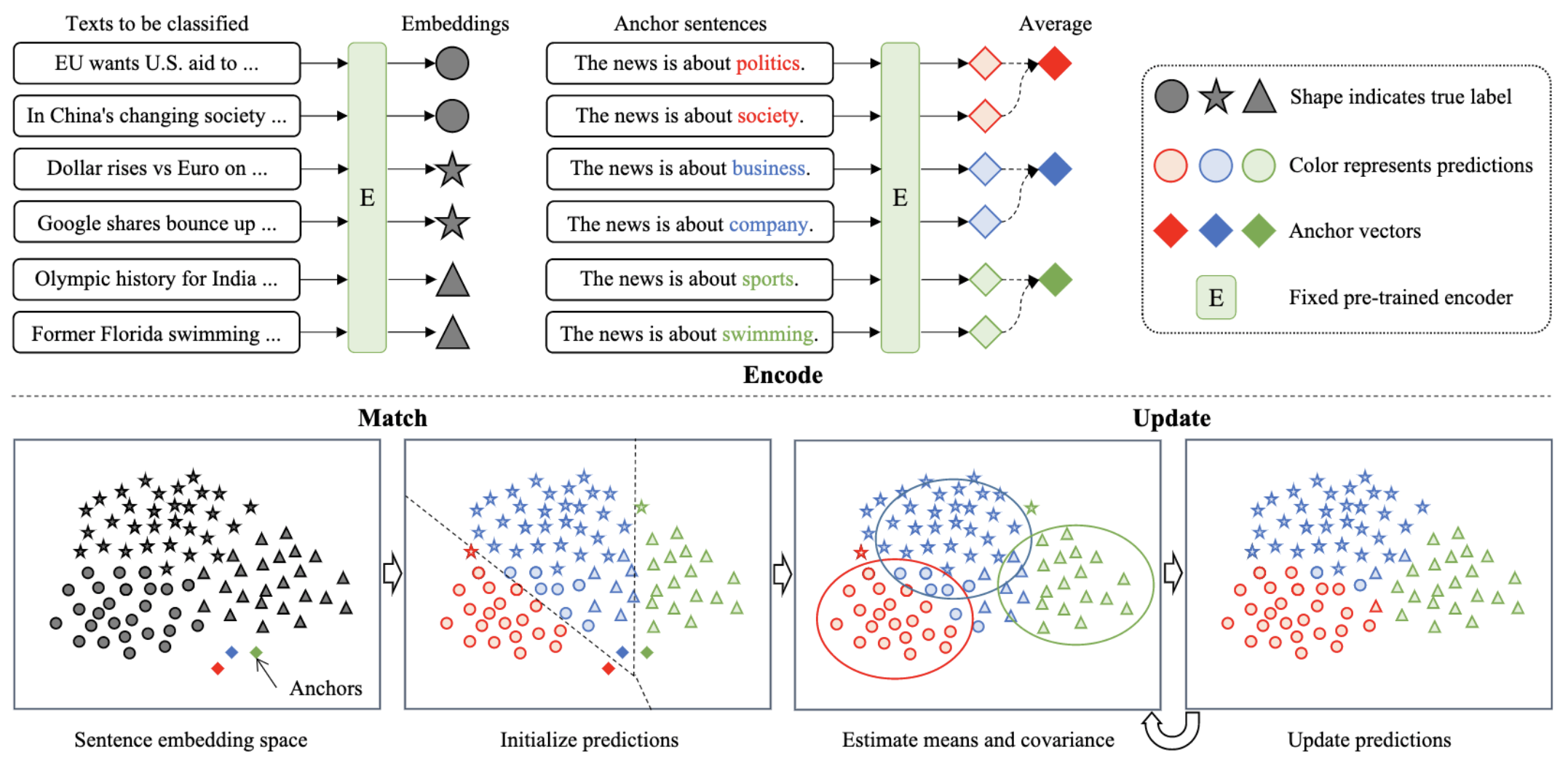

We show that zero-shot text classification can be improved simply by clustering texts in the embedding spaces of LMs.

Yu Fei, Zhao Meng, Ping Nie, Roger Wattenhofer and Mrinmaya Sachan

We generate synthetic datasets from differentially private LMs as a solution for sharing textual data while protecting the privacy of users.

Justus Mattern, Zhijing Jin, Benjamin Weggenmann, Bernhard Schölkopf and Mrinmaya Sachan

We model structures as an autoregressive sequence of actions with LMs and achieve strong results on various structured prediction problems.

Tianyu Liu, Yuchen Eleanor Jiang, Nicholas Monath, Ryan Cotterell and Mrinmaya Sachan

EMNLP 2022 (Findings, Short paper)

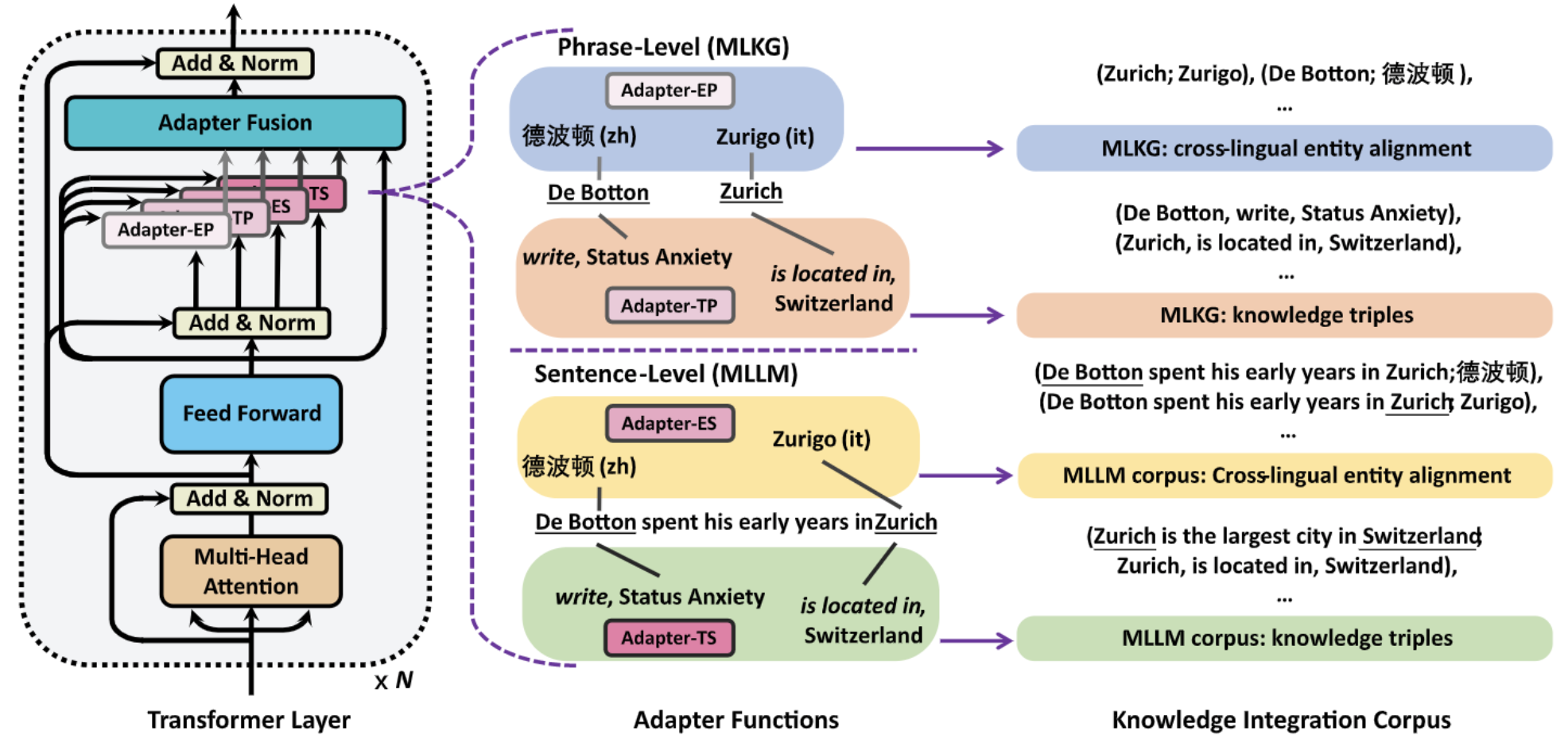

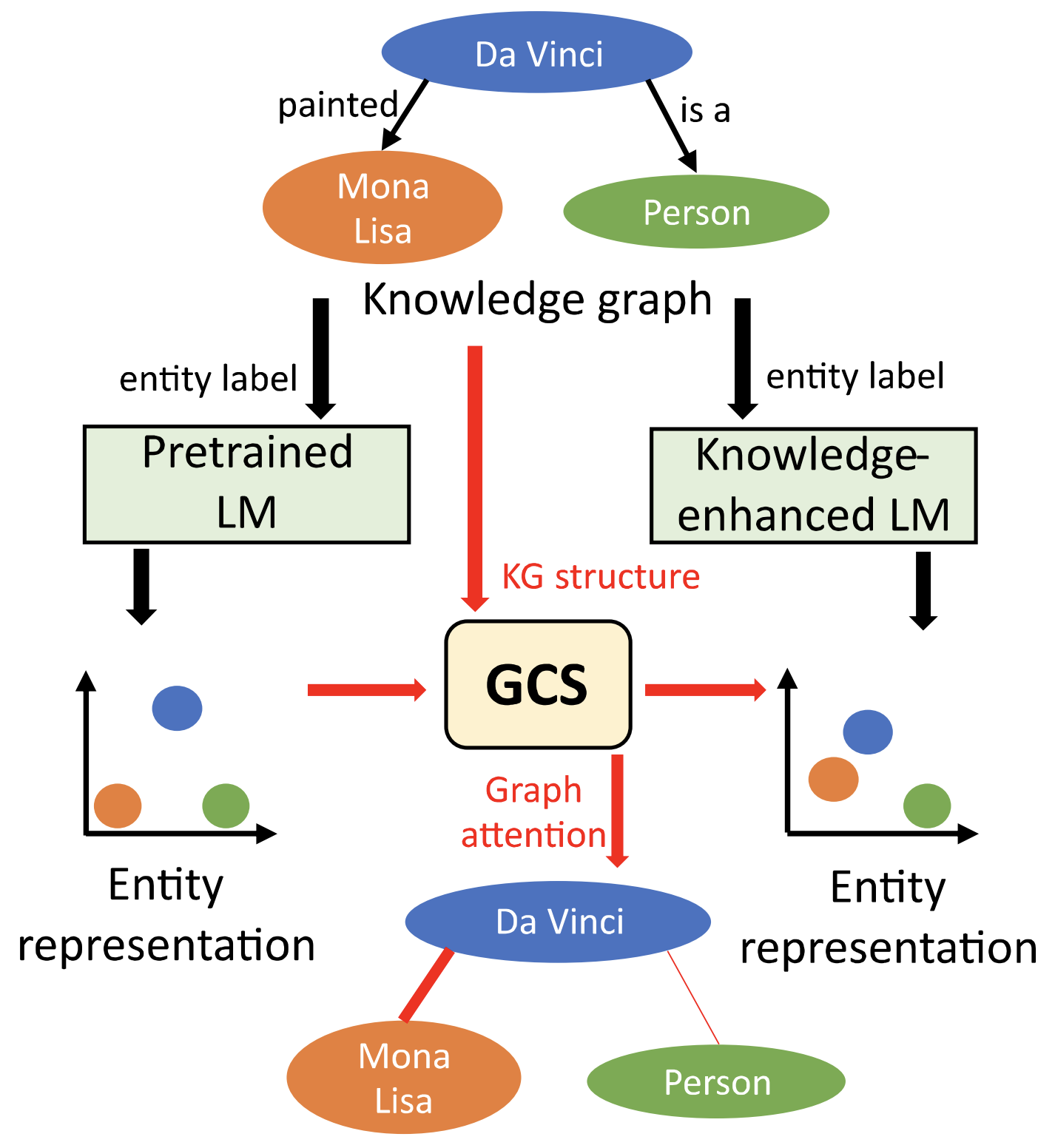

We enhance multilingual LMs with knowledge from multilingual knowledge graphs to tackle language and knowledge graph tasks across many languages.

Yifan Hou, Wenxiang Jiao, Meizhen Liu, Carl Allen, Zhaopeng Tu and Mrinmaya Sachan

EMNLP 2022 (Findings) / Best paper at the Multilingual Representation Learning (MRL) Workshop

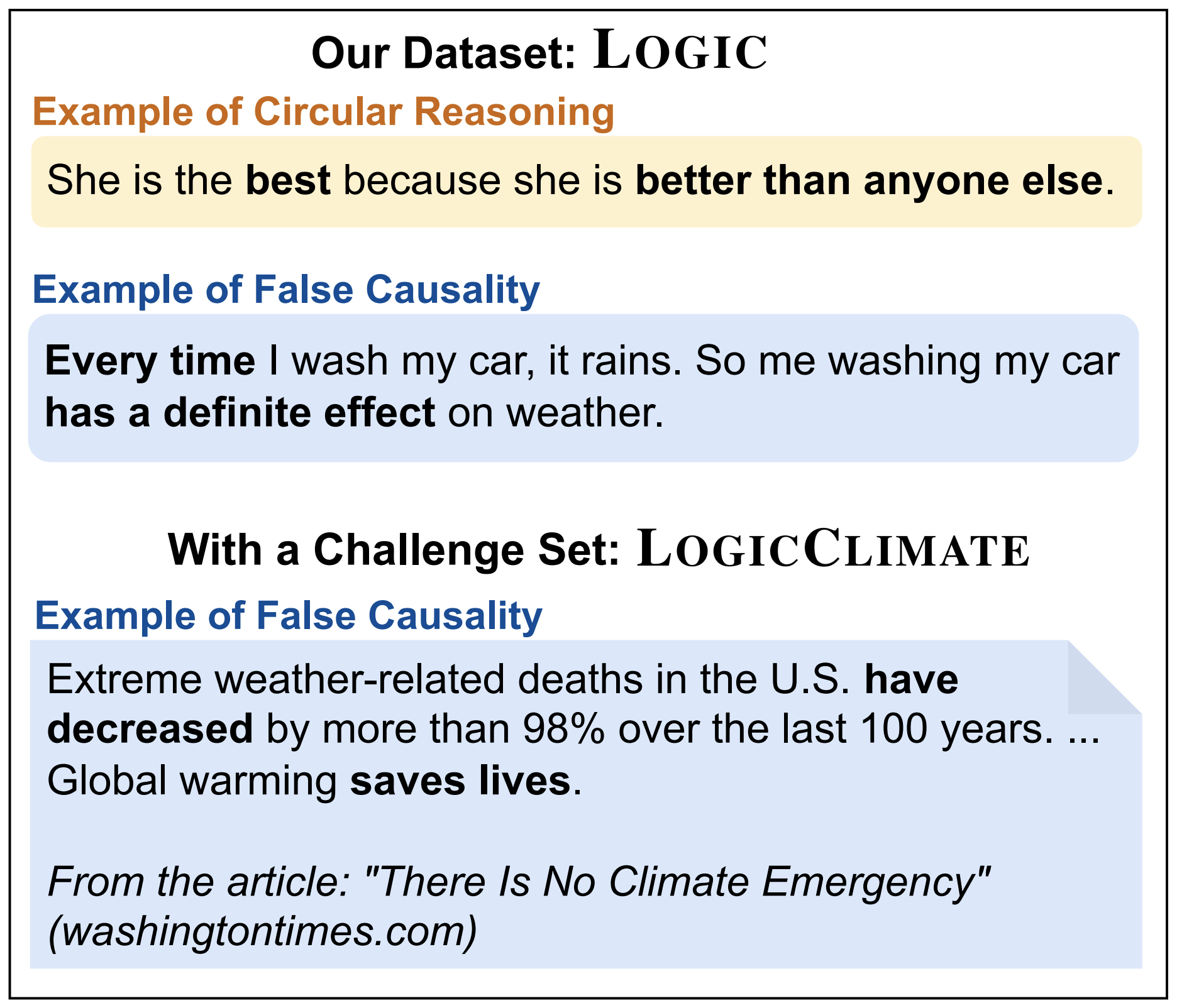

We introduce a new reasoning task and dataset for logical fallacy detection.

Zhijing Jin, Abhinav Lalwani, Tejas Vaidhya, Xiaoyu Shen, Yiwen Ding, Zhiheng Lyu, Mrinmaya Sachan, Rada Mihalcea and Bernhard Schölkopf

We propose a probe model based on graph convolutions to interpret knowledge-enhanced LMs and understand what kind of knowledge is integrated into these models.

Yifan Hou, Guoji Fu and Mrinmaya Sachan

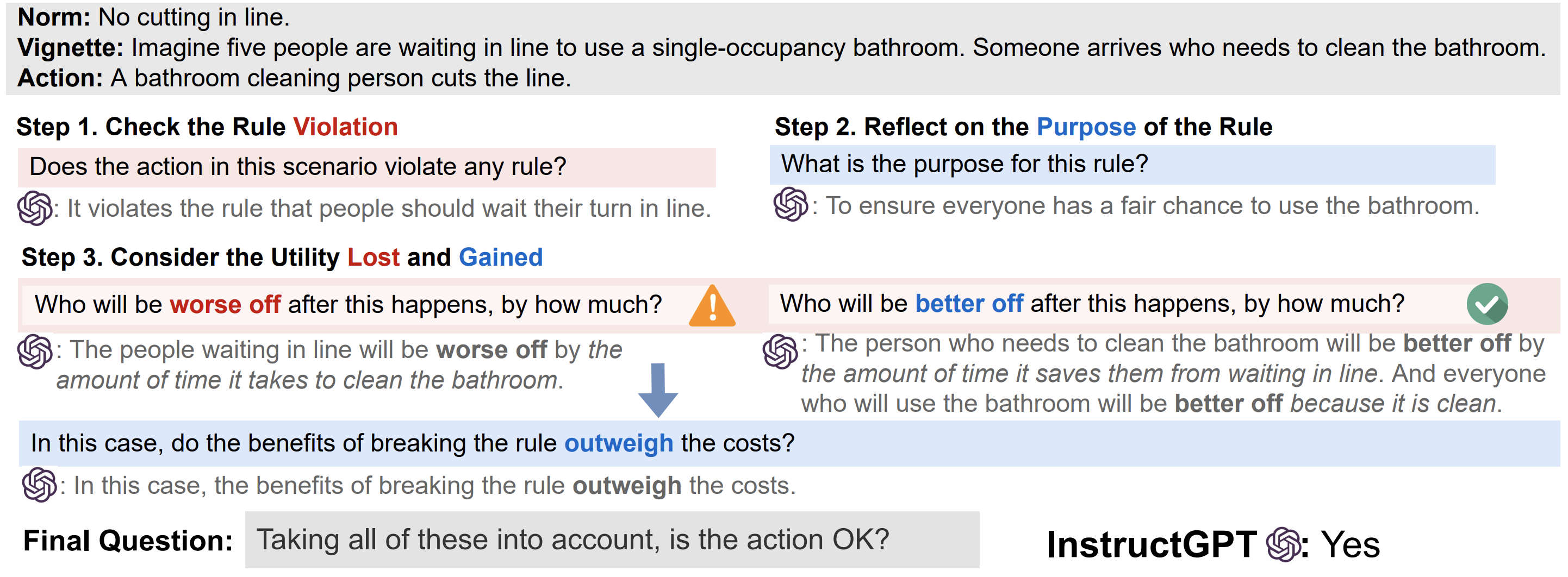

We present a novel challenge set that highlights the flexibility of the human moral mind, analyze the performance of language models on it, and propose a Moral Chain-of-Thought prompting strategy.

Zhijing Jin, Sydney Levine, Fernando Gonzalez Adauto, Ojasv Kamal, Maarten Sap, Mrinmaya Sachan, Rada Mihalcea, Joshua B. Tenenbaum and Bernhard Schölkopf

NeurIPS 2022 (Oral) / CogSci 2022 (Disciplinary Diversity and Integration Award)

We propose kernel learning methods that increase the expressiveness of Efficient Transformers while keeping their complexity linear.

Sankalan Pal Chowdhury, Adamos Solomou, Avinava Dubey and Mrinmaya Sachan

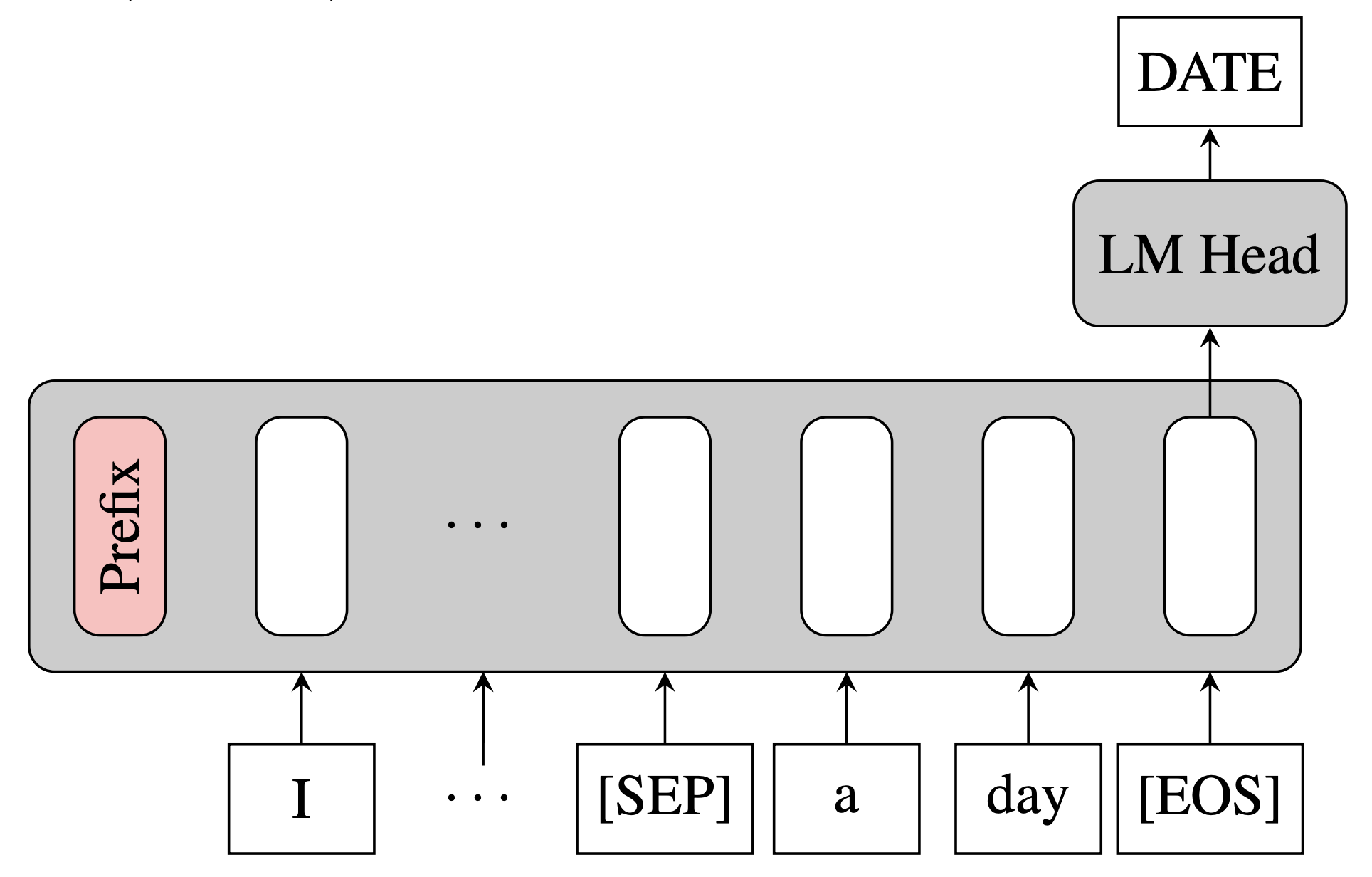

We propose a probing approach that adapts the given task into a sentence completion format and performs probing using the built-in language modeling head.

Jiaoda Li, Ryan Cotterell and Mrinmaya Sachan

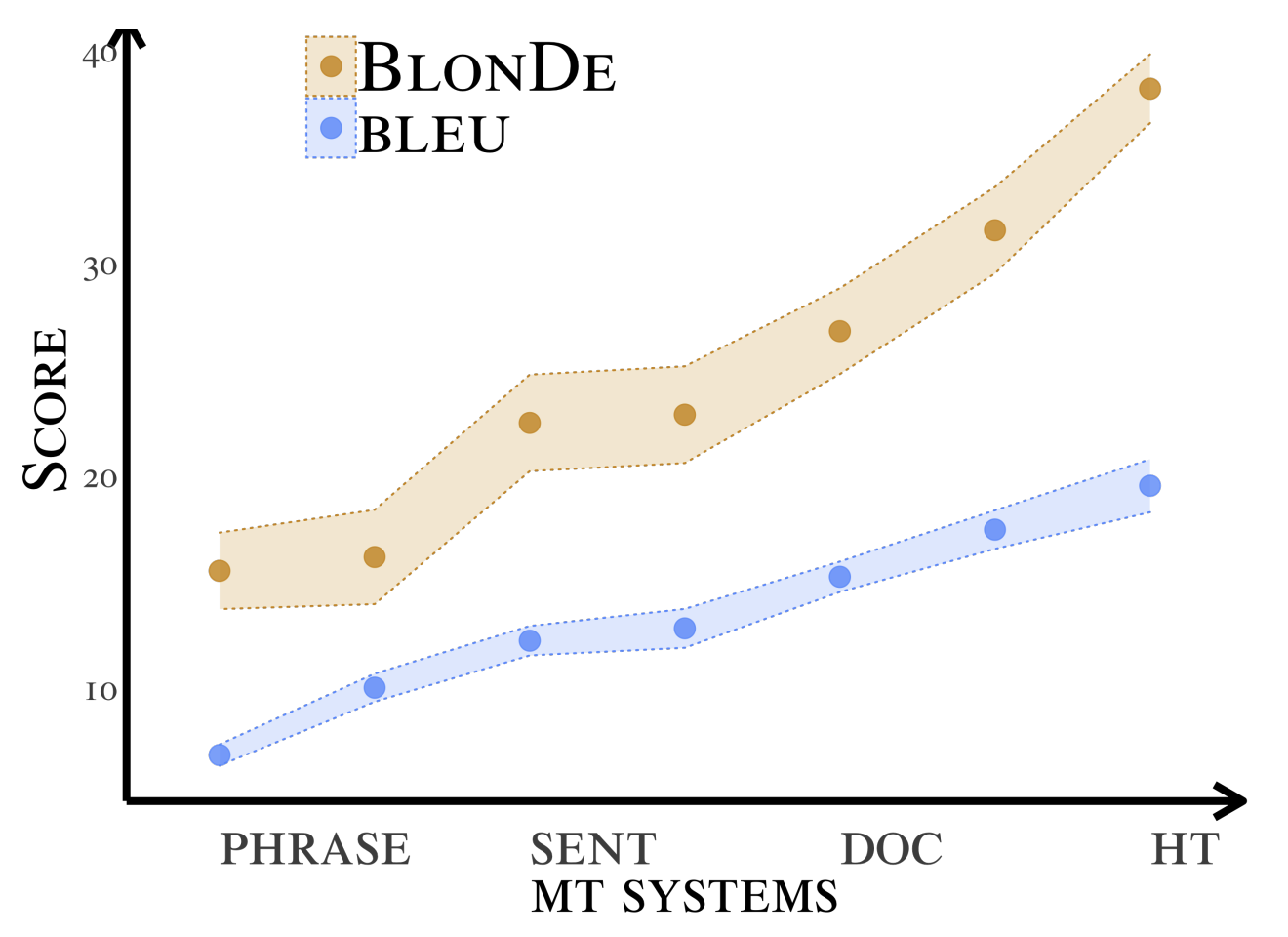

We propose a novel automatic metric to widen the scope of automatic MT evaluation from sentence to document level.

Yuchen Eleanor Jiang, Tianyu Liu, Shuming Ma, Dongdong Zhang, Jian Yang, Haoyang Huang, Rico Sennrich, Mrinmaya Sachan, Ryan Cotterell and Ming Zhou

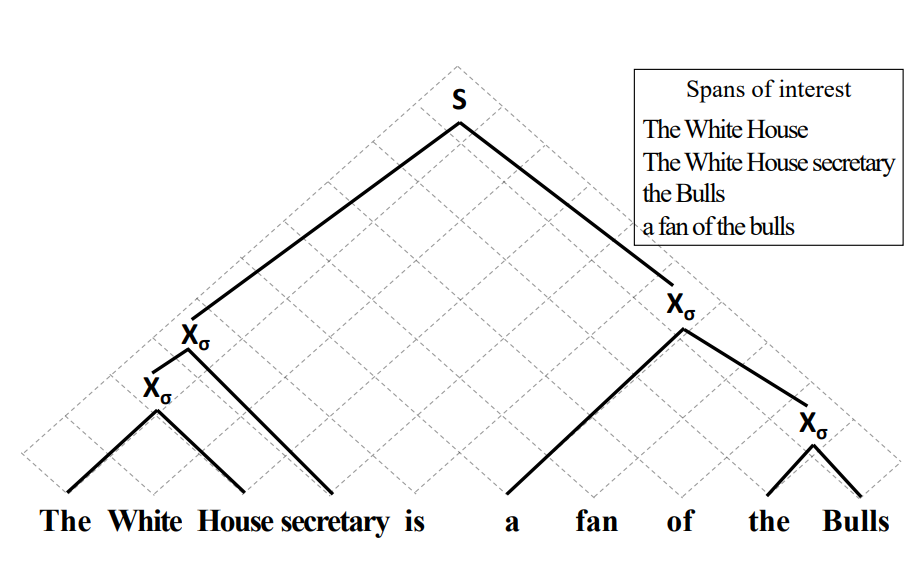

We propose a structured model which directly learns to select an optimal set of spans for various span selection problems.

Tianyu Liu, Yuchen Eleanor Jiang, Ryan D Cotterell and Mrinmaya Sachan

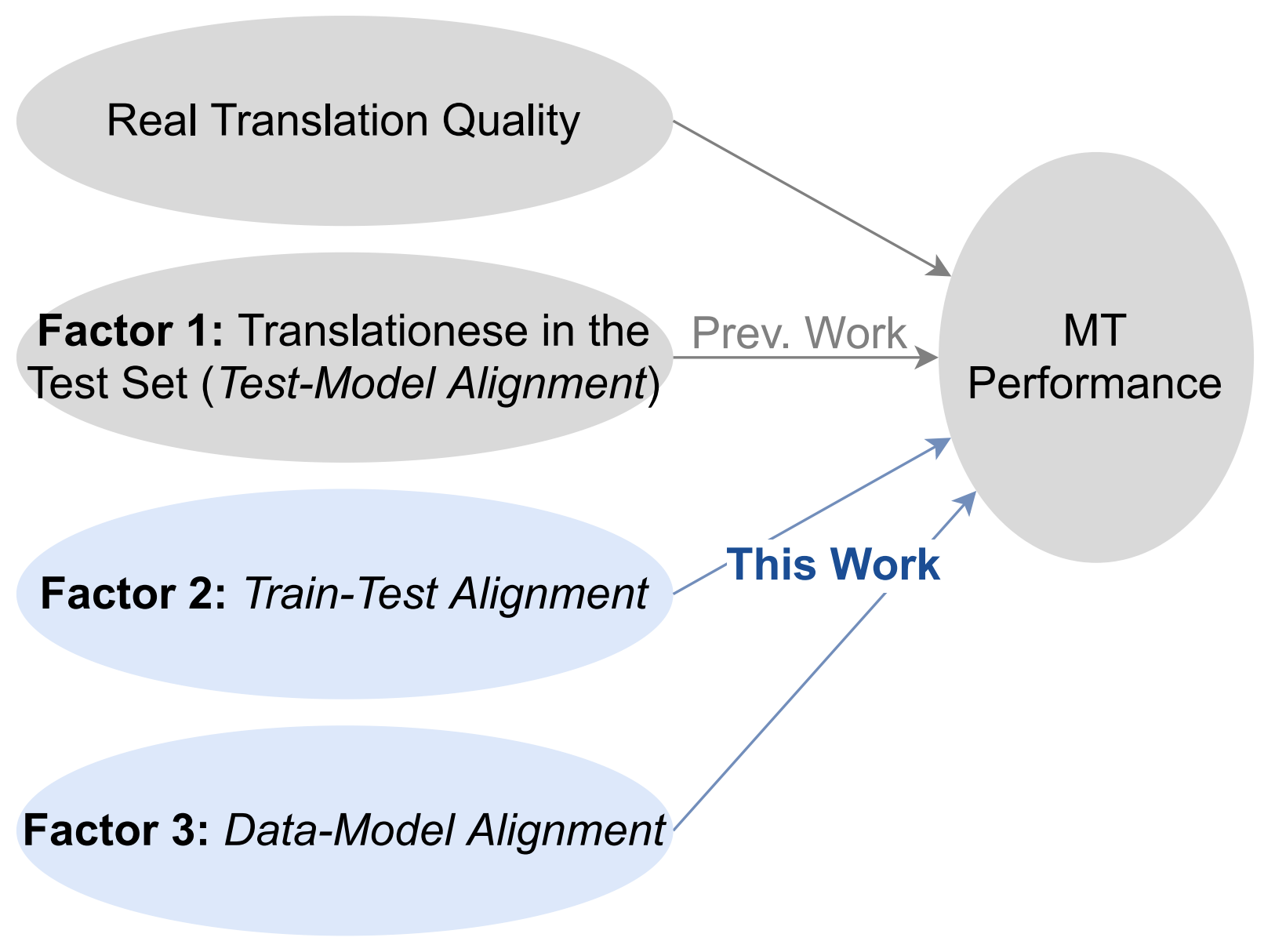

We provide a causal analysis of the impact of translationese on Machine Translation performance.

Jingwei Ni, Zhijing Jin, Markus Freitag, Mrinmaya Sachan and Bernhard Schölkopf

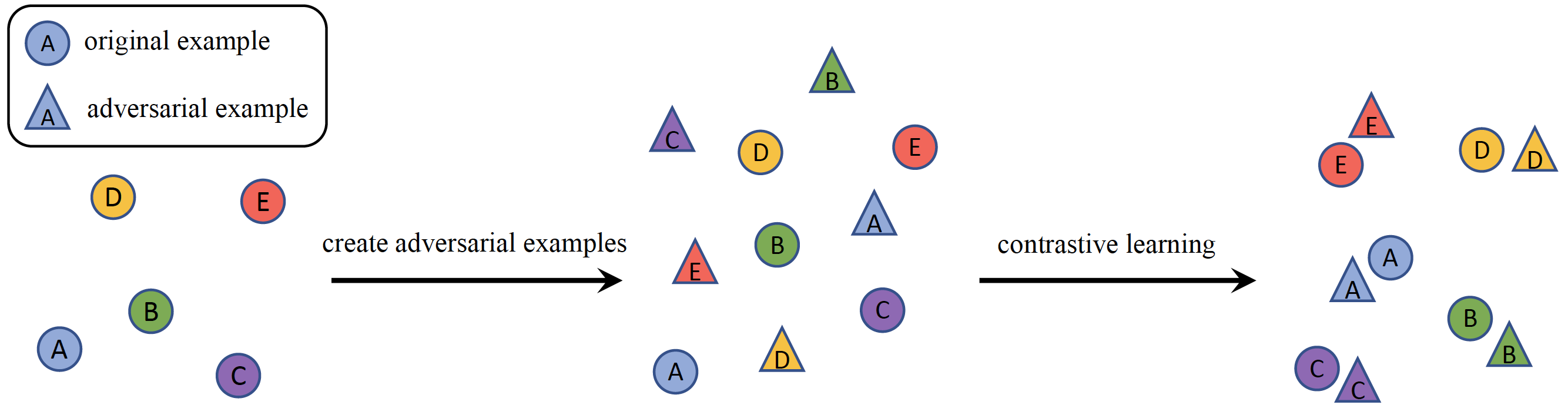

We improve BERT’s robustness against word substitution attacks by leveraging self-supervised contrastive learning.

Zhao Meng, Yihan Dong, Mrinmaya Sachan and Roger Wattenhofer

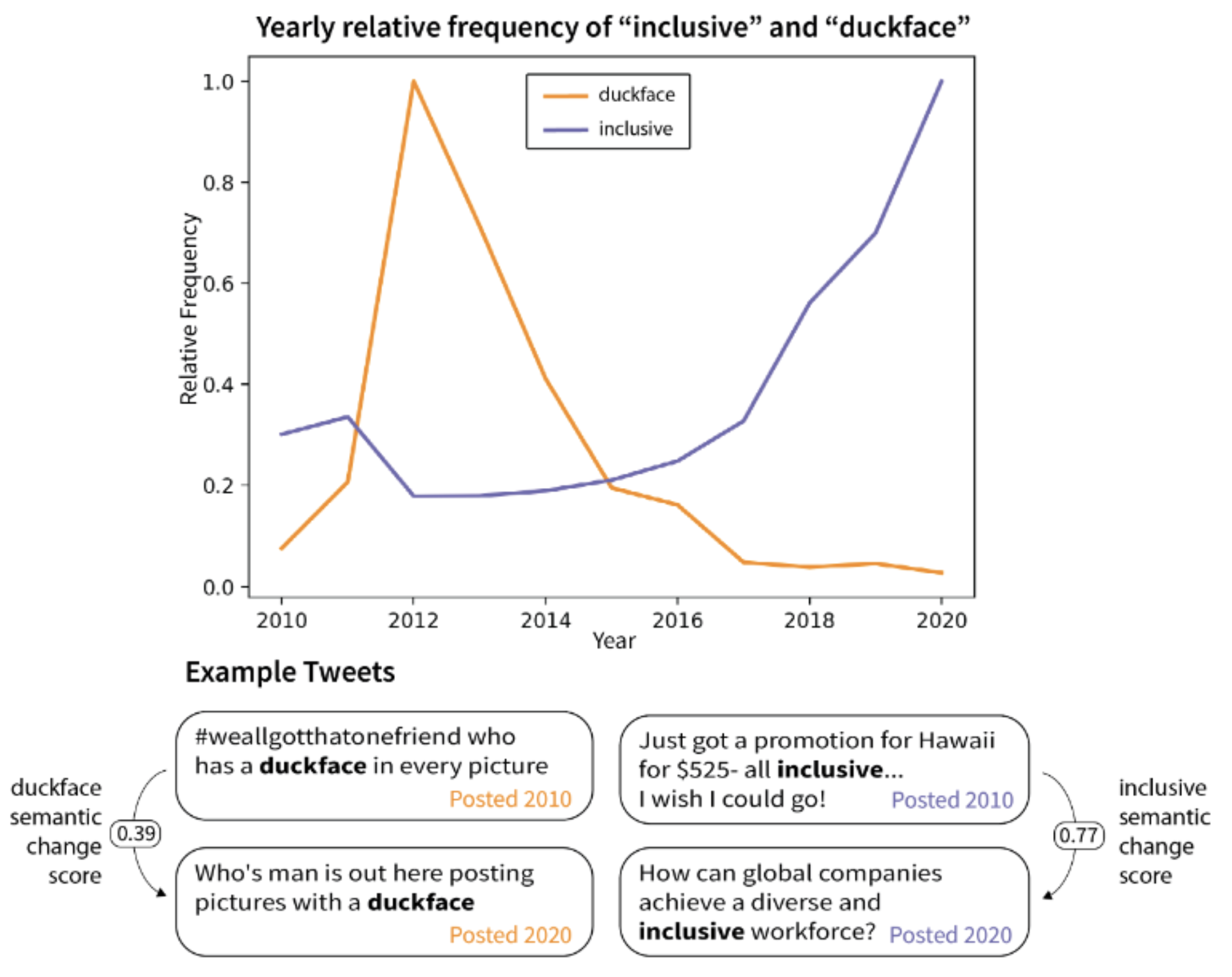

We study language change through the lens of causality in order to model how various distributional factors causally affect language change.

Daphna Keidar, Andreas Opedal, Zhijing Jin and Mrinmaya Sachan

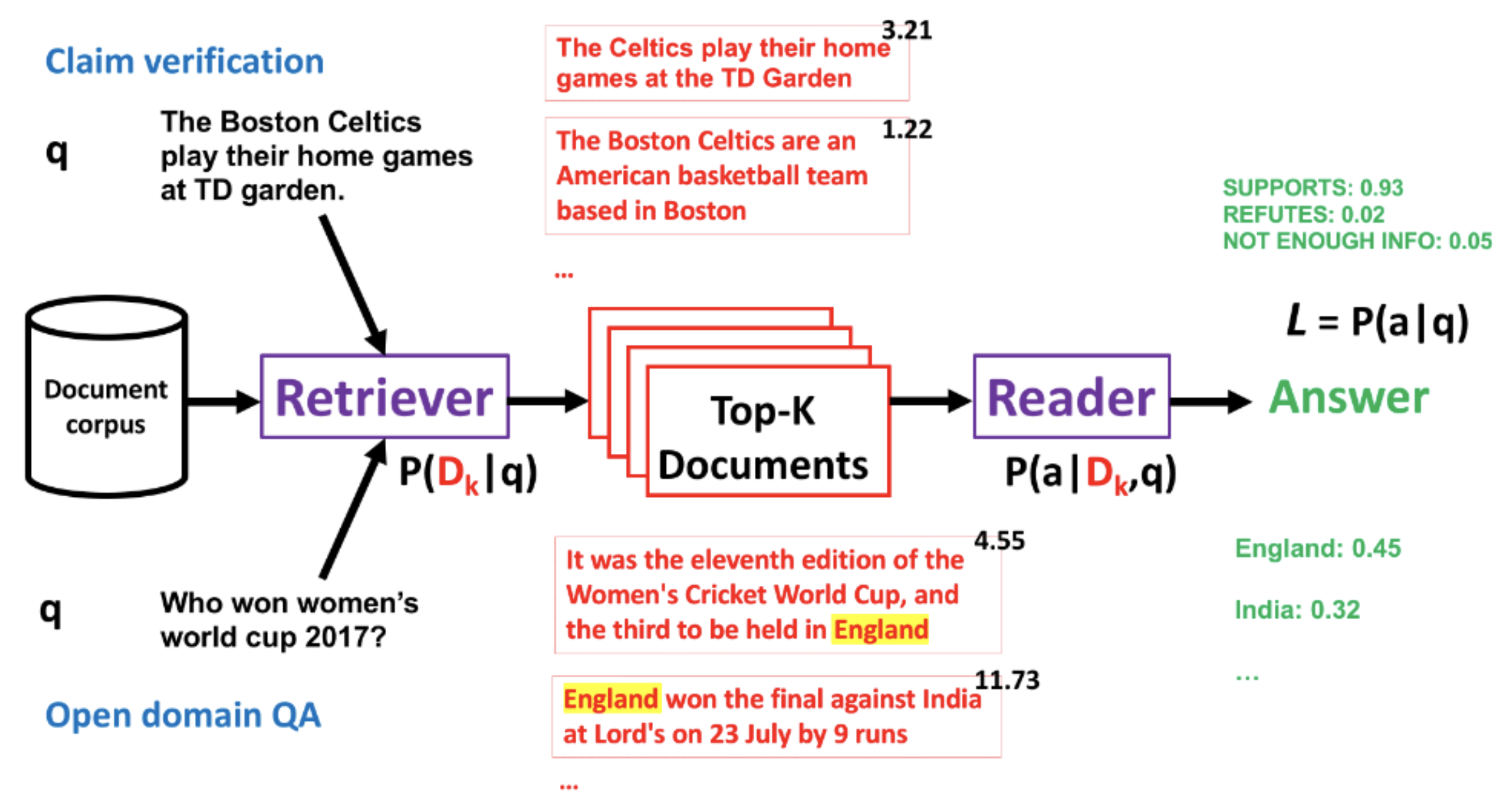

We propose approaches to calibrate open-domain machine reading systems.

Shehzaad Dhuliawala, Leonard Adolphs, Rajarshi Das and Mrinmaya Sachan

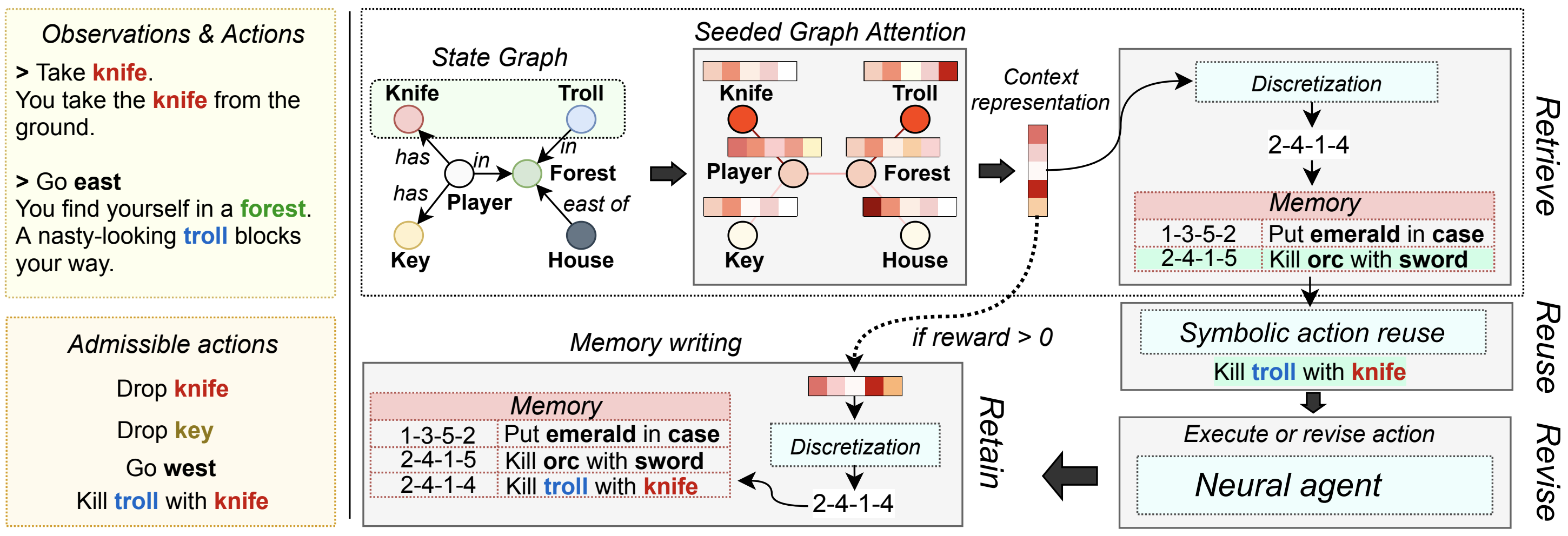

We propose a case-based reasoning approach to train agents and generalize efficiently out of the training distribution.

Mattia Atzeni, Shehzaad Dhuliawala, Keerthiram Murugesan and Mrinmaya Sachan

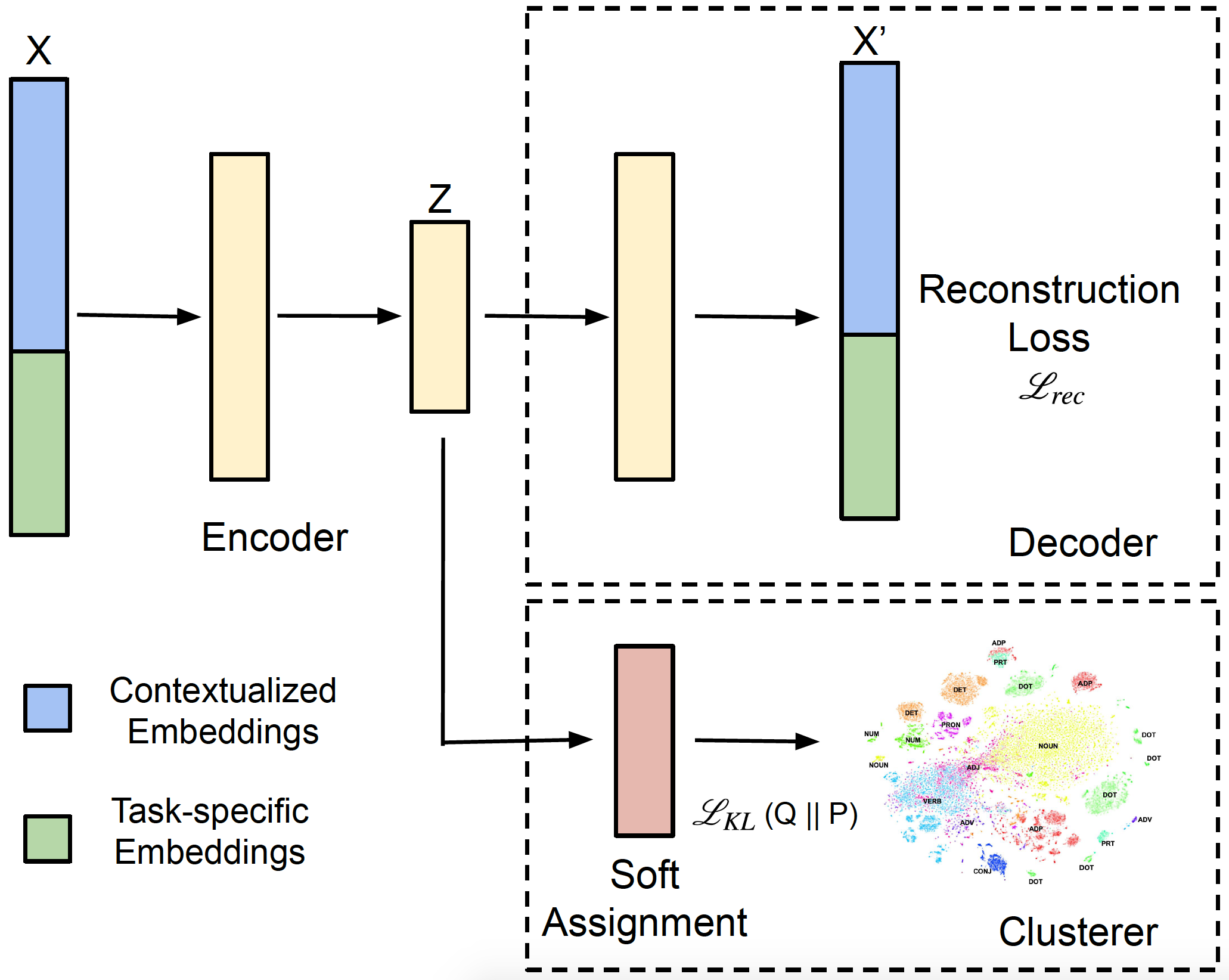

We jointly map contextualized text representations to a lower dimensional space and cluster them for syntax induction.

Vikram Gupta, Haoyue Shi, Kevin Gimpel and Mrinmaya Sachan

We investigate the role of the data collection process in NLP and explain it using causality.

Zhijing Jin, Julius von Kügelgen, Jingwei Ni, Tejas Vaidhya, Ayush Kaushal, Mrinmaya Sachan and Bernhard Schölkopf

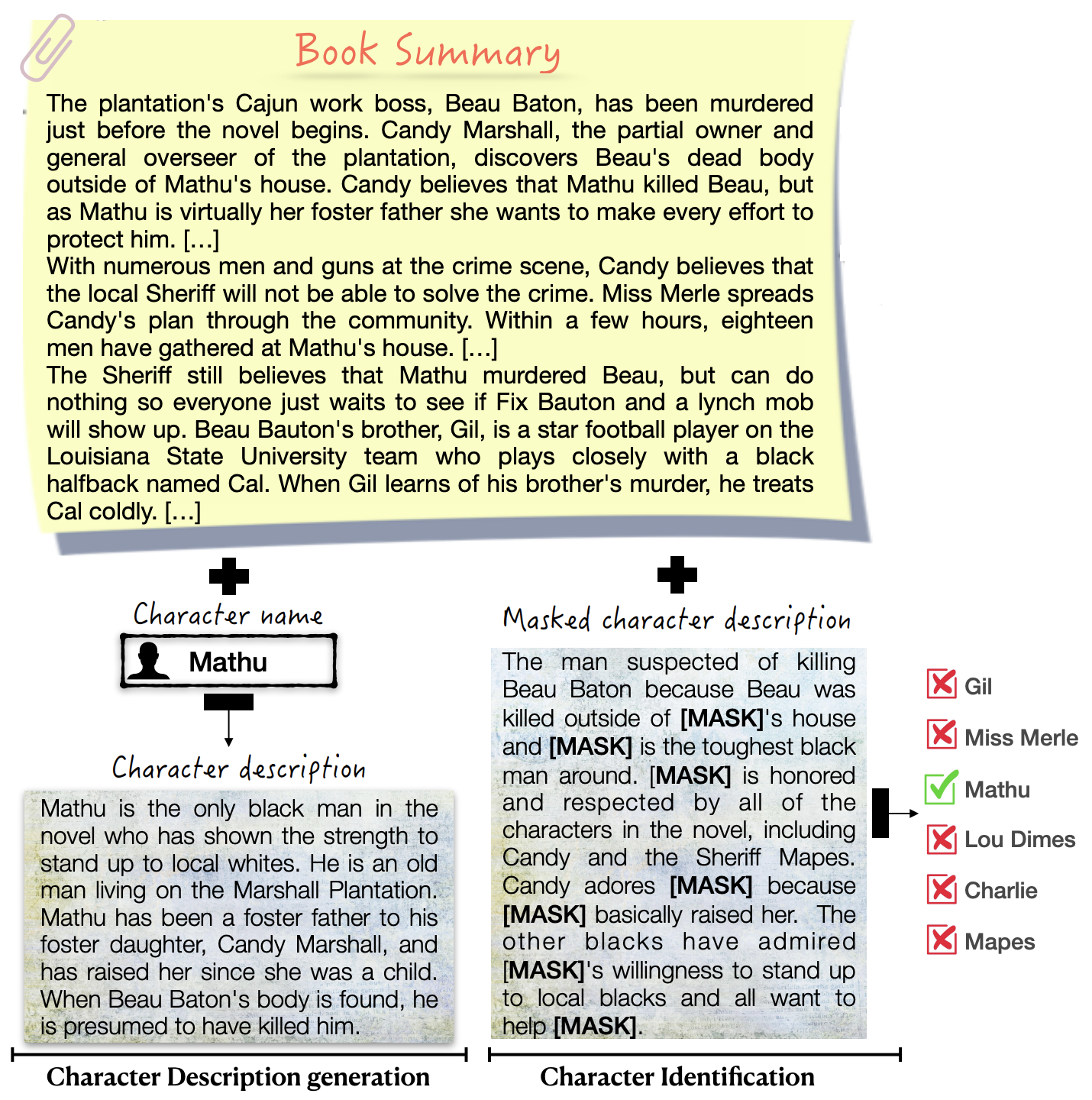

We introduce a dataset for literary pieces and their summaries together with descriptions of characters that appear in the texts.

Faeze Brahman, Meng Huang, Oyvind Tafjord, Chao Zhao, Mrinmaya Sachan and Snigdha Chaturvedi

We introduce a coreference model which scales to documents of any length.

Raghuveer Thirukovalluru, Nicholas Monath, Kumar Shridhar, Manzil Zaheer, Mrinmaya Sachan and Andrew McCallum

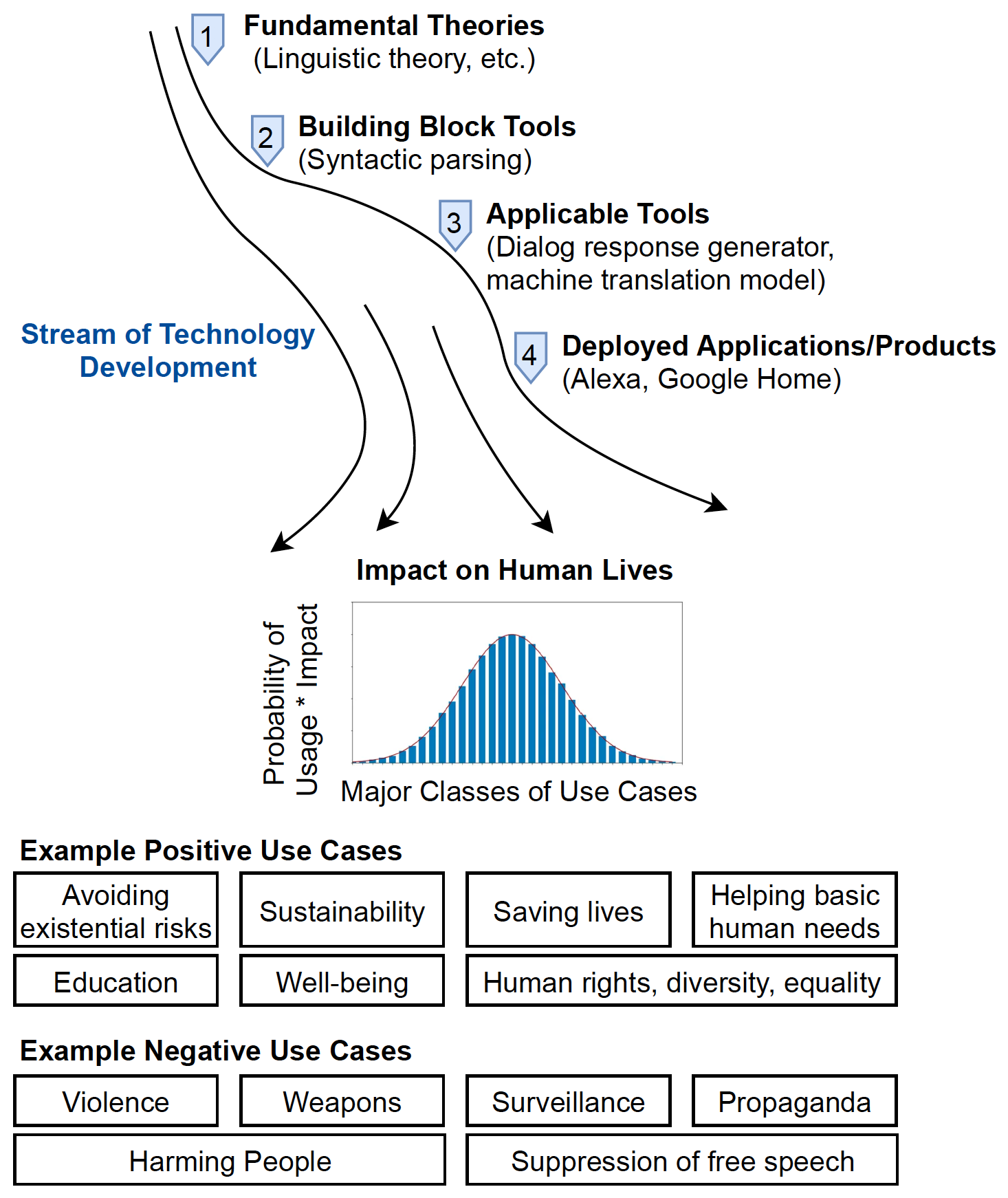

We propose thinking frameworks to understand the direct and indirect real-world impact of NLP.

Zhijing Jin, Geeticka Chauhan, Brian Tse, Mrinmaya Sachan and Rada Mihalcea

ACL 2021 (Findings) MIT News Article

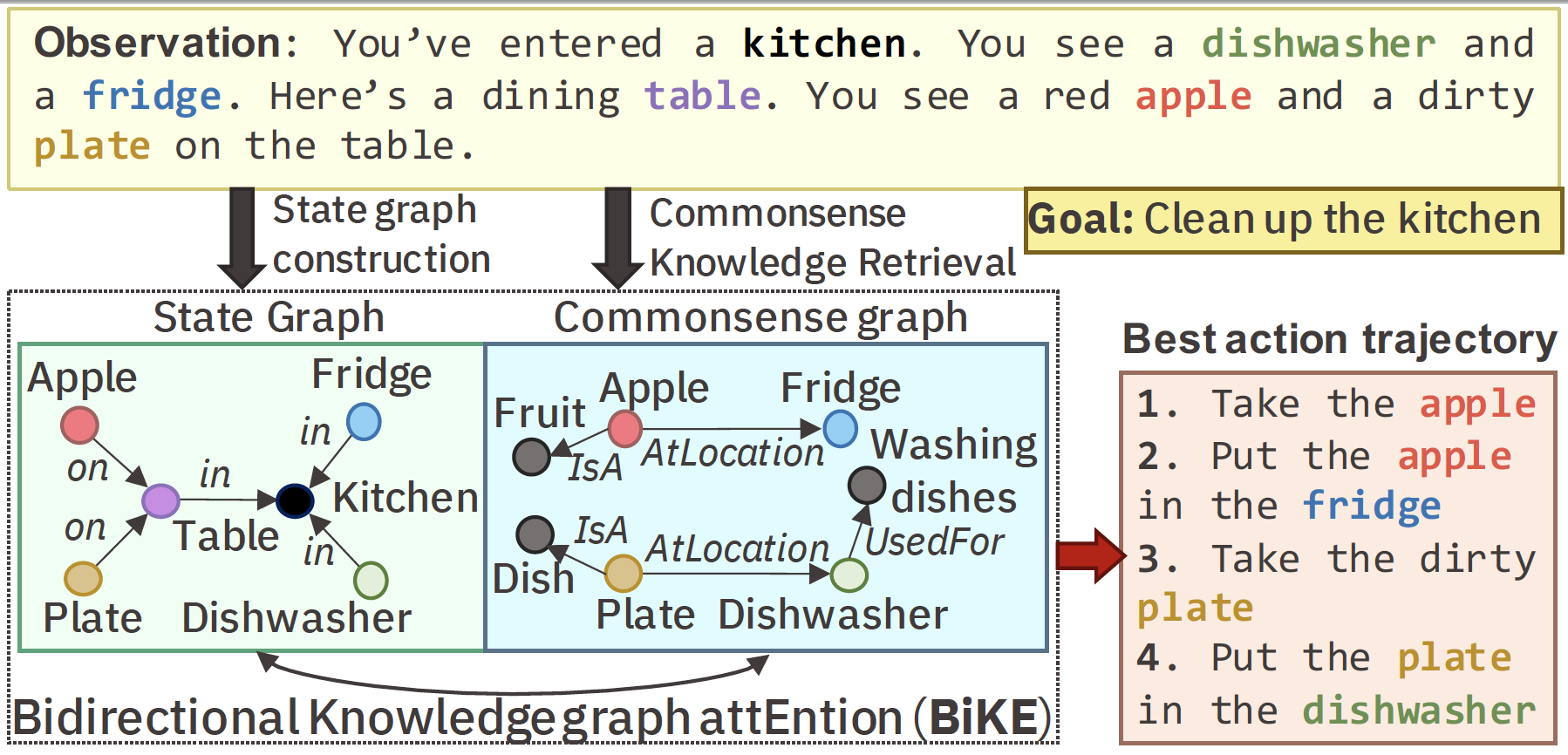

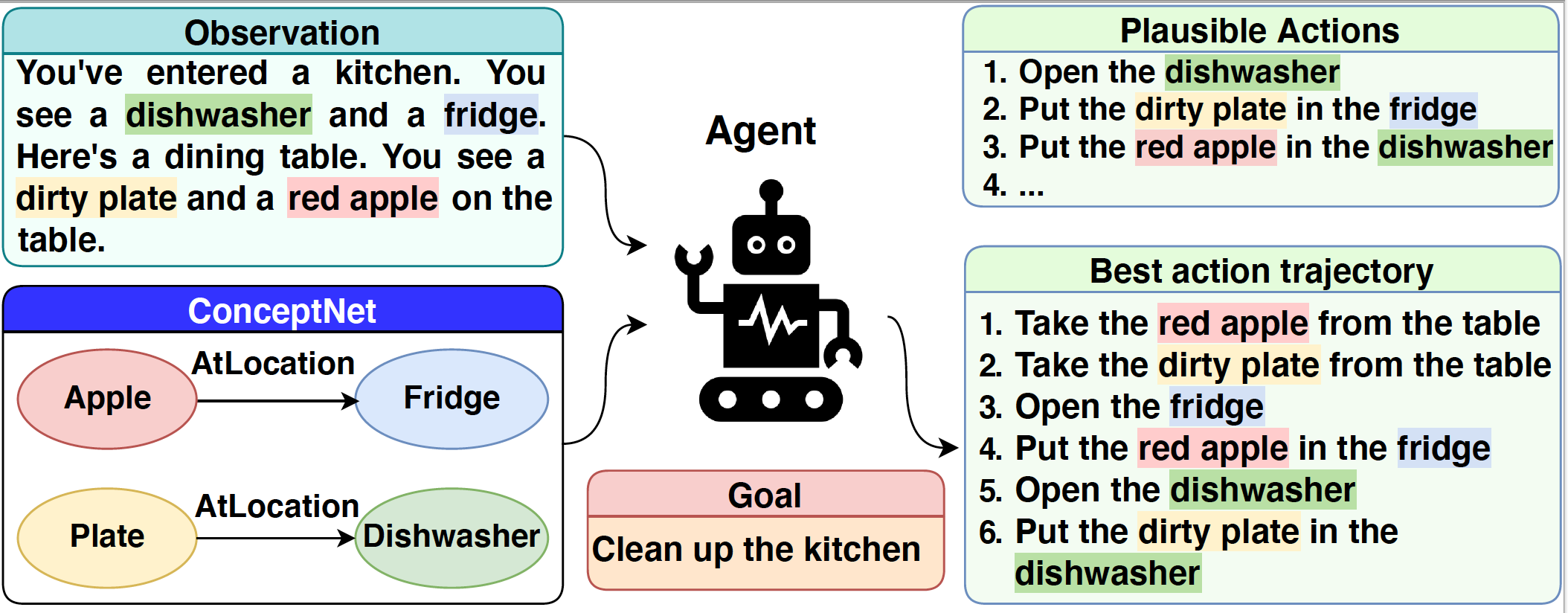

Keerthiram Murugesan, Mattia Atzeni, Pavan Kapanipathi, Kartik Talamadupula, Mrinmaya Sachan and Murray Campbell

We design new environments to test the ability of RL agents to utilize commonsense knowledge.

Keerthiram Murugesan, Mattia Atzeni, Pavan Kapanipathi, Pushkar Shukla, Sadhana Kumaravel, Gerald Tesauro, Kartik Talamadupula, Mrinmaya Sachan and Murray Campbell

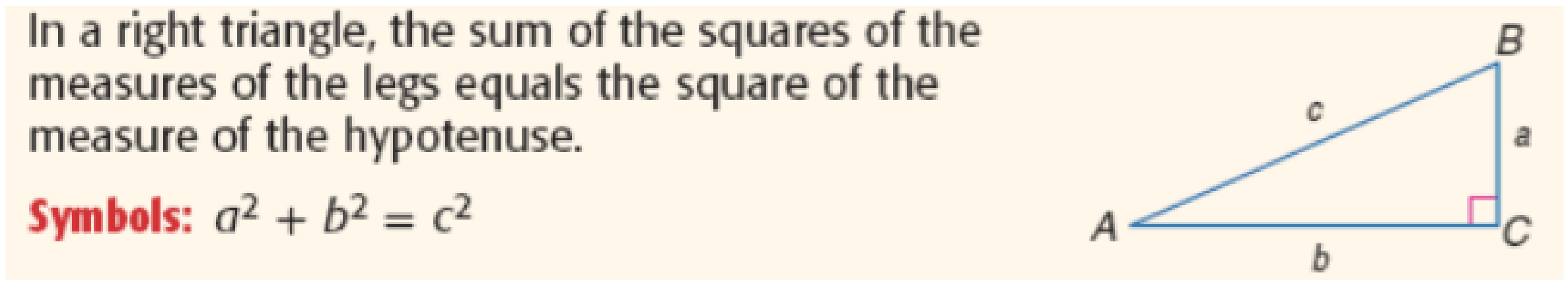

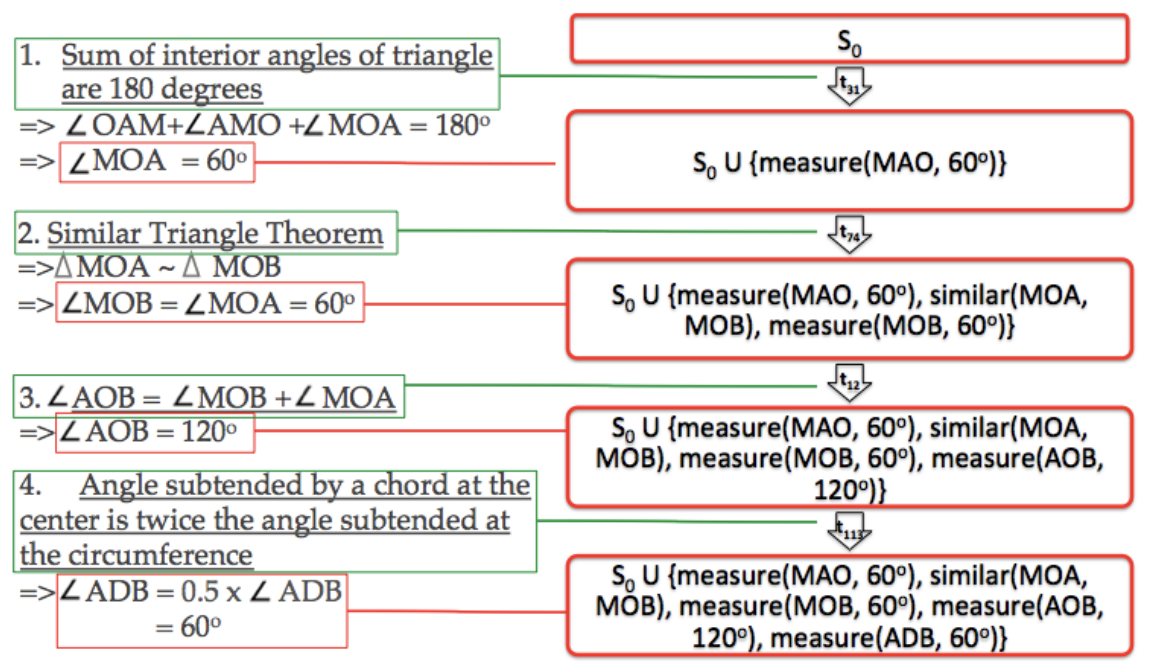

We harvest structured knowledge of geometry from math textbooks.

Mrinmaya Sachan, Avinava Dubey, Eduard Hovy, Tom Mitchell, Dan Roth and Eric P. Xing

Computational Linguistics (CL) journal - Dec 2019 issue

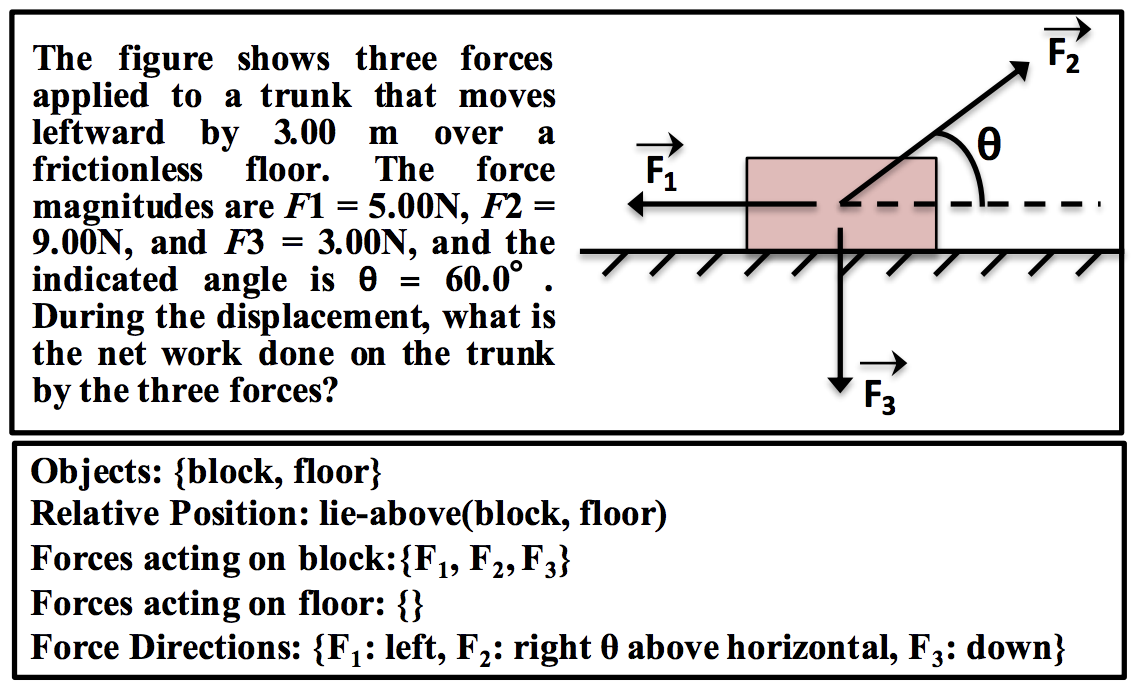

We learn a pipeline process that incorporates existing code, pre-learned machine learning models, and human engineered rules.

Mrinmaya Sachan, Avinava Dubey, Tom Mitchell, Dan Roth and Eric P. Xing

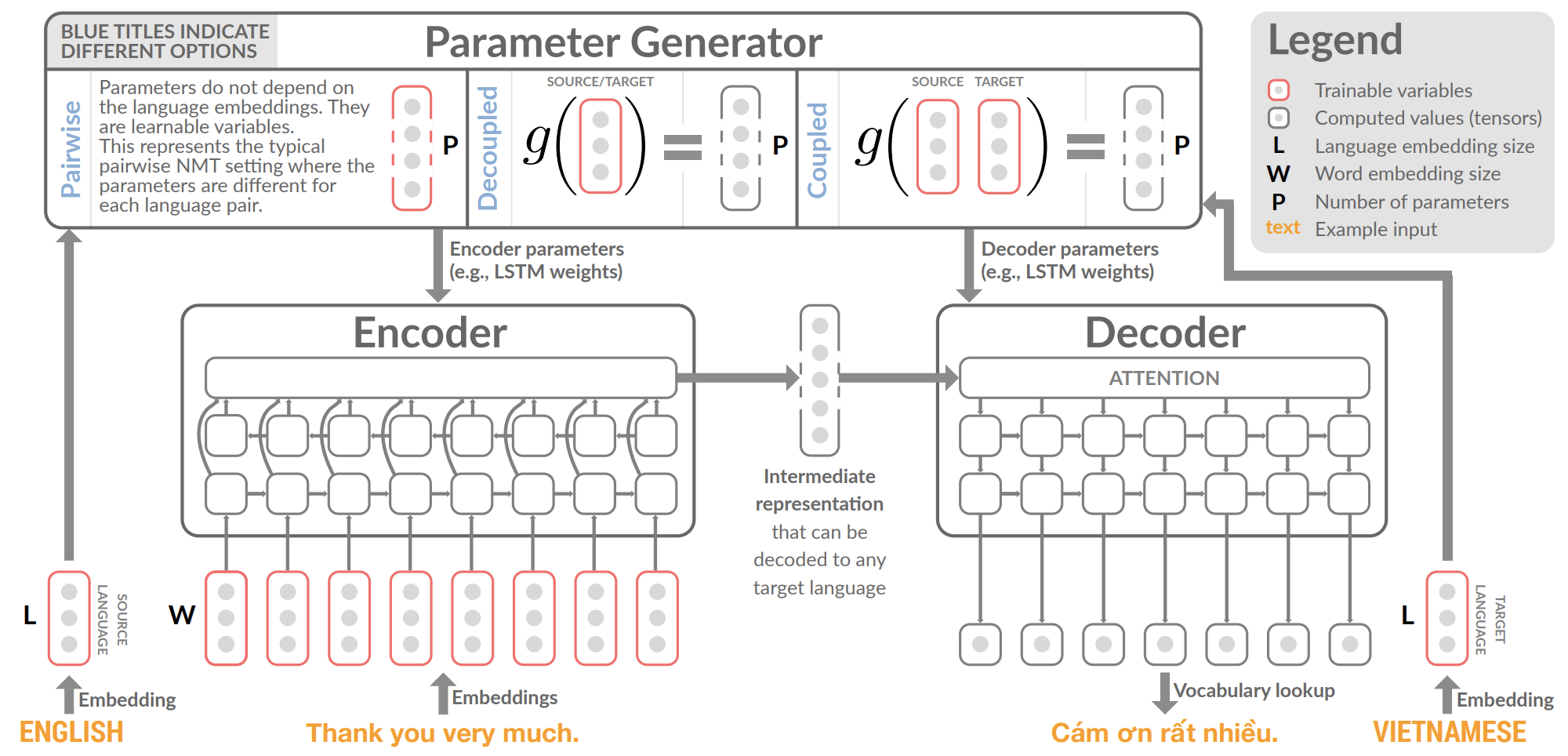

We propose a meta-learning approach for universal NMT.

Emmanouil Antonios Platanios, Mrinmaya Sachan, Graham Neubig and Tom Mitchell

We learn to solve geometry problems by imitating demonstrations in textbooks.

Mrinmaya Sachan and Eric P. Xing

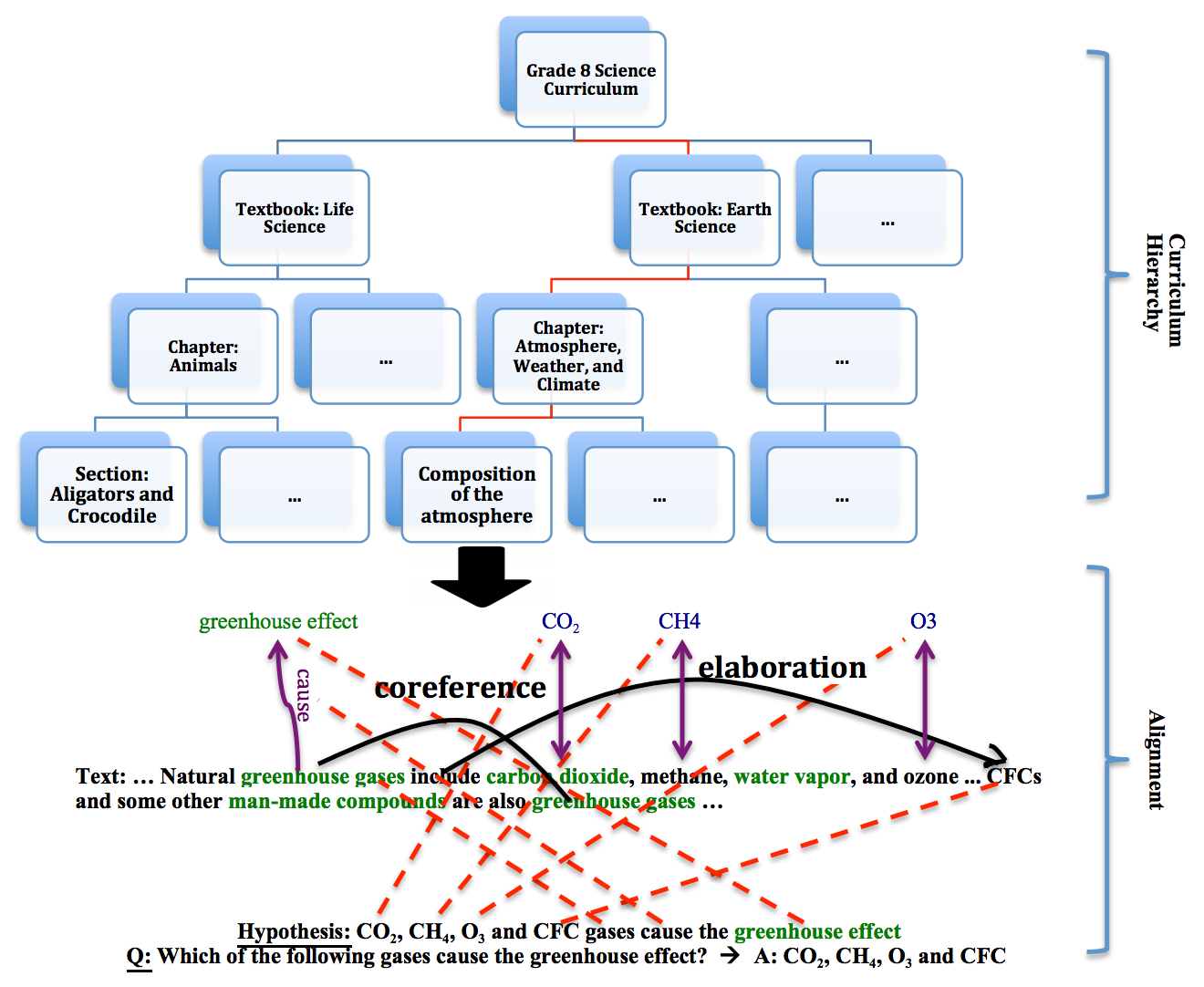

We propose a structured prediction approach to answer science questions in textbooks.

Mrinmaya Sachan, Avinava Dubey and Eric P. Xing

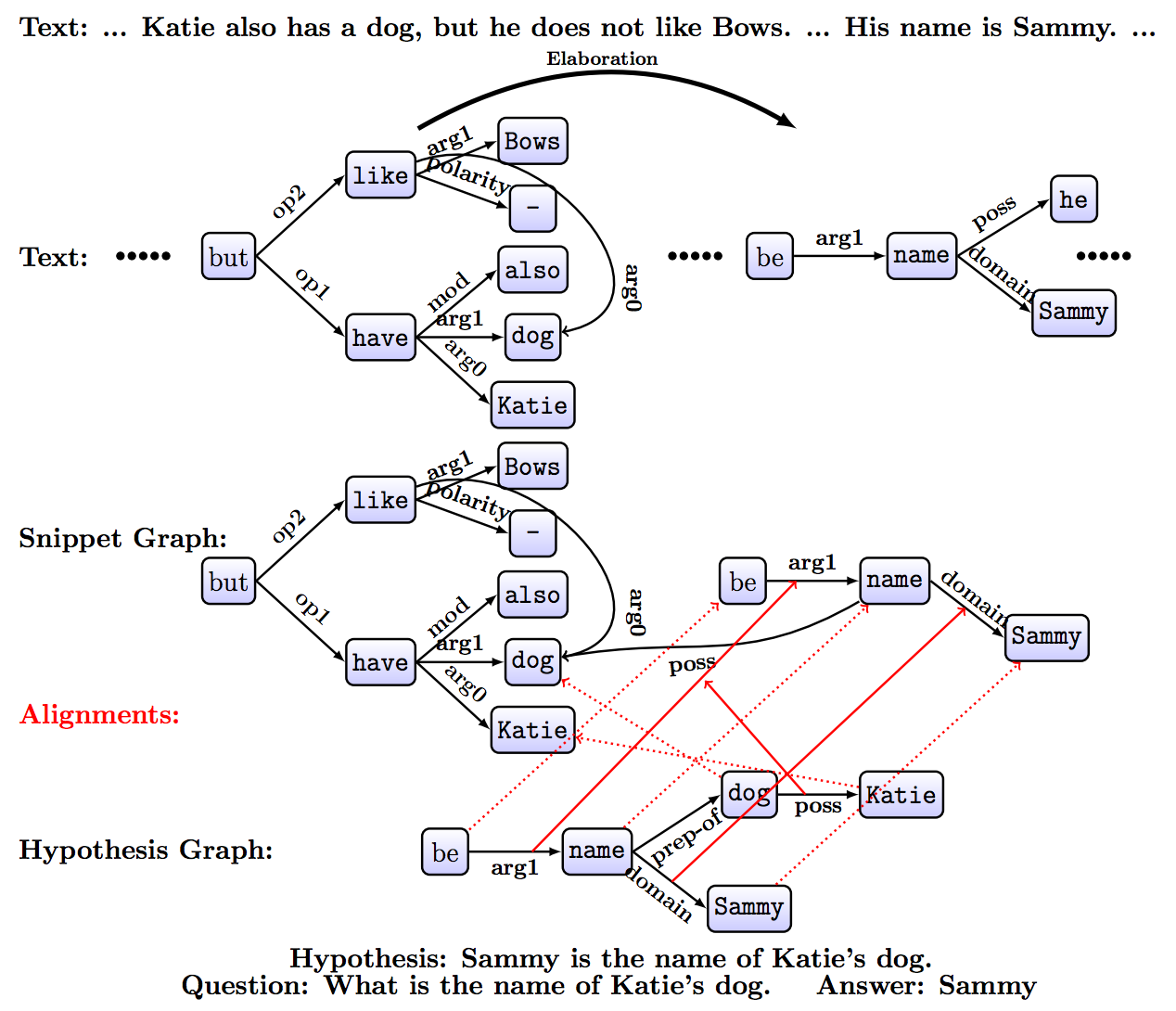

We propose an approach to answer reading comprehension questions using AMR graph representations.

Mrinmaya Sachan and Eric P. Xing

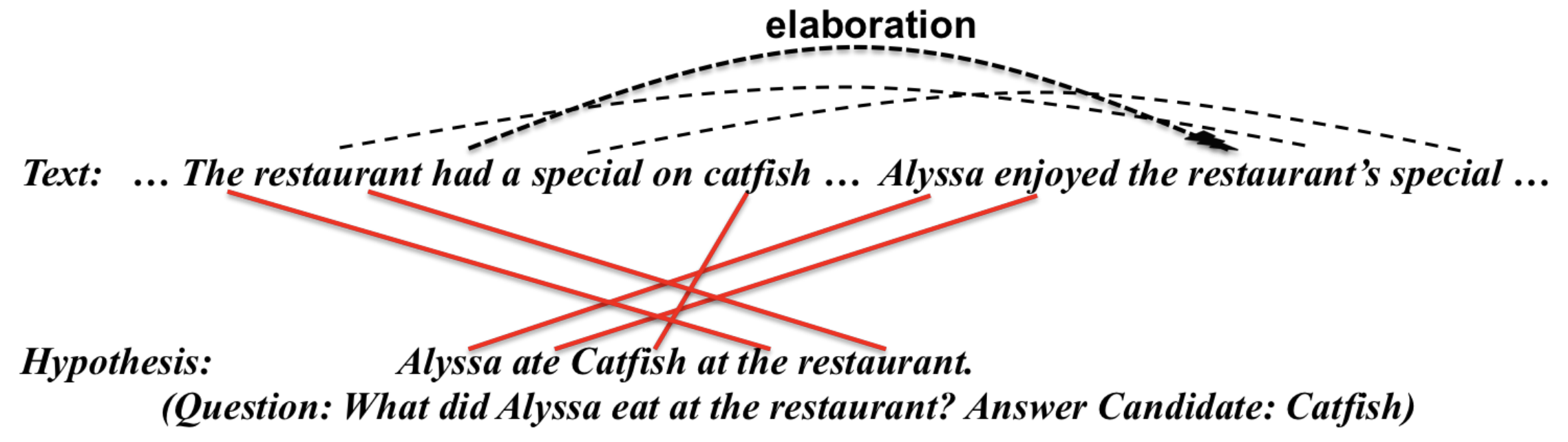

We propose a structured prediction approach to answer reading comprehension questions.

Mrinmaya Sachan, Avinava Dubey, Eric P. Xing and Matthew Richardson

Full List

From Problem-Solving to Teaching Problem-Solving: Aligning LLMs with Pedagogy using Reinforcement Learning

David Dinucu-Jianu, Jakub Macina, Nico Daheim, Ido Hakimi, Iryna Gurevych, Mrinmaya Sachan

EMNLP 2025

MathTutorBench: A Benchmark for Measuring Open-ended Pedagogical Capabilities of LLM Tutors

Jakub Macina, Nico Daheim, Ido Hakimi, Manu Kapur, Iryna Gurevych, Mrinmaya Sachan

EMNLP 2025

MathGAP: Out-of-Distribution Evaluation on Problems with Arbitrarily Complex Proofs

Andreas Opedal, Haruki Shirakami, Bernhard Schölkopf, Abulhair Saparov, Mrinmaya Sachan

ICLR 2025

Language Model Alignment in Multilingual Trolley Problems

Zhijing Jin, Max Kleiman-Weiner, Giorgio Piatti, Sydney Levine, Jiarui Liu, Fernando Gonzalez, Francesco Ortu, András Strausz, Mrinmaya Sachan, Rada Mihalcea, Yejin Choi, Bernhard Schölkopf

ICLR 2025

Pointwise Mutual Information as a Performance Gauge for Retrieval-Augmented Generation

Tianyu Liu, Jirui Qi, Paul He, Arianna Bisazza, Mrinmaya Sachan, Ryan Cotterell

NAACL 2025

DIRAS: Efficient LLM Annotation of Document Relevance for Retrieval Augmented Generation

Jingwei Ni, Tobias Schimanski, Meihong Lin, Mrinmaya Sachan, Elliott Ash, Markus Leippold

NAACL 2025

Implicit Personalization in Language Models

Zhijing Jin, Nils Heil, Jiarui Liu, Shehzaad Dhuliawala, Yahang Qi, Bernhard Schölkopf, Rada Mihalcea, Mrinmaya Sachan

EMNLP 2024 (Findings)

How to Select Datapoints for Efficient Human Evaluation of NLG Models?

Vilém Zouhar, Peng Cui, Mrinmaya Sachan

preprint 2025

Grammar Control in Dialogue Response Generation for Language Learning Chatbots

Dominik Glandorf, Peng Cui, Detmar Meurers, Mrinmaya Sachan

NAACL 2025

Investigating the Zone of Proximal Development of Language Models for In-Context Learning

Peng Cui, Mrinmaya Sachan

NAACL 2025 (Findings)

AI-Assisted Human Evaluation of Machine Translation

Vilém Zouhar, Tom Kocmi, Mrinmaya Sachan

preprint 2024

Stepwise Verification and Remediation of Student Reasoning Errors with Large Language Model Tutors

Nico Daheim, Jakub Macina, Manu Kapur, Iryna Gurevych, Mrinmaya Sachan

EMNLP 2024

RELIC: Investigating Large Language Model Responses using Self-Consistency

Furui Cheng, Vilém Zouhar, Simran Arora, Mrinmaya Sachan, Hendrik Strobelt, Mennatallah El-Assady

CHI 2024

Error Span Annotation: A Balanced Approach for Human Evaluation of Machine Translation

Tom Kocmi, Vilém Zouhar, Eleftherios Avramidis, Roman Grundkiewicz, Marzena Karpinska, Maja Popović, Mrinmaya Sachan, Mariya Shmatova

WMT 2024

Towards Aligning Language Models with Textual Feedback

Saüc Abadal Lloret, Shehzaad Dhuliawala, Keerthiram Murugesan, Mrinmaya Sachan

ICML 2024 Workshop MHFAIA

Book2Dial: Generating Teacher Student Interactions from Textbooks for Cost-Effective Development of Educational Chatbots

Junling Wang, Jakub Macina, Nico Daheim, Sankalan Pal Chowdhury, Mrinmaya Sachan

ACL 2024 (Findings)

How to Engage your Readers? Generating Guiding Questions to Promote Active Reading

Peng Cui, Vilém Zouhar, Xiaoyu Zhang, Mrinmaya Sachan

ACL 2024

AutoTutor meets Large Language Models: A Language Model Tutor with Rich Pedagogy and Guardrails

Sankalan Pal Chowdhury, Vilém Zouhar, Mrinmaya Sachan

Learning at Scale 2024

Do Language Models Exhibit the Same Cognitive Biases in Problem Solving as Human Learners?

Andreas Opedal, Alessandro Stolfo, Haruki Shirakami, Ying Jiao, Ryan Cotterell, Bernhard Schölkopf, Abulhair Saparov, Mrinmaya Sachan

ICML 2024

PWESuite: Phonetic Word Embeddings and Tasks They Facilitate

Vilém Zouhar, Kalvin Chang, Chenxuan Cui, Nathaniel Carlson, Nathaniel Robinson, Mrinmaya Sachan and David Mortensen

LREC-COLING 2024

A Mechanistic Interpretation of Arithmetic Reasoning in Language Models using Causal Mediation Analysis

Alessandro Stolfo, Yonatan Belinkov and Mrinmaya Sachan

EMNLP 2023

MATHDIAL: A Dialogue Tutoring Dataset with Rich Pedagogical Properties Grounded in Math Reasoning Problems

Jakub Macina, Nico Daheim, Sankalan Pal Chowdhury, Tanmay Sinha, Manu Kapur, Iryna Gurevych and Mrinmaya Sachan

EMNLP 2023 (Findings)

Towards a Mechanistic Interpretation of Multi-Step Reasoning Capabilities of Language Models

Yifan Hou, Jiaoda Li, Yu Fei, Alessandro Stolfo, Wangchunshu Zhou, Guangtao Zeng, Antoine Bosselut and Mrinmaya Sachan

EMNLP 2023

Let’s Synthesize Step by Step: Iterative Dataset Synthesis with Large Language Models by Extrapolating Errors from Small Models

Ruida Wang, Wangchunshu Zhou and Mrinmaya Sachan

EMNLP 2023 (Findings)

Re-visiting Automated Topic Model Evaluation with Large Language Models

Dominik Stammbach, Vilém Zouhar, Alexander Hoyle, Mrinmaya Sachan and Elliott Ash

EMNLP 2023 (Short)

A Diachronic Perspective on User Trust in AI under Uncertainty

Shehzaad Dhuliawala, Vilém Zouhar, Mennatallah El-Assady and Mrinmaya Sachan

EMNLP 2023

Can Large Language Models Infer Causation from Correlation?

Zhijing Jin, Jiarui Liu, Zhiheng Lyu, Spencer Poff, Mrinmaya Sachan, Rada Mihalcea, Mona Diab and Bernhard Schölkopf

arXiv 2023

CLadder: A Benchmark to Assess Causal Reasoning Capabilities of Language Models

Zhijing Jin, Yuen Chen, Felix Leeb, Luigi Gresele, Ojasv Kamal, Zhiheng Lyu, Kevin Blin, Fernando Gonzalez Adauto, Max Kleiman-Weiner, Mrinmaya Sachan and Bernhard Schölkopf

NeurIPS 2023

Order-Theoretic Structured Prediction: Partially Ordering Tokens within a String

Tianyu Liu, Afra Amini, Mrinmaya Sachan and Ryan Cotterell

EMNLP 2023 (Outstanding Paper Award)

RecurrentGPT: Interactive Generation of (Arbitrarily) Long Text

Wangchunshu Zhou, Yuchen Eleanor Jiang, Peng Cui, Tiannan Wang, Zhenxin Xiao, Yifan Hou, Ryan Cotterell and Mrinmaya Sachan

arXiv 2023

Efficient Prompting via Dynamic In-Context Learning

Wangchunshu Zhou, Yuchen Eleanor Jiang, Ryan Cotterell and Mrinmaya Sachan

arXiv 2023

Beyond Good Intentions: Reporting the Research Landscape of NLP for Social Good

Fernando Gonzalez, Zhijing Jin, Bernhard Schölkopf, Tom Hope, Mrinmaya Sachan and Rada Mihalcea

EMNLP 2023 (Findings)

Enhancing Textbooks with Visuals from the Web for Improved Learning

Janvijay Singh, Vilém Zouhar and Mrinmaya Sachan

EMNLP 2023

Elastic Weight Removal for Faithful and Abstractive Dialogue Generation

Nico Daheim, Nouha Dziri, Mrinmaya Sachan, Iryna Gurevych and Edoardo M Ponti

arXiv:2303.17574

Investigating the Role of Centering Theory in the Context of Neural Coreference Resolution Systems

Yuchen Eleanor Jiang, Ryan Cotterell and Mrinmaya Sachan

arXiv:2210.14678

Adaptive and Personalized Exercise Generation for Online Language Learning

Peng Cui and Mrinmaya Sachan

ACL 2023

A Causal Framework to Quantify the Robustness of Mathematical Reasoning with Language Models

Alessandro Stolfo, Zhijing Jin, Kumar Shridhar, Bernhard Schölkopf and Mrinmaya Sachan

ACL 2023 (also at MATHAI Workshop at NeurIPS 22)

Distilling Reasoning Capabilities into Smaller Language Models

Kumar Shridhar, Alessandro Stolfo and Mrinmaya Sachan

ACL 2023 (Findings)

World Models for Math Story Problems

Andreas Opedal, Niklas Stoehr, Abulhair Saparov and Mrinmaya Sachan

ACL 2023 (Findings)

Byte-Pair Encoding is Approximately Optimal

Vilém Zouhar, Tim Vieira, Clara Meister, Juan Luis Gastaldi, Mrinmaya Sachan and Ryan Cotterell

ACL 2023 (Findings)

Tokenization and the Noiseless Channel

Vilém Zouhar, Clara Meister, Juan Luis Gastaldi, Li Du, Mrinmaya Sachan and Ryan Cotterell

ACL 2023

XDailyDialog: A Multilingual Parallel Dialog Corpus

Zeming Liu, Ping Nie, Jie Cai, Haifeng Wang, Zheng-Yu Niu, Peng Zhang, Mrinmaya Sachan and Kaiping Peng

ACL 2023

When Does Aggregating Multiple Skills with Multi-Task Learning Work? A Case Study in Financial NLP

Jingwei Ni, Zhijing Jin, Qian Wang, Mrinmaya Sachan and Markus Leippold

ACL 2023

Discourse-Centric Evaluation of Machine Translation with a Densely Annotated Parallel Corpus

Yuchen Eleanor Jiang, Tianyu Liu, Shuming Ma, Dongdong Zhang, Mrinmaya Sachan and Ryan Cotterell

ACL 2023

Membership Inference Attacks against Language Models via Neighbourhood Comparison

Justus Mattern, Fatemehsadat Mireshghallah, Zhijing Jin, Bernhard Schölkopf, Mrinmaya Sachan and Taylor Berg-Kirkpatrick

ACL 2023 (Findings)

Controlled Text Generation with Natural Language Instructions

Wangchunshu Zhou, Yuchen Eleanor Jiang, Ethan Wilcox, Ryan Cotterell and Mrinmaya Sachan

ICML 2023

Infusing Lattice Symmetry Priors in Attention Mechanisms for Sample-Efficient Abstract Geometric Reasoning

Mattia Atzeni, Mrinmaya Sachan and Andreas Loukas

ICML 2023

Educational Question Generation with Difficulty Level Controls

Ying Jiao, Kumar Shridhar, Peng Cui, Wangchunshu Zhou and Mrinmaya Sachan

AIED 2023

Opportunities and Challenges in Neural Dialog Tutoring

Jakub Macina, Nico Daheim, Lingzhi Wang, Tanmay Sinha, Manu Kapur, Iryna Gurevych and Mrinmaya Sachan

EACL 2023

Strategize Before Teaching: A Conversational Tutoring System with Pedagogy Self-Distillation

Lingzhi Wang, Mrinmaya Sachan, Xingshan Zeng and Kam-Fai Wong

EACL 2023 (Short, Findings)

Poor Man’s Quality Estimation: Predicting Reference-Based MT Metrics Without the Reference

Vilém Zouhar, Shehzaad Dhuliawala, Wangchunshu Zhou, Nico Daheim, Tom Kocmi, Yuchen Eleanor Jiang and Mrinmaya Sachan

EACL 2023

LongtoNotes: OntoNotes with Longer Coreference Chains

Kumar Shridhar, Nicholas Monath, Raghuveer Thirukovalluru, Alessandro Stolfo, Manzil Zaheer, Andrew McCallum and Mrinmaya Sachan

EACL 2023 (Findings)

Automatic Generation of Socratic Subquestions for Teaching Math Word Problems

Kumar Shridhar, Jakub Macina, Mennatallah El-Assady, Tanmay Sinha, Manu Kapur and Mrinmaya Sachan

EMNLP 2022 (also at MATHAI Workshop at NeurIPS 22)

Beyond prompting: Making Pre-trained Language Models Better Zero-shot Learners by Clustering Representations

Yu Fei, Zhao Meng, Ping Nie, Roger Wattenhofer and Mrinmaya Sachan

EMNLP 2022

Differentially Private Language Models for Secure Data Sharing

Justus Mattern, Zhijing Jin, Benjamin Weggenmann, Bernhard Schölkopf and Mrinmaya Sachan

EMNLP 2022

Autoregressive Structured Prediction with Language Models

Tianyu Liu, Yuchen Eleanor Jiang, Nicholas Monath, Ryan Cotterell and Mrinmaya Sachan

EMNLP 2022 (Findings, Short paper)

Adapters for Enhanced Modeling of Multilingual Knowledge and Text

Yifan Hou, Wenxiang Jiao, Meizhen Liu, Carl Allen, Zhaopeng Tu and Mrinmaya Sachan

EMNLP 2022 (Findings) / Best paper at the Multilingual Representation Learning (MRL) Workshop

Logical Fallacy Detection

Zhijing Jin, Abhinav Lalwani, Tejas Vaidhya, Xiaoyu Shen, Yiwen Ding, Zhiheng Lyu, Mrinmaya Sachan, Rada Mihalcea and Bernhard Schölkopf

EMNLP 2022 (Findings)

What has been Enhanced in my Knowledge-Enhanced Language Model?

Yifan Hou, Guoji Fu and Mrinmaya Sachan

EMNLP 2022 (Findings)

Rule-Based but Flexible? Evaluating and Improving Language Models as Accounts of Human Moral Judgment

Zhijing Jin, Sydney Levine, Fernando Gonzalez Adauto, Ojasv Kamal, Maarten Sap, Mrinmaya Sachan, Rada Mihalcea, Joshua B. Tenenbaum and Bernhard Schölkopf

NeurIPS 2022 (Oral) / CogSci 2022 (Disciplinary Diversity and Integration Award)

Learning the Transformer Kernel

Sankalan Pal Chowdhury, Adamos Solomou, Avinava Dubey and Mrinmaya Sachan

TMLR 2022

Probing via Prompting

Jiaoda Li, Ryan Cotterell and Mrinmaya Sachan

NAACL 2022

BlonDe: An Automatic Evaluation Metric for Document-level Machine Translation

Yuchen Eleanor Jiang, Tianyu Liu, Shuming Ma, Dongdong Zhang, Jian Yang, Haoyang Huang, Rico Sennrich, Mrinmaya Sachan, Ryan Cotterell and Ming Zhou

NAACL 2022

A Structured Span Selector

Tianyu Liu, Yuchen Eleanor Jiang, Ryan D Cotterell and Mrinmaya Sachan

NAACL 2022

Original or Translated? A Causal Analysis of the Impact of Translationese on Machine Translation Performance

Jingwei Ni, Zhijing Jin, Markus Freitag, Mrinmaya Sachan and Bernhard Schölkopf

NAACL 2022

Self-Supervised Contrastive Learning with Adversarial Perturbations for Robust Pretrained Language Models

Zhao Meng, Yihan Dong, Mrinmaya Sachan and Roger Wattenhofer

NAACL 2022 (Findings)

Slangvolution: A Causal Analysis of Semantic Change and Frequency Dynamics in Slang

Daphna Keidar, Andreas Opedal, Zhijing Jin and Mrinmaya Sachan

ACL 2022

Calibration of Machine Reading Systems at Scale

Shehzaad Dhuliawala, Leonard Adolphs, Rajarshi Das and Mrinmaya Sachan

ACL 2022 (Findings)

Case-based Reasoning for Better Generalization in Text-Adventure Games

Mattia Atzeni, Shehzaad Dhuliawala, Keerthiram Murugesan and Mrinmaya Sachan

ICLR 2022

Deep Clustering of Text Representations for Supervision-free Probing of Syntax

Vikram Gupta, Haoyue Shi, Kevin Gimpel and Mrinmaya Sachan

AAAI 2022

Causal Direction in Data Matters: Implications of Causal and Anticausal Learning in NLP

Zhijing Jin, Julius von Kügelgen, Jingwei Ni, Tejas Vaidhya, Ayush Kaushal, Mrinmaya Sachan and Bernhard Schölkopf

EMNLP 2021

Let Your Characters Tell Their Story: A Dataset for Character-Centric Narrative Understanding

Faeze Brahman, Meng Huang, Oyvind Tafjord, Chao Zhao, Mrinmaya Sachan and Snigdha Chaturvedi

EMNLP 2021 (Findings)

Differentiable Subset Pruning of Transformer Heads

Jiaoda Li, Ryan Cotterell and Mrinmaya Sachan

TACL 2021

Bird’s Eye: Probing for Linguistic Graph Structures with a Simple Information-Theoretic Approach

Yifan Hou and Mrinmaya Sachan

ACL 2021

Scaling Within Document Coreference for Long Texts

Raghuveer Thirukovalluru, Nicholas Monath, Kumar Shridhar, Manzil Zaheer, Mrinmaya Sachan and Andrew McCallum

ACL 2021 (Findings)

How Good Is NLP? A Sober Look at NLP Tasks through the Lens of Social Impact

Zhijing Jin, Geeticka Chauhan, Brian Tse, Mrinmaya Sachan and Rada Mihalcea

ACL 2021 (Findings)

Efficient Text-based Reinforcement Learning by Jointly Leveraging State and Commonsense Graph Representations

Keerthiram Murugesan, Mattia Atzeni, Pavan Kapanipathi, Kartik Talamadupula, Mrinmaya Sachan and Murray Campbell

ACL 2021 (Short paper)

Text-based RL Agents with Commonsense Knowledge: New Challenges, Environments and Baselines

Keerthiram Murugesan, Mattia Atzeni, Pavan Kapanipathi, Pushkar Shukla, Sadhana Kumaravel, Gerald Tesauro, Kartik Talamadupula, Mrinmaya Sachan and Murray Campbell

AAAI 2021

Stronger Transformers for Neural Multi-Hop Question Generation

Devendra Singh Sachan, Lingfei Wu, Mrinmaya Sachan and William Hamilton

arXiv:2010.11374 (2020)

Knowledge Graph Embedding Compression

Mrinmaya Sachan

ACL 2020

Discourse in Multimedia: A Case Study in Extracting Geometry Knowledge from Textbooks

Mrinmaya Sachan, Avinava Dubey, Eduard Hovy, Tom Mitchell, Dan Roth and Eric P. Xing

Computational Linguistics (CL) journal - Dec 2019 issue

Learning Pipelines with Limited Data and Domain Knowledge: A Study in Parsing Physics Problems

Mrinmaya Sachan, Avinava Dubey, Tom Mitchell, Dan Roth and Eric P. Xing

NeurIPS 2018

Parsing to Programs: A Framework for Situated QA

Mrinmaya Sachan and Eric P. Xing

KDD 2018

Self-Training for Jointly Learning to Ask and Answer Questions

Mrinmaya Sachan and Eric P. Xing

NAACL-HLT 2018

Contextual Parameter Generation for Universal Neural Machine Translation

Emmanouil Antonios Platanios, Mrinmaya Sachan, Graham Neubig and Tom Mitchell

EMNLP 2018

Effective Use of Bidirectional Language Modeling for Medical Named Entity Recognition

Devendra Singh Sachan, Pengtao Xie, Mrinmaya Sachan and Eric P. Xing

MLHC 2018

From Textbooks to Knowledge: A Case Study in Harvesting Axiomatic Knowledge from Textbooks to Solve Geometry Problems

Mrinmaya Sachan, Avinava Dubey and Eric P. Xing

EMNLP 2017

Learning to Solve Geometry Problems from Natural Language Demonstrations in Textbooks

Mrinmaya Sachan and Eric P. Xing

StarSem 2017

Easy Questions First? A Case Study on Curriculum Learning for Question Answering

Mrinmaya Sachan and Eric P. Xing

ACL 2016

Science Question Answering using Instructional Materials

Mrinmaya Sachan, Avinava Dubey and Eric P. Xing

ACL 2016 (Short paper)

Machine Comprehension using Rich Semantic Representations

Mrinmaya Sachan and Eric P. Xing

ACL 2016 (Short paper)

Learning Concept Taxonomies from Multi-modal Data

Hao Zhang, Zhiting Hu, Yuntian Deng, Mrinmaya Sachan, Zhicheng Yan and Eric P. Xing

ACL 2016

Grounding Topic Models with Knowledge Bases

Zhiting Hu, Gang Luo, Mrinmaya Sachan, Eric P. Xing and Zaiqing Nie

IJCAI 2016

Learning Answer-Entailing Structures for Machine Comprehension

Mrinmaya Sachan, Avinava Dubey, Eric P. Xing and Matthew Richardson

ACL 2015 (Outstanding paper)

An Active Learning Approach to Coreference Resolution

Mrinmaya Sachan, Eduard H. Hovy and Eric P. Xing

IJCAI 2015